-

I'm still struggling with this. I've manually created some external folders, but can't get the multi-line command to work.

@vwout, following my new experience with AlpineWSL on a PC (which I assume to be something very similar to Docker), I created the whole of the cmh-ludl folder as one single external volume. This is really convenient. Is it possible to make the same configuration for a Docker image?

-

I don't know what AlpineWSL is, but you can make a volume for your cmh-ludl folder and map it in vwout's Docker container.

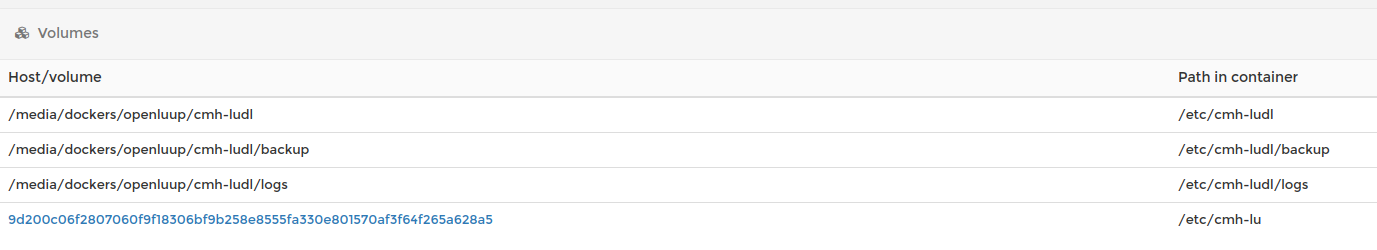

This is what it looks like in Portainer: it appears as a mapping. Really convenient, like you said.

-

Thanks for that. I guess my question is why these have to be done separately, rather than just making cmh-ludl/ (and all its subfolders) external (which, effectively, is what I’ve done for WSL).

I was trying to do it on the command line, since Portainer seemed to be several hundreds of megabytes, which seemed a bit of overkill just for this task (which, I think, is all I need it for.)

-

You mean not splitting it up into backup, log and cmh-ludl? That's vwout's choice, probably to allow flexibility in what you store where. You can do it from the command line too, but I'm not familiar with that. I don't think you have to do all the mappings; cmh-ludl alone is probably enough, but I'm not sure.
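For the command-line route, a minimal sketch of mapping the whole cmh-ludl folder to one named volume might look like the following. The volume name `openluup-env` and container name `openluup` are made up for illustration, and the `alpine` tag is an assumption based on the image variant mentioned elsewhere in this thread; the mount target /etc/cmh-ludl/ and port 3480 are the ones used in this thread.

```shell
# Sketch only: "openluup-env" and "openluup" are hypothetical names,
# and the "alpine" tag is an assumption.
start_openluup_with_volume() {
  volume="${1:-openluup-env}"
  # Create the named volume up front (docker run would also create it on first use).
  docker volume create "$volume"
  # Mount the whole cmh-ludl folder from that single volume.
  docker run -d --name openluup \
    -v "$volume":/etc/cmh-ludl/ \
    -p 3480:3480 \
    vwout/openluup:alpine
}
```

Calling `start_openluup_with_volume` with no argument uses the default volume name; pass another name as the first argument if you prefer.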

-

Why would you want to do that? It’s not as though installing Lua is difficult. What am I missing?

@akbooer Well, if I have a complete openLuup setup, except my data, in a Docker image and I need to rebuild a system from scratch, I can just run a script, restore my data and be back up and running. Creating a complete new system from scratch takes a lot more, especially if you need to set up Grafana, a DB, a webserver, ... plus all the packages I need to install again (I never document that well for the next time), etc.

-

@akbooer As @RHCPNG said, you can easily do this once you have a running setup: copy the contents of /etc/cmh-ludl/ to a local folder, then restart the container with that folder mounted to /etc/cmh-ludl/.

The difference between using a volume and a folder mount is (amongst other details) how Docker handles this at first startup. When using a volume, Docker copies the contents of e.g. /etc/cmh-ludl/ to that volume when the container is started with a new, empty volume. From that moment on, all changes are kept in the volume and survive container restarts and updates.

A mounted folder also preserves all settings but, in contrast to volumes, Docker does not copy the original contents of the mount target to the folder at first startup. This means you need to create the files needed in /etc/cmh-ludl/ yourself, which makes it harder to get started initially (except for one who masters openLuup).
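The copy-then-mount approach described above could be sketched roughly as follows. This is an illustration under assumptions, not vwout's documented procedure: the container name, the host folder and the `alpine` tag are hypothetical, and only port 3480 is mapped because it is the only port named in this thread.

```shell
# Sketch only: container name, host folder and image tag are assumptions.
# host_dir must be an absolute path, otherwise -v treats it as a volume name.
seed_and_bind_mount() {
  container="${1:-openluup}"
  host_dir="${2:-$HOME/openluup/cmh-ludl}"
  mkdir -p "$host_dir"
  # Copy the contents of /etc/cmh-ludl/ out of the existing container.
  # The trailing "/." copies the directory contents, not the directory itself.
  docker cp "$container":/etc/cmh-ludl/. "$host_dir"
  # Replace the container with one that bind-mounts the seeded folder.
  docker stop "$container"
  docker rm "$container"
  docker run -d --name "$container" \
    -v "$host_dir":/etc/cmh-ludl/ \
    -p 3480:3480 \
    vwout/openluup:alpine
}
```

Seeding the folder first is what makes up for Docker not populating a bind mount at first startup.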

-

I thought I'd give this a stab, as I have some dependency issues. I found an old laptop and installed Debian to test with.

I want the following apps to run on the machine:

- openLuup
- Z-Way
- Domoticz
- InfluxDB
- Grafana
- Miio Server (stupid implementation of domo plugin for my vacuum)

Any reason not to just have one Docker container each? They all use TCP/UDP for all communication (I use ser2net for USB ports).

I've had to install Python on the Domoticz image I have, and therefore saved a new image. Where is this image stored?
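On the "where is it stored" question, a hedged sketch: a committed image goes into the Docker daemon's local image store rather than into a file you choose, and on a default Linux install that store lives under /var/lib/docker. The container and image names below are hypothetical.

```shell
# Sketch only: "domoticz" and "domoticz-python:local" are hypothetical names.
save_and_locate_image() {
  container="${1:-domoticz}"
  image="${2:-domoticz-python:local}"
  # Snapshot the modified container as a new local image.
  docker commit "$container" "$image"
  # Confirm the image is now in the local image store.
  docker image ls "$image"
  # Print the directory the daemon keeps its images, containers and volumes under.
  docker info --format '{{.DockerRootDir}}'
}
```

If the image needs to move to another machine, `docker save` and `docker load` can export and import it as a tar archive.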

-

@vwout: There is a choice between Debian and Alpine in your image, why?

Are there advantages to one or the other? If it's just the interface/packages in the container, there wouldn't be a difference if you're not making changes to it, right? (Which ideally is not necessary.)

-

@perh said in Moving to Docker:

There is a choice between Debian and Alpine in your image, why?

The Alpine image is somewhat smaller. It comes with a more limited set of installed Linux utilities, but who cares about that in the openLuup environment?

-

As long as it works.

New question: I've set up two containers (Domoticz and Miio Server), and by default they are on the same network. However, this network gives the containers an IP at startup, and it seems to be first come, first served. Is there a way to give them static IPs in this internal Docker network?

I tried docker run --IP=<IP> without success. The plugin in Domo reaches the Miio server at that IP, so I need it to be the same after restarts!
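One likely reason the attempt above failed, offered as a sketch rather than a diagnosis: the flag is spelled lowercase (`--ip`), and Docker only accepts a fixed address on a user-defined network created with an explicit subnet, not on the default bridge. The network name, subnet, address, container name and image below are all hypothetical placeholders.

```shell
# Sketch only: every default value here is a made-up placeholder.
run_with_static_ip() {
  network="${1:-homenet}"
  subnet="${2:-172.25.0.0/24}"
  ip="${3:-172.25.0.10}"
  name="${4:-miio-server}"
  image="${5:-example/miio-server}"
  # A user-defined network with an explicit subnet is required for --ip.
  docker network create --subnet="$subnet" "$network"
  # Start the container on that network with a fixed address.
  docker run -d --name "$name" --network "$network" --ip "$ip" "$image"
}
```

The chosen address has to fall inside the subnet given to `docker network create`.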

-

OK, now I have a problem.

It was all going so well until I realised that all incoming IP addresses were being mapped to 172.17.0.1 by the LuaSocket library.

Reading the Docker docs: https://docs.docker.com/config/containers/container-networking/

"By default, the container is assigned an IP address for every Docker network it connects to. The IP address is assigned from the pool assigned to the network, so the Docker daemon effectively acts as a DHCP server for each container. Each network also has a default subnet mask and gateway."

But I don't understand, yet, how this works if I want a straightforward mapping of IPs from my LAN into the Docker container.

It may be that the Synology Docker interface is not adequate for this and I have to revert to the command line?

Any advice/clarification much appreciated.
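One option that may help here, sketched under assumptions: 172.17.0.1 is the gateway of Docker's default bridge network, so the remapping is probably a side effect of traffic being forwarded through that bridge. Running the container with host networking makes it share the host's network stack, so port mappings are not used and clients should appear with their LAN addresses. This works on Linux hosts; whether the Synology GUI exposes it is not something this thread confirms, and the container name and `alpine` tag are assumptions.

```shell
# Sketch only: container name and image tag are assumptions.
run_openluup_host_network() {
  # With --network host the container uses the host's interfaces directly,
  # so no -p port mappings are given.
  docker run -d --name openluup --network host vwout/openluup:alpine
}
```

The trade-off is that the container's ports are then bound straight on the host, so they can clash with other services already listening there.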

-

OK, I got the IP stuff figured out. A custom network must be created in order to choose the IP; then connect the container to it.

    sudo docker network create -d bridge --subnet=192.168.0.0/16 --ip-range=192.168.0.0/24 --gateway=192.168.0.254 <Network name>
    sudo docker network connect --ip=<IP> <Network created by you> <container name>
    sudo docker network disconnect bridge <container name>

I'm still struggling with getting openLuup to work as I want it. I tried to copy the cmh-ludl folder from my production system to the _data folder for my volume, but can't seem to overwrite user_data.json.

I tried to bind to a folder instead, but then it won't start, as the folder is missing the "openLuup_reload_for_docker" file.

What do I do next?

-

@perh said in Moving to Docker:

but can't seem to overwrite user_data.json.

You can't do this while the system is running, because the file gets overwritten with the current system state on restarts. Stop openLuup with a /data_request?id=exit request, change the file, then stop and restart the container (to restart openLuup).

-
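The stop, edit, restart sequence from the reply above might be scripted roughly like this. It is a sketch under assumptions: the host name, container name and source file path are hypothetical, and it assumes user_data.json sits directly in /etc/cmh-ludl/ inside the container.

```shell
# Sketch only: host, container name and file path are hypothetical defaults.
replace_user_data() {
  host="${1:-localhost}"
  container="${2:-openluup}"
  new_file="${3:-./user_data.json}"
  # Ask openLuup to exit so it stops rewriting user_data.json.
  curl -s "http://$host:3480/data_request?id=exit"
  # Copy the replacement file into the container's cmh-ludl folder.
  docker cp "$new_file" "$container":/etc/cmh-ludl/user_data.json
  # Stop and start the container so openLuup comes back up with the new file.
  docker restart "$container"
}
```

Keeping a copy of the old user_data.json before overwriting it is a cheap safety net.

-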

Solution: Don't bind the port at all in the Docker container, and call the IP of the host from the application running inside the container.

EDIT: Or even better, use the "gateway" IP of the internal Docker network; this way it's easier to move the whole setup to hardware with a different IP address without editing this attribute.
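To look up that gateway address, something along these lines should work. The function name is made up for illustration, and the default network name `bridge` is Docker's built-in network; pass the name of your own network instead if you created one.

```shell
# Sketch only: "network_gateway" is a hypothetical helper name.
network_gateway() {
  # Print the gateway address configured for a Docker network
  # (default: the built-in "bridge" network).
  docker network inspect \
    --format '{{range .IPAM.Config}}{{.Gateway}}{{end}}' \
    "${1:-bridge}"
}
```

The printed address is what the application inside the container would use to reach services on the host.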

-

For funsies, I decided to hop on board with OpenLuup by running @vwout's pre-built container (excellent piece of work, by the way, sir!) on my Synology NAS using Docker's wizards.

It took some convincing by others more experienced than myself (both with OpenLuup and Docker) NOT to change anything in the Synology NAS GUI before simply LAUNCH-ing the image. The only things I set manually were the ports, changing from 'Auto' to numbers matching those in the right column under Advanced Settings > Ports, then clicking "Apply".

From within DiskStation on your Synology NAS:

- Open Docker (install from Package Center if not installed)
- Registry > search for "OpenLuup" > select 'vwout / openluup' (alpine)
- Click Download.
- Images > select 'vwout / openluup' > click Launch.
- On the Advanced Settings tab > 'Advanced Settings' > check 'Enable auto-restart'
- On the Ports tab:
  • Change each port from "Auto" to match right-hand #.
- On the Environment tab:
  • Change (if desired) the 'Value' field next to TZ, from 'UTC' to your time zone (e.g. GMT-5)
- Click Apply

Your new OpenLuup container will now start.
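For anyone without the Synology GUI, a rough command-line counterpart of the steps above might look like this. It is a sketch, not a verified equivalent: `--restart always` is assumed to correspond to 'Enable auto-restart', the `alpine` tag and container name are assumptions, and only port 3480 is mapped because it is the only port number given in this thread (any other ports the image exposes would need their own `-p` entries).

```shell
# Sketch only: restart policy, tag and container name are assumptions.
launch_openluup() {
  tz="${1:-UTC}"
  docker run -d --name openluup \
    --restart always \
    -p 3480:3480 \
    -e TZ="$tz" \
    vwout/openluup:alpine
}
```

Pass as the first argument whatever value you would have typed into the TZ field.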

Head over to http://<nas_ip>:3480/console for some fun with OpenLuup!

BONUS: For step-by-step instructions on linking your Vera(s) to OpenLuup, see this reply below.

THANKS!

-

My first foray into Docker was with this image, on Synology NAS, but I didn’t mount external volumes. It’s worked flawlessly, which is just as well because I know very little about Docker, although I’m learning a bit because I’m creating my own version from a raw Alpine base.

So I’ve not seen this issue, ever... which doesn’t really help you at all, except to know that it can work.

A number of folk are running openLuup on Docker...

...and I'm about to try the same.

There are a couple of epic threads in the old place:

https://community.getvera.com/t/openluup-on-synology-via-docker/190108

https://community.getvera.com/t/openluup-on-docker-hub/199649

Much of the heavy lifting appears to have been done by @vwout there (and I've asked if they'll join us here!) There's a great GitHub repository:

https://github.com/vwout/docker-openluup

Hoping to get a conversation going here (and a new special openLuup/Docker section) not least because I know I'm going to need some help!

I've been doing other projects these past weeks, and have now started seeing sluggish behaviour in the system. I SSH'ed in and noticed that the hard drive was completely full!

I had some other issues as well that caused it to fill up, but I noticed that removing the openLuup container freed up 1.5 GB!

This is obviously not the persistent storage folder (cmh-ludl), so what else can do this?

On the base system, it showed up as a massive overlay2 folder in /var/lib/docker/.

Maybe @vwout knows some Docker hints on this?
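A hedged pointer for tracking this down: with the overlay2 storage driver, anything a container writes outside a mounted volume lands in its writable layer under /var/lib/docker/overlay2, and that layer is deleted along with the container. Possibly files written outside cmh-ludl (logs or backups, say) were accumulating there, but that is a guess. The two commands below report where the space goes; the function name is made up.

```shell
# Sketch only: "docker_disk_report" is a hypothetical helper name.
docker_disk_report() {
  # Summary of space used by images, containers, local volumes and build cache.
  docker system df
  # Per-container sizes: the SIZE column includes each container's writable layer.
  docker ps --all --size
}
```

A container whose SIZE keeps growing is writing data that probably belongs in a volume.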

I've now gotten most of my applications/components into the Docker system; the only one left is InfluxDB.

I already have the database files on an external SSD, and the plan was to have a volume for InfluxDB (which holds the .conf file) and a bind mount to the database folder.

However, these are not the only InfluxDB files that need to be persistent: when I load up the container, Influx has no information about the databases, even though I know the database folder is available.

Does anyone here know where InfluxDB stores database setup info? influxdb.conf just enables stuff and sets folders; the info on existing databases and settings is stored elsewhere.
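A possible answer, assuming this is InfluxDB 1.x with default paths: the database and retention-policy definitions are kept in a separate meta directory, which by default sits next to the data and wal directories under /var/lib/influxdb. Mounting only the data folder would leave the meta directory behind. The sketch below mounts the whole state directory; the host path, container name and image tag are hypothetical, and a custom influxdb.conf that points these directories elsewhere would need matching mounts.

```shell
# Sketch only: assumes InfluxDB 1.x defaults; host path and names are hypothetical.
run_influxdb() {
  host_dir="${1:-/mnt/ssd/influxdb}"
  # Mount the whole state directory, which by default holds
  # meta/ (database definitions), data/ and wal/.
  docker run -d --name influxdb \
    -p 8086:8086 \
    -v "$host_dir":/var/lib/influxdb \
    influxdb:1.8
}
```

For an existing installation, the meta directory from the old system would need to be copied alongside the data and wal folders.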

(should the docker forum be under software, not openluup?)

As part of my hardware infrastructure revamp (a move away from 'hobby' platforms to something a bit more solid) I've just switched from running Grafana (a very old version) on a BeagleBone Black (similar to RPi) to my Synology NAS under docker.

Although the old system was on the same platform as my main openLuup instance (or, perhaps, because of this), and with the new system the data has to be shipped across my LAN, this all seems to work much faster.

I had been running Grafana v3.1.1, because I couldn't upgrade on the old system, but I'm now at 7.3.7, which seems to be the latest Docker version. Its UI is a bit different from the old one, and it needed next to no configuration, apart from importing the old dashboard settings.

One thing I'm missing is that the old system had a pull-down menu to switch between dashboards, but the new one doesn't seem to have that, since it switches to a whole new page with a list of dashboards. This doesn't work too well on an iPad, since it brings up the keyboard and obscures half the choices. Am I missing something obvious here?

Thanks for any suggestions.