-

Just excited to have a new valuable member and a new option to install openLuup so that more can use and contribute.

As long as it doesn't become the only way. I was just sharing my experience on containers. Indeed it has its use case, a great way to start. It's not for everyone and like anything, should not be abused...

-

I've been using vwout's Docker image for a while now. I've asked for several additions to it and vwout has been very helpful. I think a Docker container is ideal for me. Problem solving is easier, because the different applications are containerized. It's easy to start an additional dev/test environment, and it needs fewer resources than a complete VM. Updating is also easier, as long as the image is actively maintained, of course.

But of course, everyone has to decide what works best for him/her. Docker is here to stay, that's for sure. I think you're going to like it, @akbooer.

Thanks for the docker again, @vwout! Great support. I have had no problems with it.

-


Ooh, super – another Docker expert.

I'm really stuck. I think that there's a vital piece of documentation that I haven't found/read. Whether this is Synology, or Docker, or GitHub/vwout, I'm not sure...

- I have Docker installed in Synology

- I have the (alpine) version of openLuup installed and running (apparently)

- but I can't access it at all or work out where the openLuup files go.

I think this is something to do with the method of persisting data:

docker-compose.yml

and whilst I understand that there are (probably) some commands to be run:

    docker run -d \
      -v openluup-env:/etc/cmh-ludl/ \
      -v openluup-logs:/etc/cmh-ludl/logs/ \
      -v openluup-backups:/etc/cmh-ludl/backup/ \
      -p 3480:3480 \
      vwout/openluup:alpine

I don't know where that goes either.

So I feel stupid, and stuck. (Nice, in fact, to understand how others feel when approaching something new.)

Any pointers, anyone?

-


@akbooer

I'm far from an expert, but I do have some experience with it, yes. I haven't composed a container through YAML yet. You first need to define/create the volumes; that's when you can state where the volumes should be "placed" and where the data resides. This can be a local directory or an NFS mount, for example. By default, Docker creates a directory inside its own data directory.

If you are not a Linux expert, like me (although I can manage), I would advise installing Portainer first. It gives you a nice GUI for managing your containers. Far easier than doing everything through the command line.
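For anyone who prefers the command line, here is a minimal sketch of the "create the volume first, then find out where its data lives" step described above. The function name `volume_path` is made up for illustration; the `docker volume create` and `docker volume inspect` commands are standard Docker CLI, and the volume name `openluup-env` is the one used elsewhere in this thread.

```shell
#!/bin/sh
# Hypothetical helper: create a named volume (harmless if it already exists)
# and print the host directory where Docker stores its data.
volume_path() {
  docker volume create "$1" >/dev/null &&
    docker volume inspect --format '{{ .Mountpoint }}' "$1"
}

# Usage (run over SSH on the Docker host):
#   volume_path openluup-env
```

The printed path is where files written to that volume end up on the host, which is usually the answer to "where do the files go?" when no explicit location was given.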

-


@akbooer said in Moving to Docker:

- but I can't access it at all or work out where the openLuup files go.

You are using the Synology Docker package to create and deploy your container, correct? At minimum, to make the container accessible, change the port settings to 3480 (local) : 3480 (container). Then you should be able to access it at http://YourSynologyIP:3480/
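The same port mapping can be sketched on the command line, for those not using the Synology GUI. The function names `start_openluup` and `check_openluup` are invented for this example; `-p host:container` and the `curl` options are standard, and the host name passed in is whatever your NAS is called.

```shell
#!/bin/sh
# Hypothetical helper: start the container with a host port (default 3480)
# published to the container's port 3480.
start_openluup() {
  docker run -d -p "${1:-3480}:3480" vwout/openluup:alpine
}

# Hypothetical helper: print the HTTP status code returned by the
# published port on the given host.
check_openluup() {
  curl -s -o /dev/null -w '%{http_code}\n' "http://$1:${2:-3480}/"
}

# Usage:
#   start_openluup
#   check_openluup YourSynologyIP
```

If `check_openluup` prints `000`, nothing answered on that port, which usually points at the port mapping rather than at openLuup itself.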

-


@kfxo said in Moving to Docker:

You are using the synology docker package to create and deploy your container, correct?

Yes, that's correct for now. I need also to investigate Portainer, apparently.

@kfxo said in Moving to Docker:

change the port settings to 3480(local):3480(container).

OK, I was looking for where to do that, but I guess, I'll look harder. Thanks.

-

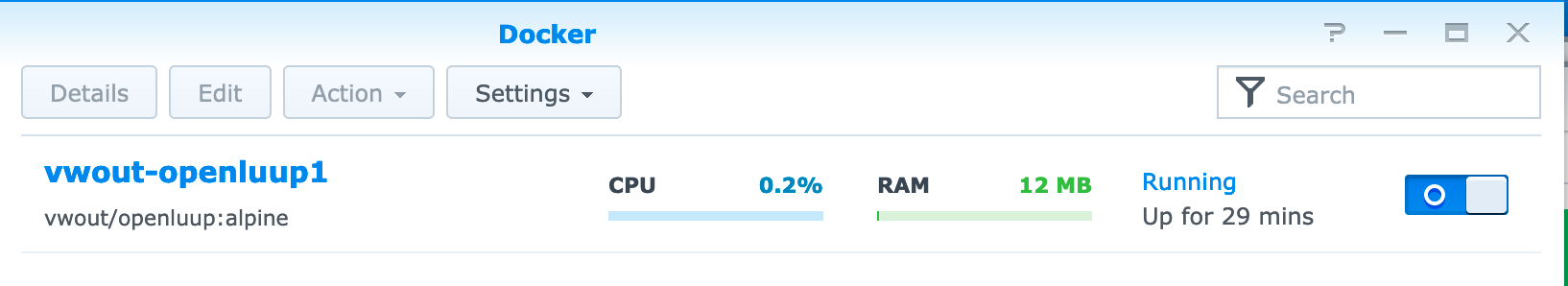

It's MAGIC !

I particularly like the look of this...

This instills a sense of wonder in me... I mean, I know (almost) exactly how openLuup works, but to see it wrapped in a container running on a NAS, seems rather special. "Hats off" ("chapeau?") to those who have engineered this, not least @vwout.

One is never quite finished, however.

I understand that to make any changes last across container/NAS restarts, I need to make the volumes external to the container, as per the above yaml. So this is my next step.

Any further insights welcomed. Perhaps Portainer is that next step?

-


@akbooer said in Moving to Docker:

I understand that to make any changes last across container/NAS restarts, I need to make the volumes external to the container, as per the above yaml. So this is my next step.

Any further insights welcomed. Perhaps Portainer is that next step?

You cannot create named volumes through the Synology GUI. You will need to use Portainer, or you can SSH into your Synology and run the manual commands as outlined by vwout/openluup:

You can also do this manually. Start by creating docker volumes:

    docker volume create openluup-env
    docker volume create openluup-logs
    docker volume create openluup-backups

Create an openLuup container, e.g. based on Alpine linux and mount the created (still empty) volumes:

    docker run -d \
      -v openluup-env:/etc/cmh-ludl/ \
      -v openluup-logs:/etc/cmh-ludl/logs/ \
      -v openluup-backups:/etc/cmh-ludl/backup/ \
      -p 3480:3480 \
      vwout/openluup:alpine

Note that each line of the docker run command except the last must end with a backslash; without them the shell treats every line as a separate command.

-


@akbooer

I have been messing with this today. I do not currently run openLuup through Docker, so this may not even be the best way to do it, but it is what I did to get it up and running with the ability to easily see and manipulate all openLuup files within "File Station" on the Synology.

I created three folders on my Synology:

- /docker/openLuup/cmh-ludl

- /docker/openLuup/logs

- /docker/openLuup/backups

Then I created three volumes, each bound to one of the created folders:

- 'openluup-env' bound to '/volume1/docker/openLuup/cmh-ludl'

- 'openluup-logs' bound to '/volume1/docker/openLuup/logs'

- 'openluup-backups' bound to '/volume1/docker/openLuup/backups'

I used Portainer, but you can SSH into your Synology and use the command line to create these volumes with the binds, see this

Then I created the container using Portainer, making sure to set the correct ports and volume mappings, which can also be done on the command line with

    docker run -d \
      -v openluup-env:/etc/cmh-ludl/ \
      -v openluup-logs:/etc/cmh-ludl/logs/ \
      -v openluup-backups:/etc/cmh-ludl/backup/ \
      -p 3480:3480 \
      vwout/openluup:alpine

Hope this helps.

Edit: The binds are not necessary; I only did it to make it easy to access the files. Without the binds, the volumes are somewhat hidden on the Synology, in a location that is not easily accessible, for example '/volume1/@docker/volumes/openluup-env/_data'
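As a rough sketch of the command-line route for the bound volumes described above: the local volume driver accepts `type`, `o` and `device` options, which is one way to point a named volume at an existing folder. The function name `create_bound_volume` is made up for this example, and the paths are the ones from this post; the folders need to exist before the volume is first used.

```shell
#!/bin/sh
# Hypothetical helper: create a named volume whose data lives in an
# existing host folder (a "bind" through the local volume driver).
#   $1 = volume name, $2 = absolute path of the host folder
create_bound_volume() {
  docker volume create --driver local \
    --opt type=none --opt o=bind --opt device="$2" "$1"
}

# Usage, with the folders created above:
#   create_bound_volume openluup-env     /volume1/docker/openLuup/cmh-ludl
#   create_bound_volume openluup-logs    /volume1/docker/openLuup/logs
#   create_bound_volume openluup-backups /volume1/docker/openLuup/backups
```

The container is then started with the same `-v openluup-env:/etc/cmh-ludl/` style mappings as before, since the container only sees the volume names.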

-

I'm still struggling with this. I've manually created some external folders, but can't get the multi-line command to work.

@vwout, following my new experience with AlpineWSL on a PC (which I assume to be something very similar to Docker) I created the whole of the cmh-ludl folder as one single external volume. This is really convenient. Is it possible to make the same configuration for a Docker image?

-

Hi @vwout, can your Docker image also be used on the arm64 platform (Pi4)? If not, how would I go about building one? I think I would start with an Ubuntu Docker image and then use the same setup as on a plain Pi.

I am setting up some new things on a Pi4 and came across this (https://github.com/SensorsIot/IOTstack) for using Docker on a Pi. It looks like a very quick way to (re)build an environment from a basic starting point. The split between user data and everything else looks especially attractive.

Cheers Rene

-

I'm still struggling with this. I've manually created some external folders, but can't get the multi-line command to work.

@vwout, following my new experience with AlpineWSL on a PC (which I assume to be something very similar to Docker) I created the whole of the

cmh-ludlfolder as one single external volume. This is really convenient. Is it possible to make the same configuration for a Docker image?@akbooer said in Moving to Docker:

I'm still struggling with this. I've manually created some external folders, but can't get the multi-line command to work.

@vwout, following my new experience with AlpineWSL on a PC (which I assume to be something very similar to Docker) I created the whole of the

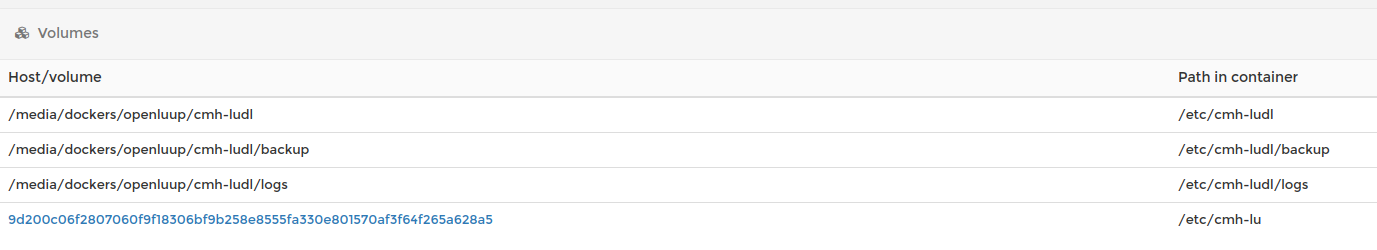

cmh-ludlfolder as one single external volume. This is really convenient. Is it possible to make the same configuration for a Docker image?I don't know what AlpineWSL is, but you can make a volume for your cmh-ludl folder and map it in vwout's docker container.

This is what it looks like in portainer, like a mapping. Really convenient, like you said.

-


Thanks for that. I guess my question is why these have to be done separately, rather than just making cmh-ludl/ (and all its sub folders) external (which, effectively is what I’ve done for WSL.)

I was trying to do it on the command line, since Portainer seemed to be several hundreds of megabytes, which seemed a bit of overkill just for this task (which, I think, is all I need it for.)

-

You mean not splitting it up into backup, log and cmh-ludl? That's vwout's choice, probably to stay flexible about what you store where. You can do it on the command line too, but I'm not familiar with that. I don't think you have to do all the mappings. Mapping cmh-ludl alone is probably enough, but I'm not sure.
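A minimal sketch of the single-mapping variant discussed here, on the command line. It relies only on the fact that the logs and backup paths used in this thread (/etc/cmh-ludl/logs/ and /etc/cmh-ludl/backup/) sit underneath /etc/cmh-ludl/, so one volume mounted there contains them as ordinary subfolders. Whether the image behaves identically with a single volume is an assumption that has not been checked against vwout's documentation; the function name is made up.

```shell
#!/bin/sh
# Hypothetical helper: run openLuup with one volume covering the whole
# cmh-ludl tree instead of three separate ones.
#   $1 = volume name (default: openluup-env)
run_openluup_single_volume() {
  docker run -d \
    -v "${1:-openluup-env}:/etc/cmh-ludl/" \
    -p 3480:3480 \
    vwout/openluup:alpine
}

# Usage:
#   run_openluup_single_volume
```

The three-volume layout remains useful if logs or backups should live on a different disk from the main installation.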

-

Why would you want to do that? It’s not as though installing Lua is difficult. What am I missing?

@akbooer Well, if I have a complete openLuup setup, except my data, in a Docker image and I need to rebuild a system from scratch, I can just run a script, restore my data and be back up and running. Creating a complete new system from scratch takes a lot more, especially if you need Grafana, a DB, a webserver, and so on. There are all the packages I would need to install again (I never document that well for the next time), etc.

-


A number of folk are running openLuup on Docker...

...and I'm about to try the same.

There are a couple of epic threads in the old place:

https://community.getvera.com/t/openluup-on-synology-via-docker/190108

https://community.getvera.com/t/openluup-on-docker-hub/199649

Much of the heavy lifting appears to have been done by @vwout there (and I've asked if they'll join us here!) There's a great GitHub repository:

https://github.com/vwout/docker-openluup

Hoping to get a conversation going here (and a new special openLuup/Docker section) not least because I know I'm going to need some help!

I've been doing other projects for the past few weeks, and have now started seeing sluggish behaviour in the system. I SSH'ed in and noticed that the hard drive was completely full!

I had some other issues as well that caused it to fill up, but I noticed that removing the openLuup container freed up 1.5GB!

This is obviously not the persistent storage folder (cmh-ludl), so what else can do this?

On the base system, it showed up as a massive /overlay2 folder in the /var/lib/docker/..

Maybe @vwout knows some Docker hints on this?
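A few standard Docker commands can help narrow down what is growing. The sketch below wraps them in two made-up helper functions; `docker system df`, `docker ps --size` and `docker diff` are regular Docker CLI commands. One possible cause, offered as a guess rather than a diagnosis, is files written inside the container outside any mapped volume, since those land in the container's writable layer under overlay2.

```shell
#!/bin/sh
# Hypothetical helper: overall disk usage, then per-container sizes.
docker_disk_report() {
  docker system df        # totals for images, containers, volumes, build cache
  docker ps --all --size  # adds a SIZE column for each container's writable layer
}

# Hypothetical helper: list files added (A), changed (C) or deleted (D)
# inside a container compared with its image.
#   $1 = container name or ID
container_changes() {
  docker diff "$1"
}

# Usage:
#   docker_disk_report
#   container_changes openluup
```

Anything large that shows up in `container_changes` is a candidate for moving onto a volume.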

I've now got most of my applications/components into the Docker system; the only one left is InfluxDB.

I already have the database files on an external SSD, and the plan was to have a volume for InfluxDB (which holds the .conf file), and a bind mount to the database folder.

These are, however, not the only InfluxDB files that need to be persistent: when I start the container, InfluxDB has no information about the databases, even though I know the database folder is available.

Does anyone here know where InfluxDB stores database setup info? influxdb.conf just enables features and sets folders; the info on existing databases and settings is stored elsewhere.

(should the docker forum be under software, not openluup?)
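One way to see which folders need to persist is to list every directory the config file points at. The sketch below assumes an InfluxDB 1.x style influxdb.conf, where the [meta] section has a `dir` setting and the [data] section has `dir` and `wal-dir`; in 1.x the meta directory is where the list of databases, users and retention policies is kept, so it needs to persist alongside the data and WAL directories. The function name `influx_dirs` is made up, and other InfluxDB versions are laid out differently.

```shell
#!/bin/sh
# Hypothetical helper: print the dir and wal-dir settings found in an
# InfluxDB 1.x config file, one per line, with quotes stripped.
#   $1 = path to influxdb.conf
influx_dirs() {
  grep -E '^[[:space:]]*(dir|wal-dir)[[:space:]]*=' "$1" |
    sed -E 's/^[[:space:]]*//; s/[[:space:]]*=[[:space:]]*/=/; s/"//g'
}

# Usage:
#   influx_dirs /path/to/influxdb.conf
```

Each path printed is a candidate for a volume or bind mount; commented-out settings are skipped because they start with `#`.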

As part of my hardware infrastructure revamp (a move away from 'hobby' platforms to something a bit more solid) I've just switched from running Grafana (a very old version) on a BeagleBone Black (similar to RPi) to my Synology NAS under docker.

The old system was on the same platform as my main openLuup instance, whereas with the new system the data has to be shipped across my LAN. Despite this (or, perhaps, because of it), everything seems to work much faster.

I had been running Grafana v3.1.1, because I couldn't upgrade on the old system, but I am now at 7.3.7, which seems to be the latest Docker version. The UI is a bit different from the old one, and it needed next to no configuration, apart from importing the old dashboard settings.

One thing I'm missing is that the old system had a pull-down menu to switch between dashboards, but the new one doesn't seem to have that, since it switches to a whole new page with a list of dashboards. This doesn't work too well on an iPad, since it brings up the keyboard and obscures half the choices. Am I missing something obvious here?

Thanks for any suggestions.