TTS in MSR?

-

Hi!

In Home Assistant I sometimes use TTS, with either my Sonos or my Google speakers. I also use TTS with Reactor on Vera.

But in MSR I can't select the TTS service. It's simply not there. Am I missing something, or is it just not supported yet?

Thanks!

/Fanan

-

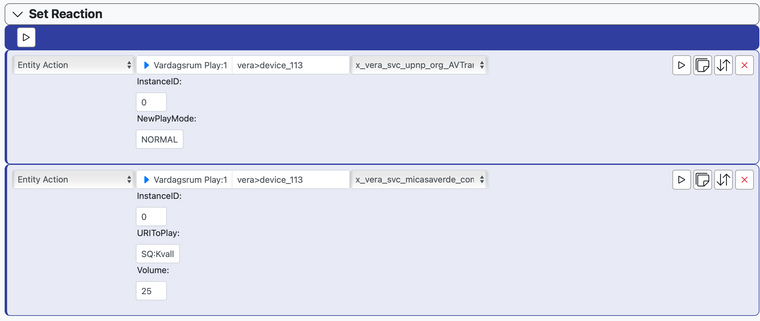

In MSR, try the following action (assuming you have the Sonos_TTS plug-in running on Vera):

[ Entity Action ] [ Sonos device ] [ x_vera_svc_micasaverde_com_Sonos1.say ]

where these fields are all (mostly) optional: Text, Language, Engine [AZURE], Volume, SameVolumeForAll, GroupDevices, GroupZones, Chime, Repeat, UnMute, UseCache.

Hope I'm pointing you in the right direction; that's what I see in my (only) rule that produces TTS output.

-

Home Assistant does not enumerate the full list of services supported by a device in any of its APIs. It's a bit of a hole (but not unusual -- Hubitat is the same).

You can tell MSR that a device supports a service by creating a file `local_hass_devices.yaml` with contents similar to the following (modify the entity ID as needed):

```yaml
# This file has local definitions for HomeAssistant devices.
entities:
  # This media player is a Sonos device, so it supports the Hass "tts" service
  "hass>media_player_portable":
    services:
      - tts
```

Remember YAML indenting is done with spaces, not tabs; two spaces per level. Use yamlchecker.com for check/diagnostics.

-


@toggledbits That worked like a charm!

A new question: I send the TTS message to a Nest smart display. Is it possible to have the text visible on the screen? Or even a selected picture? Maybe that's too much... But it would still be cool! Thanks for giving me the TTS option!

-

@toggledbits, I wonder if it's possible to use the "PlayURI" command, as in Reactor on Vera. The aim is to play a certain Sonos playlist. My Sonos is visible both as a Vera integration and as an HA integration. The Vera integration shows up as an option, but doesn't work. The HA integration doesn't seem to have that option in MSR. I tried to add it using the same method you described above, but that didn't work. Do you know how to fix this?

Best regards,

/Fanan

-


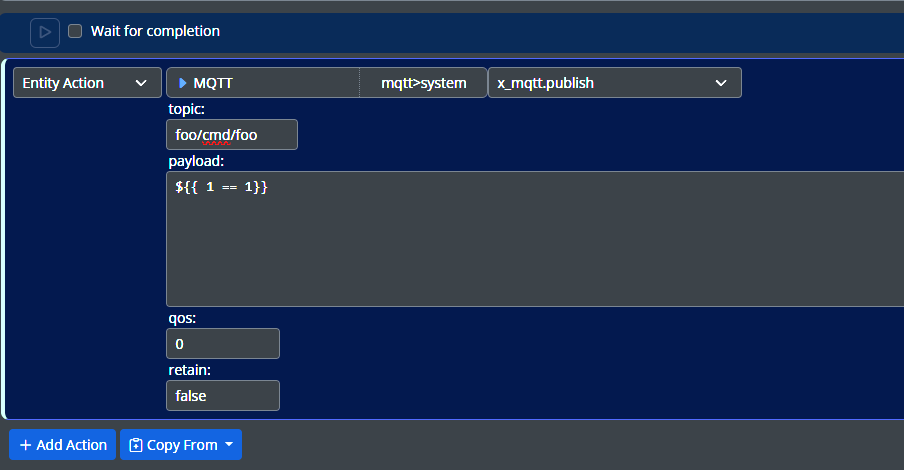

For Hass, the `x_hass_media_player.play_media` action is the one to use, but the parameters are platform (Sonos) dependent and you need to figure them out. The Hass documentation for the Sonos component has some information, but it's anything but user-friendly. Google is your friend.

For Hubitat, I've never had any luck getting Sonos to play queues, even from Hubitat's own UI.

I will say, this is a reflection of how much the Sonos plugin on Vera simplifies the use of the zone player, because the Hass and Hubitat interfaces are pretty raw/low-level, and Sonos is complex.
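Since the parameters are integration-specific, one approach (an assumption, not something MSR requires) is to get the call working from Home Assistant's Developer Tools first, then carry the same values over to the MSR action. `media_player.play_media` with `media_content_type` and `media_content_id` is the standard Hass service shape; the entity ID and both content values below are placeholders, not known-good values for a Sonos playlist.

```yaml
# Home Assistant service call, e.g. run from Developer Tools in YAML mode.
# Entity ID and media values are placeholders, not verified Sonos values.
service: media_player.play_media
target:
  entity_id: media_player.living_room   # hypothetical Sonos entity
data:
  media_content_type: playlist          # placeholder; check the Sonos integration docs
  media_content_id: "My Playlist"       # placeholder; format is integration-specific
```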

-


@toggledbits And in Hubitat?

-

I think I answered that.

-


@matteburk Sorry, I missed your last post.

-

This news just in from Microsoft:

"Thanks for using Azure Text-to-Speech. We would like to share the great news that we have recently released 12 new regions. We also released neural Text-to-Speech in 10 more languages, a new neural voice (Jenny Multilingual) that speaks 14 languages, 11 more neural voices in American English and 5 more neural voices in Chinese. When using neural voice, synthesized speech is nearly indistinguishable from human recordings."

-


@librasun I'm trying to add Microsoft's TTS service, but it doesn't work. I got an API key and everything, and it still doesn't work. My aim is to use a Swedish neural voice instead of Google's voices. Is it working for you?

EDIT: I got it working! The neural voices aren't available in my region, so I had to change the region (by creating a new service) while logged in to Azure.

-

I am trying to enable MSR TTS on Google Home devices. I found that the local_hass_devices.yaml file already existed, with the above example.

So, in the true spirit of cutting and pasting while not knowing what I am doing, I ended up with the following file:

```yaml
# This file has local definitions for HomeAssistant devices.
---
entities:
  # Entry shows how an entity can have a service added and its name forced
  "hass>sensor_connected_clients":
    name: "WebSocket Clients"
    services:
      - homeassistant
  # Sample Sonos device, add the Hass "tts" service for text-to-speech capability
  "hass>media_player_sonos_living_room":
    service:
      - tts
  # Sample Sonos device, add the Hass "tts" service for text-to-speech capability
  "hass>media_player.family_room_speaker":
    service:
      - tts
```

-

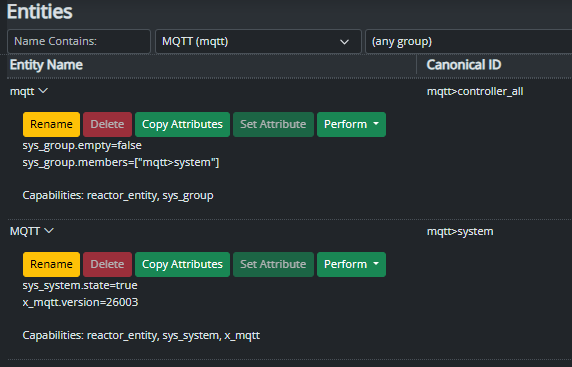

What you've done here (correct approach, one little error, see below) is declare that those two `media_player` devices have the `tts` service as an additional declared service. That will cause MSR to bring in the attributes and actions of that service as supported for the device.

Hass doesn't publish through its API which Hass services a Hass entity supports. The only service that can be known for certain is the one that is part of the Hass entity ID (e.g. the Hass entity ID `media_player.family_room_speaker` tells you that it will support the `media_player` service). Any other supported services (like `tts`) aren't enumerated by the API, sadly. In a few cases they can be inferred from a bit mask, but that is an extension that is highly implementation/component dependent and not consistent at all; I try to pick this up when it's known. This manual way of connecting additional services/capabilities to the entities is the best I could do at the moment.

Other things you can do in this file are override the name of the device (by supplying `name: "myoverridename"` at the same indent level as `service:`), and change the primary attribute (`primary_attribute: "servicename.attributename"`). When setting/overriding the primary attribute, the service must be supported by the device (so known by the device ID or added via `services:` in this file).

One small correction, though: in the canonical ID for the third entry, `hass>media_player.family_room_speaker`, the dot (.) should probably be an underscore (_). Confirm on the Entities list.

-

Picking up on my old thread: I just got Alexa working with TTS in Home Assistant. Is it possible to get it to work with MSR as well? I added the speakers to my local_hass_devices.yaml, and then I got the same TTS options as with the Google speakers. But that doesn't work for sending TTS messages; in HA I have to add "Type: tts" for it to work. I also don't see the native Alexa voice as an option, only the same ones as for the Google speakers. It's not a big deal for me personally, but it would be a fun and maybe useful feature in the future.

-

Let me play around with this a bit. You can add the `notify` service to the entity (have you done this?), but I'm not sure about the rest of the parameters. Some are tricky. But I want to get something like this going in my house, so it's a good excuse to figure it out...

-

@toggledbits said in TTS in MSR?:

> You can add the `notify` service to the entity (have you done this?)

@toggledbits No, I haven't used the notify service. I might give it a try later this week. If you (or anyone else) figure it out, please share!

-


@fanan Per this topic, https://smarthome.community/topic/744/sending-a-service-request-to-home-assistant?_=1638829172919, I couldn't get Alexa TTS to work in MSR, but I do have it working in openLuup with the following code, which sits inside a simple plugin:

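```lua
-- Modules used by the request: LuaSocket, LTN12, and a JSON encoder
-- (dkjson is assumed here; any module with an encode() function will do).
local http  = require "socket.http"
local ltn12 = require "ltn12"
local json  = require "dkjson"

-- HomeAssistantIP, HomeAssistantPort and HomeAssistantToken are defined
-- elsewhere in the plugin; myEchoDevice and myEchoMessage are the inputs.
myEchoDevice = "alexa_media_" .. myEchoDevice   -- HA notify service name
myEchoMessage = table.concat(myEchoMessage)     -- message table -> string

local request_body = json.encode {
  message = myEchoMessage,
  data = { ["type"] = "announce", method = "all" },
}
local response_body = {}
local theURL = "http://" .. HomeAssistantIP .. ":" .. HomeAssistantPort
  .. "/api/services/notify/" .. myEchoDevice

-- POST the service call to the Home Assistant REST API;
-- c holds the HTTP status code, response_body collects the reply.
local r, c, h = http.request {
  url = theURL,
  method = "POST",
  headers = {
    ["Content-Type"] = "application/json",
    ["Authorization"] = "Bearer " .. HomeAssistantToken,
    ["Content-Length"] = tostring(#request_body),
  },
  source = ltn12.source.string(request_body),
  sink = ltn12.sink.table(response_body),
}
```

The variable `myEchoDevice` is the exact name of the specific Echo device (or device group) as presented in the Amazon Alexa app. I typically broadcast to all my devices via a group, intercom style, so I catch the message wherever I am in my home. `HomeAssistantToken` is a long-lived access token that needs to be created in HA. `myEchoMessage` is a Lua table in the above code, but you could just as easily capture the message as a string and trim it before sending it in the HTTP POST request.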
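For testing, the same request can be expressed as a Home Assistant service call and run from Developer Tools before it goes into a plugin. This is a sketch that mirrors the JSON body built in the Lua code (`message` plus a nested `data` block); the service name `alexa_media_everywhere` and the message text are hypothetical.

```yaml
# Mirrors the Lua POST to /api/services/notify/alexa_media_<device>.
# "everywhere" is a hypothetical Alexa device/group name.
service: notify.alexa_media_everywhere
data:
  message: "Someone is at the front door"   # hypothetical message
  data:
    type: announce   # "tts" is the type mentioned earlier in the thread
    method: all
```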