here in the community app in the reply box

Donato

Posts

-

openLuup log files - LuaUPnP.log and LuaUPnP_startup.log

-

openLuup log files - LuaUPnP.log and LuaUPnP_startup.login my actual user_data file double quotes are preceded by \.

when I paste the file as text and submit the reply here the \ symbol is eliminated .

Following I paste as code :

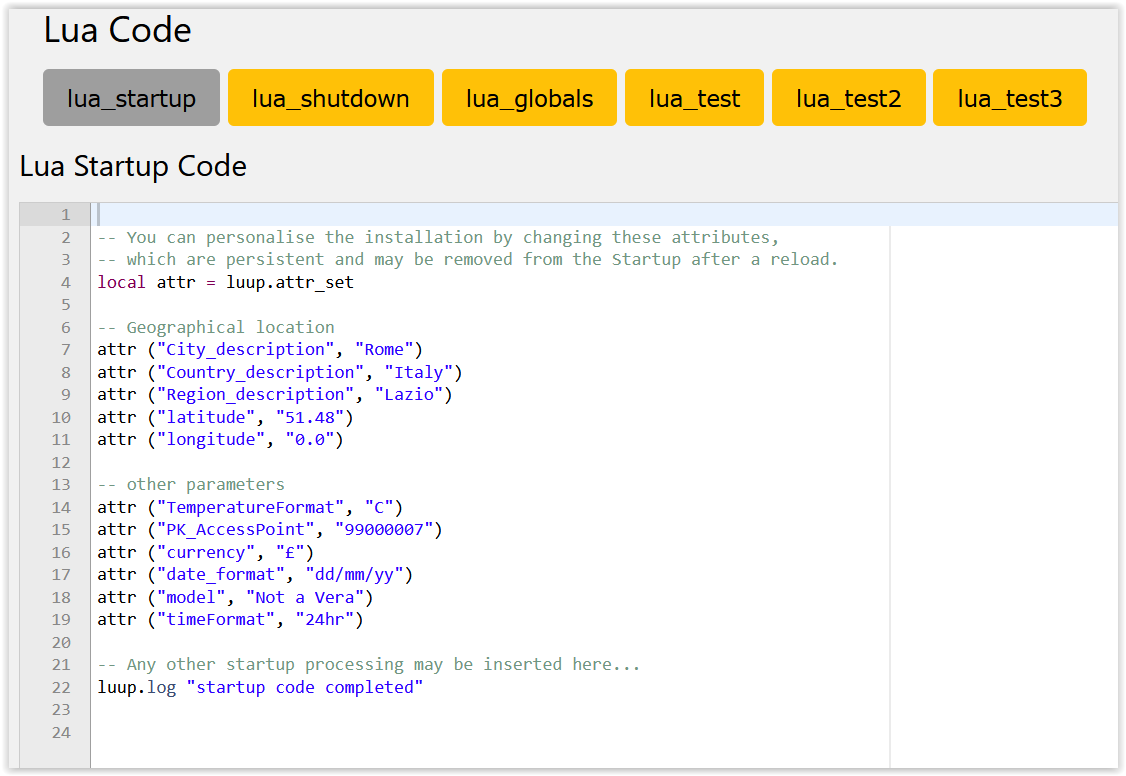

"StartupCode":"\n-- You can personalise the installation by changing these attributes,\n-- which are persistent and may be removed from the Startup after a reload.\nlocal attr = luup.attr_set\n\n-- Geographical location\nattr (\"City_description\", \"Rome\")\nattr (\"Country_description\", \"Italy\")\nattr (\"Region_description\", \"Lazio\")\nattr (\"latitude\", \"51.48\")\nattr (\"longitude\", \"0.0\")\n\n-- other parameters\nattr (\"TemperatureFormat\", \"C\")\nattr (\"PK_AccessPoint\", \"99000007\")\nattr (\"currency\", \"£\")\nattr (\"date_format\", \"dd/mm/yy\")\nattr (\"model\", \"Not a Vera\")\nattr (\"timeFormat\", \"24hr\")\n\n-- Any other startup processing may be inserted here...\nluup.log \"startup code completed\"\n\n",sorry for my error

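The backslashes are required by JSON itself: inside a JSON string a double quote must be written as `\"` and a newline as `\n`, so the StartupCode value in user_data.json is only valid with them. The plain-text copies further down, where the forum removed them, would not decode. The sketch below shows that escaping in Lua with a hypothetical `json_escape` helper (not openLuup's own JSON module), covering the characters that occur in StartupCode.

```lua
-- Hypothetical helper: escape a Lua string so it can be stored
-- as a JSON string value (quote, backslash and control characters).
local function json_escape (s)
  local map = {
    ['"']  = '\\"',
    ['\\'] = '\\\\',
    ['\n'] = '\\n',
    ['\r'] = '\\r',
    ['\t'] = '\\t',
  }
  -- any other control character becomes a \u00XX escape
  local out = s:gsub ('[%c"\\]', function (c)
    return map[c] or string.format ('\\u%04x', c:byte ())
  end)
  return '"' .. out .. '"'
end

print (json_escape 'attr ("City_description", "Rome")\n')
--> "attr (\"City_description\", \"Rome\")\n"
```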
-

openLuup log files - LuaUPnP.log and LuaUPnP_startup.log

Attached is a copy of the startup Lua code, and here are the few lines around the error:

"Mode":"1",

"ModeSetting":"1:DC*;2:DC*;3:DC*;4:DC*",

"PK_AccessPoint":"99000007",

"Region_description":"Lazio",

"ShutdownCode":"",

"StartupCode":"\n-- You can personalise the installation by changing these attributes,\n-- which are persistent and may be removed from the Startup after a reload.\nlocal attr = luup.attr_set\n\n-- Geographical location\nattr ("City_description", "Rome")\nattr ("Country_description", "Italy")\nattr ("Region_description", "Lazio")\nattr ("latitude", "51.48")\nattr ("longitude", "0.0")\n\n-- other parameters\nattr ("TemperatureFormat", "C")\nattr ("PK_AccessPoint", "99000007")\nattr ("currency", "£")\nattr ("date_format", "dd/mm/yy")\nattr ("model", "Not a Vera")\nattr ("timeFormat", "24hr")\n\n-- Any other startup processing may be inserted here...\nluup.log "startup code completed"\n\n",

"TemperatureFormat":"C",

"ThousandsSeparator":",",

"currency":"£",

"date_format":"dd/mm/yy",

-

openLuup log files - LuaUPnP.log and LuaUPnP_startup.log

Line 176 above is inside the user_data file, and every parameter is separated by ",". Below are some lines around line 176:

"Region_description":"Lazio",

"ShutdownCode":"",

"StartupCode":"\n-- You can personalise the installation by changing these attributes,\n-- which are persistent and may be removed from the Startup after a reload.\nlocal attr = luup.attr_set\n\n-- Geographical location\nattr ("City_description", "Rome")\nattr ("Country_description", "Italy")\nattr ("Region_description", "Lazio")\nattr ("latitude", "51.48")\nattr ("longitude", "0.0")\n\n-- other parameters\nattr ("TemperatureFormat", "C")\nattr ("PK_AccessPoint", "99000007")\nattr ("currency", "£")\nattr ("date_format", "dd/mm/yy")\nattr ("model", "Not a Vera")\nattr ("timeFormat", "24hr")\n\n-- Any other startup processing may be inserted here...\nluup.log "startup code completed"\n\n",

"TemperatureFormat":"C",

"ThousandsSeparator":",",

-

openLuup log files - LuaUPnP.log and LuaUPnP_startup.log

This is line 176 of the user_data JSON file:

"StartupCode":"\n-- You can personalise the installation by changing these attributes,\n-- which are persistent and may be removed from the Startup after a reload.\nlocal attr = luup.attr_set\n\n-- Geographical location\nattr ("City_description", "Rome")\nattr ("Country_description", "Italy")\nattr ("Region_description", "Lazio")\nattr ("latitude", "51.48")\nattr ("longitude", "0.0")\n\n-- other parameters\nattr ("TemperatureFormat", "C")\nattr ("PK_AccessPoint", "99000007")\nattr ("currency", "£")\nattr ("date_format", "dd/mm/yy")\nattr ("model", "Not a Vera")\nattr ("timeFormat", "24hr")\n\n-- Any other startup processing may be inserted here...\nluup.log "startup code completed"\n\n",

I modify these parameters through the openLuup console app, and these are the values:

```lua
-- You can personalise the installation by changing these attributes,
-- which are persistent and may be removed from the Startup after a reload.
local attr = luup.attr_set

-- Geographical location
attr ("City_description", "Rome")
attr ("Country_description", "Italy")
attr ("Region_description", "Lazio")
attr ("latitude", "51.48")
attr ("longitude", "0.0")

-- other parameters
attr ("TemperatureFormat", "C")
attr ("PK_AccessPoint", "99000007")
attr ("currency", "£")
attr ("date_format", "dd/mm/yy")
attr ("model", "Not a Vera")
attr ("timeFormat", "24hr")

-- Any other startup processing may be inserted here...
luup.log "startup code completed"
```

Is there any error?

tnks
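The startup code can be checked for Lua syntax errors without running openLuup, by compiling it but not executing it. A minimal sketch, assuming a standalone Lua interpreter: `loadstring` (Lua 5.1) and `load` (Lua 5.2 and later) only compile the text, so `luup` does not need to exist for this check. A clean compile says nothing about the quoting inside user_data.json, which is a separate JSON issue.

```lua
-- Compile-only syntax check: the chunk is compiled, never executed,
-- so the luup table does not need to exist.
local compile = loadstring or load   -- loadstring in Lua 5.1, load in 5.2+

local function check_syntax (code)
  local chunk, err = compile (code, "=StartupCode")
  if chunk then return true end
  return false, err
end

local startup = [[
local attr = luup.attr_set

-- Geographical location
attr ("City_description", "Rome")
attr ("currency", "£")

luup.log "startup code completed"
]]

print (check_syntax (startup))                          --> true
print (check_syntax 'attr ("City_description", "Rome"')  -- false, plus the compiler message
```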

-

openLuup log files - LuaUPnP.log and LuaUPnP_startup.log

Hi akbooer,

Sometimes openLuup restores the user_data.json file to the default, and I need to restore the configured one. I noticed these messages in LuaUPnP_startup.log:

```
2024-07-18 07:46:19.585 :: openLuup STARTUP :: /etc/cmh-ludl
2024-07-18 07:46:19.586 openLuup.init:: version 2022.11.28 @akbooer
2024-07-18 07:46:19.595 openLuup.scheduler:: version 2021.03.19 @akbooer
2024-07-18 07:46:19.723 openLuup.io:: version 2021.03.27 @akbooer
2024-07-18 07:46:19.723 openLuup.mqtt:: version 2022.12.16 @akbooer
2024-07-18 07:46:19.727 openLuup.wsapi:: version 2023.02.10 @akbooer
2024-07-18 07:46:19.727 openLuup.servlet:: version 2021.04.30 @akbooer
2024-07-18 07:46:19.727 openLuup.client:: version 2019.10.14 @akbooer
2024-07-18 07:46:19.729 openLuup.server:: version 2022.08.14 @akbooer
2024-07-18 07:46:19.737 openLuup.scenes:: version 2023.03.03 @akbooer
2024-07-18 07:46:19.750 openLuup.chdev:: version 2022.11.05 @akbooer
2024-07-18 07:46:19.750 openLuup.userdata:: version 2021.04.30 @akbooer
2024-07-18 07:46:19.751 openLuup.requests:: version 2021.02.20 @akbooer
2024-07-18 07:46:19.751 openLuup.gateway:: version 2021.05.08 @akbooer
2024-07-18 07:46:19.757 openLuup.smtp:: version 2018.04.12 @akbooer
2024-07-18 07:46:19.764 openLuup.historian:: version 2022.12.20 @akbooer
2024-07-18 07:46:19.764 openLuup.luup:: version 2023.01.06 @akbooer
2024-07-18 07:46:19.767 openLuup.pop3:: version 2018.04.23 @akbooer
2024-07-18 07:46:19.768 openLuup.compression:: version 2016.06.30 @akbooer
2024-07-18 07:46:19.768 openLuup.timers:: version 2021.05.23 @akbooer
2024-07-18 07:46:19.769 openLuup.logs:: version 2018.03.25 @akbooer
2024-07-18 07:46:19.769 openLuup.json:: version 2021.05.01 @akbooer
2024-07-18 07:46:19.774 luup.create_device:: [1] D_ZWaveNetwork.xml / / ()
2024-07-18 07:46:19.774 openLuup.chdev:: ERROR: unable to read XML file I_ZWave.xml
2024-07-18 07:46:19.800 luup.create_device:: [2] D_openLuup.xml / I_openLuup.xml / D_openLuup.json (openLuup)
2024-07-18 07:46:19.800 openLuup.init:: loading configuration user_data.json
2024-07-18 07:46:19.801 openLuup.userdata:: loading user_data json...
2024-07-18 07:46:19.805 openLuup.userdata:: JSON decode error @[8173 of 8192, line: 176] unterminated string ' = luup.attr_set\n\n <<<HERE>>> -- Geographical loca'
2024-07-18 07:46:19.805 openLuup.userdata:: ...user_data loading completed
2024-07-18 07:46:19.805 openLuup.init:: running _openLuup_STARTUP_
2024-07-18 07:46:19.805 luup_log:0: startup code completed
2024-07-18 07:46:19.806 openLuup.init:: init phase completed
2024-07-18 07:46:19.806 :: openLuup LOG ROTATION :: (runtime 0.0 days)
```

Is this caused by an error of mine in some configuration file?

tnks
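The context printed with the decode error ends at `-- Geographical loca`, and the position is 8173 of 8192, so the decoder appears to have run out of input in the middle of the StartupCode string; 8192 is exactly 2^13 bytes. One possible explanation (an assumption, not verified here) is that the file on disk was cut short, for example by an interrupted write, rather than containing wrong quoting. The sketch below is a hypothetical diagnostic, not part of openLuup: it scans the text and reports whether it ends inside a string or with unclosed braces or brackets.

```lua
-- Hypothetical diagnostic: does a JSON text end cleanly?
-- Tracks whether the scan is inside a string (honouring backslash
-- escapes) and how many { or [ are still open at the end of the input.
local function scan_json_tail (text)
  local depth, in_string, escaped = 0, false, false
  for i = 1, #text do
    local c = text:sub (i, i)
    if in_string then
      if escaped then
        escaped = false
      elseif c == "\\" then
        escaped = true
      elseif c == '"' then
        in_string = false
      end
    elseif c == '"' then
      in_string = true
    elseif c == "{" or c == "[" then
      depth = depth + 1
    elseif c == "}" or c == "]" then
      depth = depth - 1
    end
  end
  return { size = #text, in_string = in_string, open = depth }
end

-- Read a file and report on it; the path you pass is up to you,
-- e.g. check_file "user_data.json" from the openLuup directory.
local function check_file (path)
  local f = assert (io.open (path, "rb"))
  local text = f:read "*a"
  f:close ()
  local r = scan_json_tail (text)
  print (string.format ("%s: %d bytes, ends inside a string: %s, unclosed { or [: %d",
    path, r.size, tostring (r.in_string), r.open))
  return r
end
```

A complete file should report `false` and `0`; a file cut inside StartupCode would report `true` and at least `1`.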

-

Openluup: Datayours

Hi akbooer,

Excuse me for the late answer. Thanks for your precious support, as usual.

I'll test your code ASAP.

A question for my clarity: does the routine record only one value per minute in the whisper file (the average of the values within that minute)? Are the different values within a minute temporarily stored in a DY cache?

tnks

-

Openluup: Datayours

Yes, but for all the variables whose name contains "Variable" (`local target = "Variable"`).

-

Openluup: Datayours

In my installation, sensors report at least one value every 20 to 30 s (in my case, electric power), and I'd like to record the average value every minute, if possible.

Can I change the retention schemas of the existing whisper files without losing the existing data, or do I have to start from zero?

Over hourly and daily periods, the aggregation is different for the whisper files created by the L_DataUser routine:

```ini
[Power_Daily_DataWatcher]
pattern = .kwdaily
xFilesFactor = 0
aggregationMethod = sum

[Power_Hourly_DataWatcher]
pattern = .kwhourly
xFilesFactor = 0
aggregationMethod = sum

[Power_MaxHourly_DataWatcher]
pattern = .kwmaxhourly
xFilesFactor = 0
aggregationMethod = max
```

For the "Variable" whisper files, the average calculation for 5m, 10m, 1h, etc. seems correct, based on:

retentions = 1m:1d,5m:90d,10m:180d,1h:2y,1d:10y

and on the value recorded every minute.
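In Graphite's Whisper format, `aggregationMethod` and `xFilesFactor` only come into play when points from a higher-precision archive are rolled up into the next lower-precision one (for example five 1m points into one 5m point). `xFilesFactor = 0` means a rolled-up point is written as soon as at least one of the source points is known. A minimal Lua sketch of that rule for the methods used here (average, sum, max) plus min and last, assuming DataYours applies the same semantics as Graphite (not verified against the DataYours source):

```lua
-- Whisper-style rollup of one lower-precision slot (sketch, assumed semantics).
-- values: the n higher-precision points covering the slot; nil = missing.
local function rollup (values, n, method, xff)
  local known = {}
  for i = 1, n do
    if values[i] ~= nil then known[#known + 1] = values[i] end
  end
  if #known == 0 then return nil end          -- nothing known: nothing written
  if #known / n < xff then return nil end     -- too few known points for xFilesFactor
  local acc
  if method == "average" or method == "sum" then
    acc = 0
    for _, x in ipairs (known) do acc = acc + x end
    if method == "average" then acc = acc / #known end   -- mean of known points only
  elseif method == "max" or method == "min" then
    acc = known[1]
    for _, x in ipairs (known) do
      if (method == "max" and x > acc) or (method == "min" and x < acc) then acc = x end
    end
  elseif method == "last" then
    acc = known[#known]
  else
    error ("unsupported aggregationMethod: " .. tostring (method))
  end
  return acc
end

-- Five 1m readings for one 5m slot, one of them missing.
local readings = { 1.0, 2.0, nil, 4.0, 5.0 }
for _, method in ipairs { "average", "sum", "max" } do
  print (method, rollup (readings, 5, method, 0))
end
```

With `average`, the missing slot is skipped rather than counted as zero, so the example gives 12 / 4 = 3, not 12 / 5.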

-

Openluup: Datayours

Hi akbooer,

Thanks, now things are going well for me too.

You wrote the following L_DataUser.lua for me, which I'm using:

```lua
-- For each incoming metric, also emit renamed copies where "Variable"
-- is replaced by each of the names below (e.g. Variable3 -> kwdaily3).
local function run (metric, value, time)
  local target = "Variable"
  local names = {"kwdaily", "kwhourly", "kwmaxhourly"}
  local metrics = {metric}                  -- always keep the original metric
  for i, name in ipairs (names) do
    local x,n = metric: gsub (target, name)
    metrics[#metrics+1] = n>0 and x or nil  -- add only if "Variable" was found
  end
  local i = 0
  return function ()                        -- iterator: one (metric, value, time) per call
    i = i + 1
    return metrics[i], value, time
  end
end

return {run = run}
```

For every value of a "Variablex" variable, it also writes to other whisper files with different aggregation methods and schemas.
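To see what this routine produces, here is a usage sketch that repeats the same `run` logic (so it is self-contained) and calls it with a metric name of the form seen in the DataGraph requests in the log further down this page. The value and timestamp are illustrative numbers only. Each call of the returned iterator yields one (metric, value, time) triple, and the loop ends when the list of metrics is exhausted.

```lua
-- Same logic as the L_DataUser.lua run() above, repeated here.
local function run (metric, value, time)
  local target = "Variable"
  local names = {"kwdaily", "kwhourly", "kwmaxhourly"}
  local metrics = {metric}
  for _, name in ipairs (names) do
    local x, n = metric:gsub (target, name)
    metrics[#metrics + 1] = n > 0 and x or nil   -- only if "Variable" was found
  end
  local i = 0
  return function ()
    i = i + 1
    return metrics[i], value, time
  end
end

-- Illustrative call: value and time are example numbers only.
local metric = "Vera-yyyyyyyy.024.urn:upnp-org:serviceId:VContainer1.Variable3"
for m, v, t in run (metric, 46.73, 1719472677) do
  print (m, v, t)   -- ...Variable3, then ...kwdaily3, ...kwhourly3, ...kwmaxhourly3
end
```

A metric without "Variable" in its name passes through unchanged, with no extra copies.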

-

Openluup: Datayours

Hi akbooer,

I hope all is well. I have a question about DataYours and the aggregation and schemas parameters.

I have these configurations:

aggregation:

```ini
[Power_Calcolata_Kwatt]
pattern = .Variable
xFilesFactor = 0
aggregationMethod = average
```

schemas:

```ini
[Power_Calcolata_Kwatt]
pattern = .Variable
retentions = 1m:1d,5m:90d,10m:180d,1h:2y,1d:10y
```
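Each `precision:duration` pair in `retentions` defines one archive holding duration ÷ precision points; for the line above that is 1440, 25920, 25920, 17520 and 3650 points. A small Lua sketch that parses the line and checks one of Whisper's rules (each precision must be an exact multiple of the previous, finer one), assuming Graphite's single-letter units with `y` = 365 days; DataYours' own parser is not shown here and may accept more forms.

```lua
-- Seconds per unit letter (Graphite convention; y = 365 days).
local UNIT = { s = 1, m = 60, h = 3600, d = 86400, w = 604800, y = 31536000 }

local function seconds (spec)
  local num, unit = spec:match "^(%d+)(%a)$"
  assert (num and UNIT[unit], "bad retention term: " .. spec)
  return tonumber (num) * UNIT[unit]
end

-- Parse "1m:1d,5m:90d,..." into a list of archives, finest first.
local function parse_retentions (s)
  local archives = {}
  for prec, dur in s:gmatch "([^:,]+):([^,]+)" do
    local p, d = seconds (prec), seconds (dur)
    local prev = archives[#archives]
    if prev and p % prev.precision ~= 0 then
      error ("precision " .. prec .. " is not a multiple of the previous one")
    end
    archives[#archives + 1] = { precision = p, retention = d, points = math.floor (d / p) }
  end
  return archives
end

for i, a in ipairs (parse_retentions "1m:1d,5m:90d,10m:180d,1h:2y,1d:10y") do
  print (i, a.precision .. " s", a.points .. " points")
end
```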

In the cache history of the openLuup console, for example, I see these values:

2024-06-27 09:17:57 46.73

2024-06-27 09:17:25 16.55

and in the whisper file (which contains one point every minute) I see:

1719472620, 46.73 (1719472620 is 09:17 in my time zone)

It seems that the last value is used, not the average of the two values recorded at 09:17.

Is this correct?

tnks

donato
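This matches how Graphite's Whisper behaves: the finest archive holds a single value per 1m slot, so a second update inside the same minute overwrites the first, and `aggregationMethod` is only used later, when rolling up into the 5m, 10m, ... archives. Assuming DataYours follows the same rule, a per-minute mean would have to be computed before the point is written (for example in L_DataUser.lua; that placement is a suggestion, not something tested here). The sketch below takes the two readings from the cache history, converted to Unix time (09:17 CEST = 07:17 UTC), and shows both results for the 1719472620 slot; `by_slot` is a hypothetical name.

```lua
-- Group (time, value) samples into fixed slots and compare what a
-- last-write-wins store keeps with the arithmetic mean of the slot.
local function by_slot (samples, step)
  local slots = {}
  for _, s in ipairs (samples) do
    local t, v = s[1], s[2]
    local slot = t - t % step                     -- start of the slot containing t
    local b = slots[slot] or { sum = 0, n = 0 }
    b.sum, b.n, b.last = b.sum + v, b.n + 1, v    -- samples assumed in time order
    slots[slot] = b
  end
  return slots
end

local samples = {
  { 1719472645, 16.55 },   -- 2024-06-27 09:17:25 CEST
  { 1719472677, 46.73 },   -- 2024-06-27 09:17:57 CEST
}
for slot, b in pairs (by_slot (samples, 60)) do
  -- prints slot 1719472620 with last 46.73 and mean 31.64
  print (slot, "last:", b.last, string.format ("mean: %.2f", b.sum / b.n))
end
```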

-

-

Openluup: Datayours

@akbooer

Hi akbooer, on a test installation I've simulated a network outage of the remote DY and produced two sets of whisper files: one up to date (remote DY) and one to be updated (central DY), if possible with a routine similar to whisper-fill.py from the Graphite tools.

In the files I'll send you by email you'll find:

- whisper file Updated;

- whisper file To Update;

- L_DataUser.lua, used for both, which processes metrics and creates different metric names;

- Storage-aggregation.conf and Storage-schema.conf files.

I remain at your disposal for any clarification.

tnks

donato

-

Openluup: Datayours

@akbooer excuse me, how can I send you the diagram?

-

Openluup: Datayours

@akbooer

A stand-alone command-line utility is OK, possibly with an option to specify a date interval. There may be more than one file to fill for a remote DY, all with the same openLuup/Whisper ID.

At the moment I don't have an example of files to fill. I'll simulate a network outage to produce them. Meanwhile, can I send you the diagram so you can verify whether the remote and centralized openLuup/DY configurations are correct (destinations, UDP receiver ports, line receiver port)?

tnks

donato

-

Openluup: Datayours

Hi akbooer,

I have an installation with a centralized openLuup/DY on Debian 11, where the data from several remote openLuup/DY instances running on RPi are archived and consolidated. I'm also using a user-defined "DataUser.lua" (written with your support) to process metrics and create different metric names. I have a diagram of this configuration, but I can't upload it to the forum.

I'd like to handle network outages between the remote and centralized systems while the remote DY keeps running and archives data locally.

I've seen the whisper-fill.py Python routine (https://github.com/graphite-project/whisper/blob/master/bin/whisper-fill.py) from the Graphite tools. I know that the DY/whisper format differs from Graphite/whisper (CSV vs. binary packing), but based on your deep knowledge and experience, is it hard to adapt the fill routine to the DY/whisper format?

tnks

donato
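As far as I understand whisper-fill.py, its core rule is to copy a point from the source file into the destination only where the destination has no value for that timestamp, never overwriting what is already there. That rule does not depend on the on-disk format (CSV or binary packing only changes how points are read and written), so it can be sketched in Lua on in-memory tables keyed by timestamp. The function name `fill`, the table layout and the optional `from`/`to` interval are hypothetical; reading and writing DY whisper files is not shown.

```lua
-- Hypothetical in-memory gap fill in the spirit of whisper-fill.py.
-- src, dst: tables { [timestamp] = value }; dst is modified in place.
-- Only timestamps missing from dst are copied; existing dst values win.
-- from / to (optional) restrict the fill to an interval, inclusive.
local function fill (src, dst, from, to)
  local added = 0
  for t, v in pairs (src) do
    local in_range = (from == nil or t >= from) and (to == nil or t <= to)
    if in_range and dst[t] == nil then
      dst[t] = v
      added = added + 1
    end
  end
  return added
end

-- Example: the central copy missed two minutes during an outage.
local remote  = { [60] = 1.0, [120] = 2.0, [180] = 3.0, [240] = 4.0 }
local central = { [60] = 1.0, [240] = 4.5 }
print (fill (remote, central))   --> 2   (120 and 180 copied; 240 keeps 4.5)
```

A complete tool would presumably repeat this for each archive in the file, aligning timestamps to that archive's precision.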

-

Unexpected stop of openLuup

Thanks akbooer for your fix.

About the VPN: my server is on cloud hosting and the web app is accessed by authenticated users, so I suppose the only solution is to add some firewall rules on the openLuup/DataYours server.

-

Unexpected stop of openLuup

Hi akbooer,

In order to set firewall rules, can you give me some info on the openLuup log records? Below are a few normal log lines of DataYours reads/writes:

2022-08-13 12:52:21.921 luup.variable_set:: 4.urn:akbooer-com:serviceId:DataYours1.AppMemoryUsed was: 6568 now: 6871 #hooks:0

2022-08-13 12:52:26.570 openLuup.io.server:: HTTP:3480 connection from xx.xx.xx.xx tcp{client}: 0x556c474b0348

2022-08-13 12:52:26.571 openLuup.server:: GET /data_request?id=lr_render&target=Vera-yyyyyyyy.024.urn:upnp-org:serviceId:VContainer1.Variable3&from=-2h&format=json HTTP/1.1 tcp{client}: 0x556c474b0348

2022-08-13 12:52:26.571 luup_log:4: DataGraph: Whisper query: CPU = 0.405 mS for 121 points

2022-08-13 12:52:26.572 openLuup.server:: request completed (3359 bytes, 1 chunks, 1 ms) tcp{client}: 0x556c474b0348

2022-08-13 12:52:26.572 openLuup.io.server:: HTTP:3480 connection closed openLuup.server.receive closed tcp{client}: 0x556c474b0348

2022-08-13 12:52:26.574 openLuup.io.server:: HTTP:3480 connection from xx.xx.xx.xx tcp{client}: 0x556c47810548

2022-08-13 12:52:26.574 openLuup.server:: GET /data_request?id=lr_render&target=Vera-yyyyyyyy.024.urn:upnp-org:serviceId:VContainer1.Variable3&from=2022-08-13T00:00&format=json HTTP/1.1 tcp{client}: 0x556c47810548

2022-08-13 12:52:26.576 luup_log:4: DataGraph: Whisper query: CPU = 2.085 mS for 773 points

2022-08-13 12:52:26.580 openLuup.server:: request completed (20228 bytes, 2 chunks, 6 ms) tcp{client}: 0x556c47810548

2022-08-13 12:52:26.581 openLuup.io.server:: HTTP:3480 connection closed openLuup.server.receive closed tcp{client}: 0x556c47810548

2022-08-13 12:52:35.363 openLuup.io.server:: HTTP:3480 connection from xx.xx.xx.xx tcp{client}: 0x556c4756a4b8

2022-08-13 12:52:35.364 openLuup.server:: GET /data_request?id=lr_render&target=Vera-yyyyyyyy.024.urn:upnp-org:serviceId:VContainer1.Variable3&from=-2h&format=json HTTP/1.1 tcp{client}: 0x556c4756a4b8

2022-08-13 12:52:35.364 luup_log:4: DataGraph: Whisper query: CPU = 0.418 mS for 121 points

2022-08-13 12:52:35.366 openLuup.server:: request completed (3359 bytes, 1 chunks, 1 ms) tcp{client}: 0x556c4756a4b8

2022-08-13 12:52:35.366 openLuup.io.server:: HTTP:3480 connection closed openLuup.server.receive closed tcp{client}: 0x556c4756a4b8

2022-08-13 12:52:35.368 openLuup.io.server:: HTTP:3480 connection from xx.xx.xx.xx tcp{client}: 0x556c47294958

2022-08-13 12:52:35.368 openLuup.server:: GET /data_request?id=lr_render&target=Vera-yyyyyyyy.024.urn:upnp-org:serviceId:VContainer1.kwdaily3&from=2016-07-01&format=json HTTP/1.1 tcp{client}: 0x556c47294958

2022-08-13 12:52:35.375 luup_log:4: DataGraph: Whisper query: CPU = 6.521 mS for 2235 points

2022-08-13 12:52:35.392 openLuup.server:: request completed (62445 bytes, 4 chunks, 23 ms) tcp{client}: 0x556c47294958

2022-08-13 12:52:35.397 openLuup.io.server:: HTTP:3480 connection closed openLuup.server.receive closed tcp{client}: 0x556c47294958

2022-08-13 12:52:35.398 openLuup.io.server:: HTTP:3480 connection from xx.xx.xx.xx tcp{client}: 0x556c47158248

2022-08-13 12:52:35.398 openLuup.server:: GET /data_request?id=lr_render&target=Vera-yyyyyyyy.024.urn:upnp-org:serviceId:VContainer1.Variable3&from=2022-08-13T00:00&format=json HTTP/1.1 tcp{client}: 0x556c47158248

2022-08-13 12:52:35.401 luup_log:4: DataGraph: Whisper query: CPU = 2.409 mS for 773 points

2022-08-13 12:52:35.406 openLuup.server:: request completed (20228 bytes, 2 chunks, 7 ms) tcp{client}: 0x556c47158248

2022-08-13 12:52:35.406 openLuup.io.server:: HTTP:3480 connection closed openLuup.server.receive closed tcp{client}: 0x556c47158248

2022-08-13 12:53:26.581 openLuup.io.server:: HTTP:3480 connection from xx.xx.xx.xx tcp{client}: 0x556c4877f9d8

2022-08-13 12:53:26.581 openLuup.server:: GET /data_request?id=lr_render&target=Vera-yyyyyyyy.024.urn:upnp-org:serviceId:VContainer1.Variable3&from=-2h&format=json HTTP/1.1 tcp{client}: 0x556c4877f9d8

2022-08-13 12:53:26.582 luup_log:4: DataGraph: Whisper query: CPU = 0.735 mS for 121 points

The read commands for the whisper files are all of the form "http://server-ip:3480.......".

Is the following log record:

openLuup.io.server:: HTTP:3480 connection from xx.xx.xx.xx tcp{client}

from an HTTP read? Can I see a UDP write log record?

The write commands come from the remote DataYours instances via UDP to "server-ip".

All consolidated whisper files I read/write are on "server-ip".

In this scenario, is it correct that all regular, normal commands (read/write) must come from "server-ip"?

tnks
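For building a firewall allow-list, one option is to collect the source addresses of the `HTTP:3480 connection from ... tcp{client}` records, which mark incoming HTTP connections to port 3480 in the excerpt above. A small Lua sketch under that assumption; the log file name is only an example, and UDP traffic from the remote DataYours instances is not covered by these HTTP records, so its sources have to be taken from your own configuration.

```lua
-- Count incoming HTTP:3480 connections per source address in openLuup log lines.
local function http_sources (lines)
  local counts = {}
  for _, line in ipairs (lines) do
    local ip = line:match "HTTP:3480 connection from (%S+) tcp"
    if ip then counts[ip] = (counts[ip] or 0) + 1 end
  end
  return counts
end

-- Read a log file into a list of lines.
local function read_lines (path)
  local t = {}
  for l in io.lines (path) do t[#t + 1] = l end
  return t
end

-- Usage (path is an example):
-- for ip, n in pairs (http_sources (read_lines "LuaUPnP.log")) do print (ip, n) end
```

"connection closed" records do not match the pattern, so each connection is counted once.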

-

Unexpected stop of openLuup

Hi akbooer,

openLuup hangs randomly, but today I noticed something strange in the log (attached):

2022-08-13 12:54:35.283 openLuup.server:: GET /data_request?id=lr_render&target=Vera-45108342.024.urn:upnp-org:serviceId:VContainer1.Variable3&from=2022-08-13T00:00&format=json HTTP/1.1 tcp{client}: 0x556c471741f8

2022-08-13 12:54:35.286 luup_log:4: DataGraph: Whisper query: CPU = 2.068 mS for 775 points

2022-08-13 12:54:35.289 openLuup.server:: request completed (20282 bytes, 2 chunks, 6 ms) tcp{client}: 0x556c471741f8

2022-08-13 12:54:35.290 openLuup.io.server:: HTTP:3480 connection closed openLuup.server.receive closed tcp{client}: 0x556c471741f8

2022-08-13 12:54:57.889 openLuup.io.server:: HTTP:3480 connection from 92.255.85.183 tcp{client}: 0x556c482cf138

2022-08-13 12:54:57.889 openLuup.server:: /*: mstshash=Administr tcp{client}: 0x556c482cf138

2022-08-13 12:54:57.889 openLuup.context_switch:: ERROR: [dev #0] ./openLuup/server.lua:238: attempt to concatenate local 'method' (a nil value)

2022-08-13 12:54:57.889 luup.incoming_callback:: function: 0x556c4753ff20 ERROR: ./openLuup/server.lua:238: attempt to concatenate local 'method' (a nil value)

At 12:54 openLuup stopped writing and reading the DataYours files.

Is this a possible attack?
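The line `/*: mstshash=Administr` is not an HTTP request. `Cookie: mstshash=...` is the cookie a Remote Desktop (RDP) client sends when it opens a connection, so this looks like an automated scanner probing port 3480 for RDP rather than a DataYours client. The error shows that no `method` could be extracted from that line before it was concatenated. As an illustration only (a hypothetical function, not openLuup's `server.lua`), a request-line parser can return nil for anything that does not look like `METHOD target HTTP/x.y`, so the caller can simply close the connection:

```lua
-- Hypothetical defensive parse of an HTTP request line.
-- Returns method, target, version, or nil plus a reason for anything else.
local function parse_request_line (line)
  if type (line) ~= "string" then return nil, "no request line" end
  local method, target, version = line:match "^(%u+) (%S+) HTTP/(%d%.%d)\r?$"
  if not method then
    return nil, "not an HTTP request line"
  end
  return method, target, version
end

print (parse_request_line "GET /data_request?id=lr_render&format=json HTTP/1.1")
print (parse_request_line "/*: mstshash=Administr")   -- nil and the reason
```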

-

Help with Z-Way plugin

Hi akbooer,

I've defined a Virtual Device (a virtual binary switch) in the Z-Way controller, but I can't see it in openLuup with the Z-Way plugin installed, while I can see a real switch.

Is this expected? Do Z-Way virtual devices use a different API that is not implemented in openLuup?

tnks

donato