Openluup: Datayours

-

Hi akbooer,

I have an installation with a central openLuup/DY on Debian 11, which archives and consolidates data from several remote openLuup/DY instances running on RPI. I also use a user-defined "DataUser.lua" (written with your support) to process metrics and create different metric names. I have a diagram of this configuration, but I can't upload it to the forum.

I'd like to handle network outages between the remote and central systems, during which the remote DY keeps running and archiving data locally.

I've seen the whisper-fill.py Python routine (https://github.com/graphite-project/whisper/blob/master/bin/whisper-fill.py) from the Graphite tool. I know that the DY/whisper format differs from Graphite/whisper (CSV vs. binary packing), but based on your deep knowledge and experience, would it be hard to adapt the fill routine to the DY/whisper format?

tnks

donato

-

This should be straightforward enough. The Whisper library in openLuup has all the needed functionality, I believe. You’d want to run this as a stand-alone command line utility?

Do you have some example of the files you want to merge?

@akbooer

A stand-alone command-line utility is fine, possibly with an option to specify a date interval. There may be more than one file to fill for a remote DY, all with the same openLuup/Whisper ID.

At the moment I don't have example files to fill. I'll simulate a network outage to produce them. Meanwhile, can I send you the diagram so you can verify that the remote and central openLuup/DY configurations are correct (destinations, UDP receiver ports, line receiver port)?
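To make the request concrete, here is a minimal sketch of the gap-filling idea behind whisper-fill.py, with an optional date interval. It is only an illustration: the `fill_gaps` helper is hypothetical, it does not use the openLuup Whisper library, and each archive is assumed to be already loaded into a plain Lua table mapping slot timestamp to value.

```lua
-- Hypothetical helper, not part of openLuup or Graphite.
-- Copies points from `src` into `dst` only where `dst` has no value,
-- optionally restricted to the interval [from, to] (epoch seconds).
-- Assumption: both archives are plain tables {timestamp = value}.
local function fill_gaps (dst, src, from, to)
  from = from or -math.huge
  to   = to   or  math.huge
  local filled = 0
  for t, v in pairs (src) do
    if t >= from and t <= to and dst[t] == nil then
      dst[t] = v                -- existing points in dst are never overwritten
      filled = filled + 1
    end
  end
  return filled
end

-- Example: the central archive missed two one-minute slots during an outage.
local central = {[1719472560] = 12.0, [1719472740] = 15.0}
local remote  = {[1719472560] = 12.0, [1719472620] = 46.73,
                 [1719472680] = 20.1, [1719472740] = 15.0}

local n = fill_gaps (central, remote)
print (n)                       -- number of slots copied from remote
```

Reading and writing the actual DY whisper files would still have to go through the Whisper library in openLuup; that part is deliberately left out here.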

tnks

donato

-

@akbooer

Hi akbooer, I've simulated a network outage of the remote DY on a test installation and produced two sets of whisper files: one up to date (remote DY) and one to be updated (central DY), if possible with a routine similar to whisper-fill.py from the Graphite tool.

In the files I'll send you by email you will find:

- whisper file Updated;

- whisper file To Update;

- the L_DataUser.lua used for both, which processes metrics and creates different metric names;

- the Storage-aggregation.conf and Storage-schema.conf files.

I remain at your disposal for any clarification.

tnks

donato

-

Hi akbooer,

I hope all is well. I have a question about DataYours and the aggregation and schema parameters.

I have these configurations.

Aggregation:

    [Power_Calcolata_Kwatt]
    pattern = .Variable
    xFilesFactor = 0
    aggregationMethod = average

Schemas:

    [Power_Calcolata_Kwatt]
    pattern = .Variable
    retentions = 1m:1d,5m:90d,10m:180d,1h:2y,1d:10y

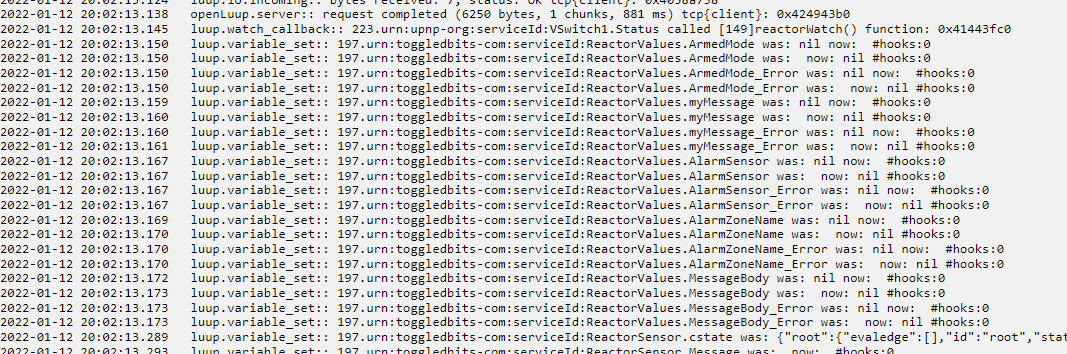

In the cache history of the openLuup console I see, for example, these values:

    2024-06-27 09:17:57 46.73
    2024-06-27 09:17:25 16.55

and in the whisper file (which contains one point per minute) I see:

    1719472620, 46.73

(1719472620 is 09:17 in my time zone.)

It seems that the last value is stored, not the average of the two values recorded at 09:17.

Is that correct?

tnks

donato

-

Hi Donato

Hope that things are going well for you.

Yes, this is expected behaviour. Your shortest aggregation interval is one minute, so any updates faster than that only retain the latest value.

There are two possible options:

- Use a schema with an even shorter initial interval, for example 10s.

- Write a custom aggregator which collects and averages values before writing to the variable.

I think I gave you the means to do option 2 a while ago, by allowing user-defined actions… but it’s so long ago, I’ve forgotten what we called that! The newest versions of openLuup actually allow you to add a user-defined function to any variable to modify the value before it is written, so you could also do it that way.
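The "latest value wins" behaviour can be pictured with a small model. This is a simplified sketch, not the actual openLuup Whisper code: it assumes an archive holds one value per fixed-length slot and that an update simply overwrites whatever is in its slot. The timestamps are the two readings quoted earlier in the thread.

```lua
-- Simplified model (an assumption, not openLuup's implementation):
-- an archive with a fixed step keeps exactly one value per slot.
local step = 60                        -- seconds per slot, as in "1m:1d"
local archive = {}

local function update (time, value)
  local slot = time - time % step      -- round down to the start of the slot
  archive[slot] = value                -- a later update replaces an earlier one
  return slot
end

local a = update (1719472645, 16.55)   -- the 09:17:25 reading
local b = update (1719472677, 46.73)   -- the 09:17:57 reading

-- both readings land in the same slot, which now holds only the second one
print (a == b, archive[a])
```

With a 10s first retention the two readings would fall into different slots, which is why option 1 avoids the overwrite.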

-

Hi akbooer,

Thanks, things are going well for me too.

You wrote the following L_DataUser.lua for me, which I'm using:

    local function run (metric, value, time)
      local target = "Variable"
      local names = {"kwdaily", "kwhourly", "kwmaxhourly"}
      local metrics = {metric}
      for i, name in ipairs (names) do
        local x,n = metric: gsub (target, name)
        metrics[#metrics+1] = n>0 and x or nil
      end
      local i = 0
      return function ()
        i = i + 1
        return metrics[i], value, time
      end
    end

    return {run = run}

For every value of a "Variablex" variable, it also writes to other whisper files with different aggregation and schema settings.

-

Ah yes, that's how we did it!

So, the question is how do you want to proceed? Option #1 is by far the easiest, unless other considerations preclude this. It would allow configuration on a per-file basis.

What is it that concerns you, that you're really trying to avoid? Are variations within a one-minute interval too much for you? Or is the issue that for the longer durations (one hour, one day) you are not averaging over those correctly?

-

In my installation the sensors report a value at least every 20-30 s (in my case, electric power), and I'd like to record the average value every minute, if possible.

Can I change the retention schemas of the existing whisper files without losing the current data, or do I have to start from scratch?

Over hourly and daily periods, the aggregation is different for the whisper files created by the L_DataUser routine:

    [Power_Daily_DataWatcher]
    pattern = .kwdaily
    xFilesFactor = 0
    aggregationMethod = sum

    [Power_Hourly_DataWatcher]
    pattern = .kwhourly
    xFilesFactor = 0
    aggregationMethod = sum

    [Power_MaxHourly_DataWatcher]
    pattern = .kwmaxhourly
    xFilesFactor = 0
    aggregationMethod = max

For the "Variable" whisper files, the average calculation for 5m, 10m, 1h, etc. seems correct, based on:

    retentions = 1m:1d,5m:90d,10m:180d,1h:2y,1d:10y

and the value recorded every minute.

-

I think this will do what you need:

    local target = "Variable"
    local names = {"kwdaily", "kwhourly", "kwmaxhourly"}

    local avg_time = 60     -- average over 60 seconds
    local caches = {}

    local function average (metric, value, time)
      local stale = time - avg_time
      local cache = caches[metric] or {}    -- find the right cache
      caches[metric] = cache
      cache[time] = value                   -- update latest value
      local n,sum = 0,0
      for t,v in pairs(cache) do
        if t <= stale then                  -- clear stale values from the cache
          cache[t] = nil
        else                                -- add to total
          n = n + 1
          sum = sum + v
        end
      end
      return sum / n
    end

    local function run (metric, value, time)
      local metrics = {metric}
      if metric: match(target) then
        value = average(metric, value, time)
        for i, name in ipairs (names) do
          local x,n = metric: gsub (target, name)
          metrics[#metrics+1] = n>0 and x or nil
        end
      end
      local i = 0
      return function ()
        i = i + 1
        return metrics[i], value, time
      end
    end

    return {run = run}

-

Hi akbooer,

Sorry for the late answer. Thanks for your valuable support, as usual.

I'll test your code asap.

A question, for clarity: does the routine record only one value per minute in the whisper file (the average of the values in that minute)? Are the individual values within a minute held temporarily in a DY cache?

tnks

-

No, a new 60 second average is calculated, and emitted, on every new measurement.

However, there is still an issue that the averaging window for the internal cache may not be aligned with the one that DataYours is using.

Also, how accurately is the acquisition machine timestamp synchronised with the one doing the averaging and storage?

It’s actually not a trivial problem for these reasons, but there are different options, so do try it, investigate the results, and report back!

Good luck,

AK
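One possible way to approach the alignment issue raised in this last reply is to average per slot rather than over a sliding 60 seconds. The following is only a sketch under stated assumptions: slots are 60 s long and start on epoch-minute boundaries (matching a "1m" first retention), measurements arrive in time order, and the `aligned_average` function and the metric name are hypothetical, not part of openLuup or DataYours.

```lua
-- Sketch of a slot-aligned running average (assumptions as stated above).
local step = 60
local buckets = {}                     -- per-metric running totals

local function aligned_average (metric, value, time)
  local slot = time - time % step      -- start of the minute containing `time`
  local b = buckets[metric]
  if not b or b.slot ~= slot then      -- first sample of a new minute
    b = {slot = slot, n = 0, sum = 0}
    buckets[metric] = b
  end
  b.n, b.sum = b.n + 1, b.sum + value
  return b.sum / b.n, slot             -- running mean for this slot
end

local m = "Variable"                   -- hypothetical metric name
local v1 = aligned_average (m, 16.55, 1719472645)   -- first sample in slot
local v2 = aligned_average (m, 46.73, 1719472677)   -- mean of both samples
local v3 = aligned_average (m, 10.00, 1719472685)   -- next minute, fresh start
print (v1, v2, v3)
```

Because every emitted value is the mean of all samples seen so far in the current minute, the last write into a one-minute slot would carry the mean for that whole minute. Clock differences between the acquisition machine and the storage machine would still shift samples across slot boundaries, so this does not remove the synchronisation concern.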