Using luup.call_delay() in the browser code test boxes

-

Why doesn't luup.call_delay work in the browser test boxes for Vera & openLuup?

E.g., this doesn't work:

```lua
local m_TestCount = 0

-- Must be global: it is a delay timeout target
function delayTest()
    print('m_TestCount = '..m_TestCount)
    m_TestCount = m_TestCount+1
    if (m_TestCount > 10) then return end

    print(pretty(delayTest))
    print(pretty(_G.delayTest))

    local m_PollInterval = 1 -- seconds
    local result = luup.call_delay('delayTest', m_PollInterval)
    print(pretty(result))
end

delayTest()
return true
```

-

Ah yes.

Well, it may, in fact, be running...

Since the routine which runs the code has exited before the delayed callback is run, there’s no way that its output can appear on the screen.

If you did an action which did not require output (e.g. set a device variable), then you might find that it works.
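The explanation above can be illustrated with a toy model. This is a hypothetical sketch, NOT how Vera or openLuup actually implement their test windows: print() output is captured only while the submitted code runs, the captured text is returned for display, and the delayed callback fires afterwards. The names `fake_call_delay`, `run_test_box` and `run_pending` are invented for illustration. The callback's print goes into a buffer nobody reads any more, while its side effect (standing in for "set a device variable") still happens.

```lua
-- Toy model only (hypothetical): NOT the real Vera/openLuup test window.
local queue = {}          -- callbacks queued by the fake call_delay

-- Hypothetical stand-in for luup.call_delay: remember the callback for later.
local function fake_call_delay(callback)
  queue[#queue + 1] = callback
end

-- Run the submitted code with print() redirected into a buffer and return
-- the captured text, i.e. what the browser would display.
local function run_test_box(submitted)
  local buffer = {}
  local function captured_print(...)
    local parts = {}
    for i = 1, select("#", ...) do
      parts[i] = tostring((select(i, ...)))
    end
    buffer[#buffer + 1] = table.concat(parts, "\t")
  end
  submitted(captured_print, fake_call_delay)
  return table.concat(buffer, "\n")   -- the page is rendered at this point
end

-- Later, the "scheduler" fires whatever was queued.
local function run_pending()
  local due = queue
  queue = {}
  for i = 1, #due do due[i]() end
end

-- One print before the delay, one inside the delayed callback,
-- plus a side effect that survives.
local device_var = 0
local shown = run_test_box(function(print, call_delay)
  print("before delay")
  call_delay(function()
    print("from callback")   -- appended to a buffer that is never shown
    device_var = 1           -- this side effect still takes place
  end)
end)
run_pending()
```

Here `shown` holds only "before delay", yet `device_var` ends up as 1 once the queued callback has run.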

-

...it’s also possible that it’s sandboxed such that the system can’t find the named global callback function.

I did test this once, but have forgotten the details of how Vera behaved!

@akbooer said in Using luup.call_delay() in the browser code test boxes:

but have forgotten the details of how Vera behaved!

Just to remind you: badly.

OK, I'm not surprised I'm going around in circles; I should know better by now. I modified the example code to write to the log instead of the UI. On Vera, using either Vera's UI or AltUI, the log shows:

```
LuaInterface::CallFunction_Timer-5 function delayTest failed [string "local altui_device = 5..."]:10: bad argument #1 to 'insert' (table expected, got string)
```

Using AltUI with openLuup, I get something similar, where 0x27151d0 is the called function (as printed by the test code):

```
luup.delay_callback:: function: 0x27151d0 ERROR: [string "RunLua"]:10: bad argument #1 to 'insert' (table expected, got string)
```

OK, so then I tested it in one of openLuup's three console test windows (I came across these just recently) and, as expected with your code, it all works! I think I assumed it had all failed because I never saw the test code printed in the log. Your console test windows just show this, rather than listing the code:

```
openLuup.server:: POST /data_request?id=XMLHttpRequest&action=submit_lua&codename=LuaTestCode2 HTTP/1.1 tcp{client}: 0x33b3328
```

So it can be made to work. It looks like AltUI and the Vera UI need to pass the function reference, not the function name string? Or whatever is needed.

Out of interest, I have noted that luup.call_delay can use sub-second delays in openLuup, as previously mentioned on the Vera forum. I'm unsure whether Vera can.

I did some tests on a Raspberry Pi and found that, occasionally, a one-second delay could be well over one second (I have seen two seconds). There could be any number of reasons for this: just measuring the time affects the timing (I used socket.gettime()), and with different things happening at the same time, it can get weird. I do have a plugin that runs code every minute and receives/handles a lot of bytes, one by one, via the infamous Vera "incoming" feature. I should probably rewrite that plugin one day, if I can remember how it all worked.
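Since measuring the time inside the callback can itself perturb the timing, one option is to have each callback do nothing but record a timestamp, and do the arithmetic afterwards. A minimal sketch of that arithmetic, assuming timestamps in milliseconds (in openLuup they could come from socket.gettime()*1000); the function name `delay_overshoot` is hypothetical, and the input numbers are made up for illustration, not measurements.

```lua
-- Given callback timestamps in milliseconds, return how late each callback
-- fired relative to the requested interval, plus the worst case.
local function delay_overshoot(timestamps_ms, interval_s)
  local interval_ms = interval_s * 1000
  local deltas, max_delta = {}, nil
  for i = 2, #timestamps_ms do
    -- actual gap between consecutive callbacks minus the requested gap
    local delta = timestamps_ms[i] - timestamps_ms[i - 1] - interval_ms
    deltas[#deltas + 1] = delta
    if max_delta == nil or delta > max_delta then
      max_delta = delta
    end
  end
  return deltas, max_delta   -- max_delta is nil if fewer than 2 timestamps
end

-- Illustrative (invented) timestamps for a 1 s interval.
local deltas, max_delta = delay_overshoot({0, 1003, 2010, 4010}, 1)
```

With these inputs the deltas are 3, 7 and 1000 ms, so the single stalled callback dominates `max_delta`.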

There may even be an argument for reducing every requested delay by some fudge factor representing the delay-processing time, say 20 milliseconds, but that's getting too pedantic.
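For what it's worth, the fudge-factor idea is only a few lines. A hypothetical sketch: `compensated_delay` and the 20 ms default are illustrative assumptions, not measured overheads, and the result is a sub-second value, which the thread says openLuup accepts but which may not hold on Vera.

```lua
-- Hypothetical helper: shorten a requested delay by a fixed allowance for
-- scheduling overhead (the "fudge factor"), never going below zero.
local DEFAULT_FUDGE_S = 0.020   -- assumed 20 ms, not a measured figure

local function compensated_delay(requested_s, fudge_s)
  local d = requested_s - (fudge_s or DEFAULT_FUDGE_S)
  if d < 0 then d = 0 end
  return d
end
```

In openLuup this would be used as `luup.call_delay('delayTest', compensated_delay(1))`. A fixed allowance only trims the average overshoot; it cannot do anything about the occasional multi-second stall.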

-

Ah yes, once again.

The Lua Test windows in openLuup (I never found just the one to be sufficient) are implemented quite separately from AltUI, and you don’t see the code because it is sent as a POST, not a GET.

Incidentally, you do recall that `pretty()` is available to you in all Lua Test windows to examine tables? As in:

```lua
print(pretty(luup.devices[2]))
```

It works in AltUI too (it’s the same code).

-

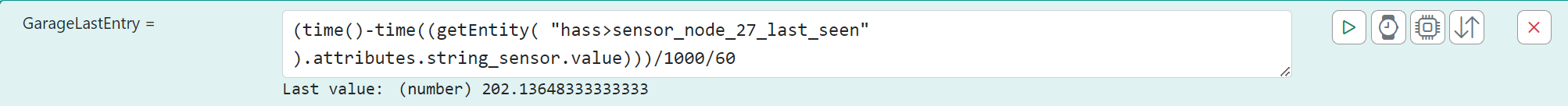

For those interested, I modified the code above to show the difference between the one-second delay requested and the delay that actually occurs. As noted above, this code only works in the openLuup console test windows. "Max delta" is the figure of interest: it captures the largest mismatch between the requested and the actual delay over a two-minute test period (120 tests). I experience fairly long mismatches from time to time. I would be interested if others could run the code and see whether they have the same experience.

```lua
local socket = require('socket')

local m_pollTime  = 1   -- seconds
local m_loopCount = 120 -- two minutes of testing
local m_testCount = 0
local m_runTime   = 0
local m_deltaTime = 0
local m_maxTime   = 0
local m_startTime = socket.gettime()*1000
local m_lastNow   = m_startTime - (m_pollTime*1000)

-- must be global: function is a delay timeout target
function delayTest()
    local now = socket.gettime()*1000
    m_runTime   = now - m_startTime
    m_deltaTime = now - m_lastNow - (m_pollTime*1000)
    m_lastNow   = now
    if (m_deltaTime > m_maxTime) then m_maxTime = m_deltaTime end

    luup.log(string.format("HELLO TESTING: m_testCount: %i, time: %.0f, delta: %.0f, max delta: %.0f",
        m_testCount, m_runTime, m_deltaTime, m_maxTime), 50)

    m_testCount = m_testCount+1
    if (m_testCount > m_loopCount) then return end

    luup.call_delay('delayTest', m_pollTime)
end

print('Starting test')
print(delayTest)
delayTest()
print('Finishing test')
return true
```

-

@akbooer said in Using luup.call_delay() in the browser code test boxes:

it is sent as a POST, not a GET

I regularly run code of over a thousand lines in the Vera UI and AltUI test windows, and it generally works OK. But using a GET and effectively stuffing all that into a URL is a bit scary.
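To see why putting code into a URL is unwieldy: a GET has to percent-encode it, and Lua source is full of characters (spaces, quotes, brackets, newlines) that each expand to three bytes. A minimal sketch assuming a plain RFC 3986 style encoder; `url_encode` is a hypothetical helper, and exactly how the Vera UI or AltUI encode their requests is not stated in this thread.

```lua
-- Percent-encode a string for a URL query parameter: RFC 3986 "unreserved"
-- characters (letters, digits, - . _ ~) pass through, all others become %XX.
local function url_encode(s)
  return (s:gsub("[^%w%-%._~]", function(c)
    return string.format("%%%02X", string.byte(c))
  end))
end

local code = 'print("hello world")'
local encoded = url_encode(code)
-- encoded is 'print%28%22hello%20world%22%29': 30 characters instead of 20
```

A POST sends the code in the request body instead, so it avoids both the expansion in the URL and any URL length limits along the way.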

I have found that the Vera UI test window occasionally fails; it seems to happen when the code contains tables declared with lots of entries, lookup tables for example. I have never bothered to chase it down; I just switch to AltUI on openLuup.

I presume that we could ask amg0 to modify his code to use a POST for the test window in AltUI, when working with openLuup?

And yes, I use pretty(); very handy.

-

This variation in delays is to be expected, since the system IS doing other things. The biggest culprit here is AltUI, since its inevitable outstanding lazy-poll request will get answered when variables are changed. It would be very interesting to rerun this without AltUI running in a browser window (although maybe you did that?)

Secondly, there’s absolutely no need to load that code over HTTP. In code development, there’s no way you’re actually making changes across that whole codebase. Simply move the static parts to a file and use require() in the part that you are changing in the interactive window. (Be aware, though, that if you do change the required file’s contents, it will not be reloaded unless you explicitly clear it from the package.loaded cache.)
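A minimal sketch of that reload step: setting `package.loaded[name]` to nil makes the next require() run the module's loader again. The helper `reload` and the module name `static_parts` are hypothetical; to keep the sketch self-contained the module is supplied through `package.preload`, whereas in practice it would be a file such as static_parts.lua on the Lua search path.

```lua
-- Hypothetical helper: force require() to load a module afresh by first
-- dropping its cached copy from package.loaded.
local function reload(name)
  package.loaded[name] = nil
  return require(name)
end

-- Stand-in module (in real use this would be a file on package.path).
local load_count = 0
package.preload["static_parts"] = function()
  load_count = load_count + 1
  return { version = load_count }
end

local first  = require("static_parts")   -- runs the loader
local second = require("static_parts")   -- served from package.loaded
local fresh  = reload("static_parts")    -- loader runs again
```

The second require() returns the cached table, so edits to the file would be invisible until `reload` (or an equivalent `package.loaded[...] = nil`) is called.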

-

@akbooer said in Using luup.call_delay() in the browser code test boxes:

This variation in delays is to be expected, since the system IS doing other things.

Yep, I appreciate that. I did a few tests and found a plugin that was being accessed very regularly and was doing this in a for loop of varying length, with the resulting JSON then being processed by openLuup:

```lua
lul_json = lul_json .. '{"Id":'..v.Id..',"LastRec":'..v.LastRec..',"LastVal":"'..v.LastVal..'"}'
```

and changed it to this, followed by a table.concat later:

```lua
table.insert(lul_json, '{"Id":')
table.insert(lul_json, v.Id)
table.insert(lul_json, ',"LastRec":')
table.insert(lul_json, v.LastRec)
table.insert(lul_json, ',"LastVal":"')
table.insert(lul_json, v.LastVal)
table.insert(lul_json, '"}')
```

That made a substantial difference since, as we know, building a string with repeated .. concatenation is very slow, especially when each pass appends to the ever-growing string. It may be even faster not to use table.insert but a straight indexed assignment:

```lua
lul_json[count] = xyz
count = count+1
```

So the luup.call_delay() test routine above can be helpful in finding errant plugins, etc.
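The two string-building patterns can be compared side by side in plain Lua. A minimal sketch with made-up records (the field names follow the plugin snippet; the values and the function names are invented): both builders produce the same string, but the first re-copies the growing result on every pass, while the second stores pieces with plain indexed assignment and joins them once with table.concat.

```lua
-- Hypothetical records shaped like the plugin data (Id, LastRec, LastVal).
local records = {
  { Id = 1, LastRec = 1600000000, LastVal = "21.5" },
  { Id = 2, LastRec = 1600000060, LastVal = "on" },
}

-- Slow pattern: every pass copies the whole, ever-growing string.
local function build_by_concat(recs)
  local s = ""
  for _, v in ipairs(recs) do
    s = s .. '{"Id":' .. v.Id .. ',"LastRec":' .. v.LastRec
          .. ',"LastVal":"' .. v.LastVal .. '"}'
  end
  return s
end

-- Faster pattern: straight indexed assignment into a buffer table,
-- then a single table.concat at the end.
local function build_by_buffer(recs)
  local buf, n = {}, 0
  for _, v in ipairs(recs) do
    buf[n + 1] = '{"Id":';       buf[n + 2] = v.Id
    buf[n + 3] = ',"LastRec":';  buf[n + 4] = v.LastRec
    buf[n + 5] = ',"LastVal":"'; buf[n + 6] = v.LastVal
    buf[n + 7] = '"}'
    n = n + 7
  end
  return table.concat(buf)   -- table.concat accepts strings and numbers
end

local a = build_by_concat(records)
local b = build_by_buffer(records)
```

Timing both with os.clock() over a few thousand records should show the gap on your own hardware; the output strings are identical.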

Roger on the test window and using require: I have my thousand lines in the test window and another 500 lines coming in via a require, and it all works fine!!