Video Doorbells

-

Rafale, how did you manage to get "instant" notification and talk back via your phone with that Hikvision? And secondly, how did you manage to use the original chime? I keep relying on an 8 or 12V physical doorbell next to the smart part.

Thank you for bringing this up... The main discussion around this doorbell on IPCamTalk is about power and the old chime. When I switched to Skybell, I had moved from an electronic chime to a more traditional mechanical chime, so I never faced any problem. It appears that electronic chimes are less standardized and various models can cause problems.

In the US, doorbells are powered by low-voltage AC (similar to yard lighting) with a lot of tolerance on voltage. This doorbell takes both AC and DC... 12 to 24V... but the supply must work with the chime too. In my case I had to upgrade my power supply to a 30W one. These tiny wires lose a lot of power over long runs, so I went to 20V AC. You get more power loss with DC than AC. You also get more power loss with lower voltages, since the same power then needs more current. (Joule's law)
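As a rough illustration of the voltage point, here is a small sketch of Joule's law for a doorbell wire run. The 10 W load and the 2 ohm round-trip wire resistance are made-up values for illustration, not measurements from this install, and the current is approximated as load power divided by supply voltage.

```python
# Rough Joule's-law estimate of the power lost in doorbell wiring.
# All numbers below are illustrative assumptions, not measured values.


def wire_loss_watts(load_watts: float, supply_volts: float, wire_ohms: float) -> float:
    """Approximate resistive loss in the wire run: P_loss = I^2 * R.

    The current is approximated as I = P_load / V_supply, which ignores
    the voltage drop along the wire itself.
    """
    current_amps = load_watts / supply_volts
    return current_amps ** 2 * wire_ohms


LOAD_WATTS = 10.0  # assumed doorbell + chime draw
WIRE_OHMS = 2.0    # assumed round-trip resistance of a long, thin run

for volts in (12.0, 16.0, 20.0, 24.0):
    loss = wire_loss_watts(LOAD_WATTS, volts, WIRE_OHMS)
    print(f"{volts:4.0f} V supply: about {loss:.2f} W lost in the wire")
```

With these assumed values, going from 12V to 20V cuts the estimated wire loss by a factor of (20/12)^2, roughly 2.8.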

As for your first question, you can install this in your chime:

For less than $5, you get a Zigbee doorbell notification. I don't use the talk-back function, only the snapshot. If I need the intercom functionality, I can enable the cloud function, which is free. I keep mine enabled, but I have redundancy... so I am not cloud dependent.

For information, this is the thread I have been mentioning, with the 101 on this unit, which summarizes almost everything:

https://ipcamtalk.com/threads/new-rca-hsdb2a-3mp-doorbell-ip-camera.31601/page-101

-

Hmmm ... that way it is not really a doorbell but a snapshotter :-). I have had that for years... haha...

A Hikvision cam connected to Synology Surveillance Station and, in parallel, to a Fibaro Universal Sensor. Before that, the cam was in Vera (directly) and the VeraAlerts app sent me a snapshot via mail when motion was detected.

Recently I moved to Home Assistant, integrated the Vera devices there, and added Node-RED and Telegram. Now I receive an INSTANT notification when the doorbell is pressed, plus an instant snapshot from 2 cameras, the door cam and the front yard cam. The only thing I would still really like (for after the corona period) is to talk back and have an instant video and audio stream...

-

My door is covered by a hidden microwave sensor. When triggered, Reactor sends an HTTP request to my phone or TV box, depending on whether I am home or away. My phone/box uses Automate to receive the HTTP request; Automate then opens the camera app and displays the camera. I can talk and listen through the camera app. It normally takes 5-10 seconds to complete. If I am home, Vera sends a TTS message to Alexa, informing me there is someone at the door.
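The home/away routing described here can be sketched roughly as follows. This is only an illustration in Python, not the actual Reactor or Automate configuration: the host names, port, `/doorbell` path and `camera` parameter are all hypothetical placeholders.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Hypothetical endpoints: whatever address the phone or TV box listens on.
TARGETS = {
    "home": "http://tvbox.local:8080/doorbell",
    "away": "http://phone.example.net:8080/doorbell",
}


def build_alert_url(house_mode: str, camera: str) -> str:
    """Pick the receiving device from the house mode and build the request URL."""
    base = TARGETS["home" if house_mode == "home" else "away"]
    query = urlencode({"camera": camera})
    return f"{base}?{query}"


def send_alert(house_mode: str, camera: str) -> int:
    """Fire the HTTP request; the receiver is expected to open the camera app."""
    with urlopen(build_alert_url(house_mode, camera), timeout=5) as resp:
        return resp.status


# Only build and show the URLs here; send_alert() needs a real receiver.
print(build_alert_url("home", "front_door"))
print(build_alert_url("away", "front_door"))
```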

-

I use a

https://www.ebay.co.uk/itm/AC-110-220V-Microwave-Radar-Sensor-Switch-Body-Motion-Detector-for-LED-Light/274176461640?ssPageName=STRK%3AMEBIDX%3AIT&_trksid=p2057872.m2749.l2649

connected to a Shelly 1, which is integrated with @therealdb's Virtual HTTP Switch as a sensor.

-

@sender

Be careful with the placement, as these sensors can see through doors and thin walls. You can block the sensor with thin metal to stop false trips.

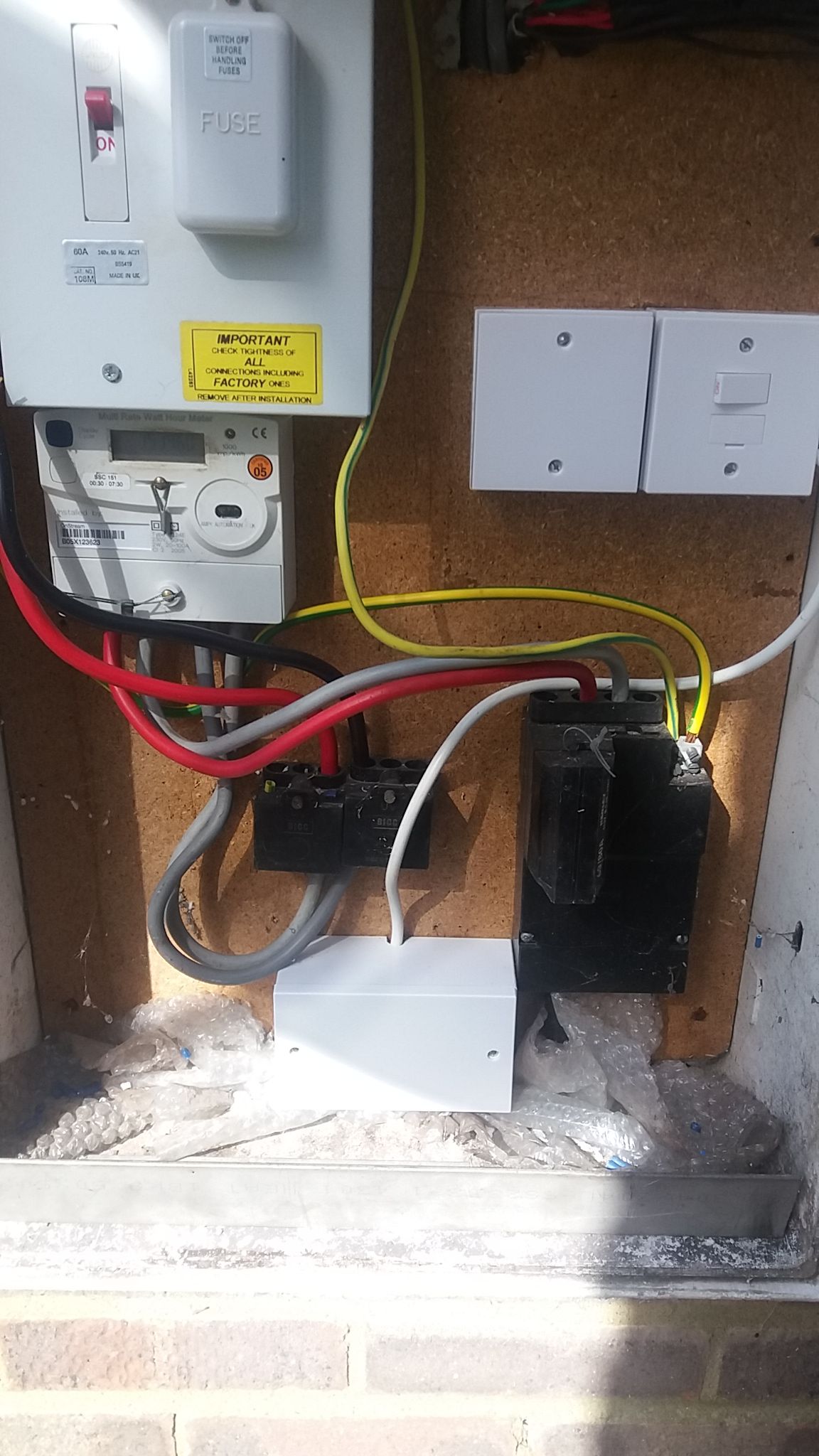

I mounted my sensor in the meter cupboard and placed a section of angle iron at the bottom to stop trips from cats etc. The white box at the bottom contains the sensor and the Shelly.

-

Video over wifi is ok IF the bandwidth is sufficient. Usually that is not the case once you start adding multiple wifi cameras to your network, and you quickly understand how unreliable a wifi connection can be.

My suggestions here are always:

- Keep wifi cameras on a dedicated WLAN network.

- Calculate your bandwidth and never go above 80% of the recommended throughput for your wifi specs.

- If you are distributing the camera feed to multiple clients ALWAYS configure your camera/dvr/clients to use multicast.
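The 80% rule from the list above can be checked with a few lines of arithmetic. This is a minimal sketch: the per-camera bitrates and the 30 Mbps usable throughput are invented example figures, so substitute the numbers for your own cameras and access point.

```python
def wifi_budget_ok(camera_mbps: list[float],
                   usable_throughput_mbps: float,
                   max_fraction: float = 0.8) -> bool:
    """Return True if the summed camera bitrates stay within the budget.

    The budget is max_fraction (80% by default) of the throughput you can
    realistically expect from the WLAN, not the advertised link rate.
    """
    return sum(camera_mbps) <= usable_throughput_mbps * max_fraction


# Example figures (assumptions): four cameras on a WLAN good for ~30 Mbps.
cameras = [4.0, 4.0, 6.0, 8.0]
print(sum(cameras), "Mbps needed, within budget:", wifi_budget_ok(cameras, 30.0))

# Adding a fifth 4 Mbps camera pushes the total past 80% of 30 Mbps.
print("with a fifth camera:", wifi_budget_ok(cameras + [4.0], 30.0))
```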

As for the topic at hand, and just for information: my doorbell is from DoorBird and is connected through PoE. So far it's been the most reliable and flexible solution I've tried.

-

With the boom of video doorbells from the likes of Ring, Skybell and Nest, I came to the realization that I did not want to be cloud dependent for this type of service, for reasons of long-term reliability, privacy and cost.

Last year I finally found a wifi video doorbell which is cost effective and supports RTSP and now ONVIF streaming: the RCA HSDB2A, which is made by Hikvision and has many clones (EZViz, Nelly, Laview). It has an unusual vertical aspect ratio designed to watch packages delivered on the floor....

It also runs on 5GHz wifi, which is a huge advantage. I have tried running IPCams on 2.4GHz before and it is a complete disaster for your wifi bandwidth. Using a spectrum analyzer, you will see what I mean: it completely saturates the wifi channels because of the very high IO requirements, and it is a horrible design. 2.4GHz gets range but is too limited in bandwidth to support any kind of video stream reliably... unless you have a dedicated SSID and channel available for it.

The video is recorded locally on my NVR. I was able to process the stream from it in Home Assistant to do facial recognition and trigger automations on openLuup, as with any other IPCam. This requires quite a bit of CPU power...

I also get snapshots through push notifications through pushover on motion like all of my other IPcams. Movement detection is switched on and off by openLuup... based on house mode.

-

@rafale77 said in Video Doorbells:

I also get snapshots through push notifications through pushover on motion like all of my other IPcams. Movement detection is switched on and off by openLuup... based on house mode.

How do you use Pushover from openLuup? I've only been able to do Growl and SMS. Being able to include a frame grab in the message would also be great.

-

I use a Home Assistant binding for this.

I have set up Home Assistant with the Pushover component. I then wrote a scene on the Vera to send a command to Home Assistant to take a snapshot from the camera I want and embed it in the notification, as documented for that component. I shared the snippet to send commands to Home Assistant in the snippet/code section.
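For readers who want a feel for what such a command looks like, here is a hedged sketch against Home Assistant's REST API (`POST /api/services/<domain>/<service>` with a bearer token). It is written in Python purely for illustration and is not the Vera scene or the shared snippet mentioned above. The host name, token, `camera.doorbell` entity, file path and the `notify.pushover` service name are all assumptions that depend on your own configuration, as is the `attachment` key used to embed the image.

```python
import json
from urllib.request import Request, urlopen

HA_URL = "http://homeassistant.local:8123"  # hypothetical host
HA_TOKEN = "YOUR_LONG_LIVED_ACCESS_TOKEN"   # placeholder, create one in your HA profile
SNAPSHOT_PATH = "/config/www/doorbell.jpg"  # assumed path that HA is allowed to write to


def build_service_request(domain: str, service: str, payload: dict) -> Request:
    """Build a POST to /api/services/<domain>/<service> with a JSON body."""
    return Request(
        url=f"{HA_URL}/api/services/{domain}/{service}",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {HA_TOKEN}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def call_service(domain: str, service: str, payload: dict) -> int:
    """Send the request and return the HTTP status code."""
    with urlopen(build_service_request(domain, service, payload), timeout=10) as resp:
        return resp.status


# Step 1: ask Home Assistant to save a snapshot from the chosen camera.
snapshot = build_service_request(
    "camera", "snapshot",
    {"entity_id": "camera.doorbell", "filename": SNAPSHOT_PATH},
)

# Step 2: send the notification with the snapshot attached (assumed key name).
notify = build_service_request(
    "notify", "pushover",
    {"title": "Doorbell", "message": "Motion at the front door",
     "data": {"attachment": SNAPSHOT_PATH}},
)

# Only the request targets are printed here; call_service() needs a live instance.
print(snapshot.full_url)
print(notify.full_url)
```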

-

@Elcid said in Video Doorbells:

My door is covered by a hidden microwave sensor. When triggered, Reactor sends an HTTP request to my phone or TV box, depending on whether I am home or away. My phone/box uses Automate to receive the HTTP request; Automate then opens the camera app and displays the camera. I can talk and listen through the camera app. It normally takes 5-10 seconds to complete. If I am home, Vera sends a TTS message to Alexa, informing me there is someone at the door.

I have a BTicino (part of Legrand, very common in France/Italy/Spain) doorbell. They have a proprietary system, but it's basically a video doorbell. I spent almost 1k to have it installed, but it handles multiple entries, multiple inside points, etc. I have it hooked up with a binary sensor to get the doorbell status, plus a switch to open the gate. It natively supports Android/iOS: I get a call and I can reply even when I am out. I use it to let packages into my porch, etc. Here in Italy an open garden on the front yard is not common; we have the whole property closed to external guests.

I too have a similar setup to @Elcid's. When someone rings, I send a notification to our phones, I turn on camera recording (I turn it OFF during the day, unless events are triggered), and I turn on a tablet in the open space to show all the external cameras. It's near the video doorbell's proprietary tablet, so you'll see the person at the doorbell, plus all the cameras. Everything is pushed via my Telegram bot.

If it's night, I turn on all the lights (I have more lights outside that I turn on during parties or when doors/gates are opened). Overall, I like my setup, the only thing I regret is that this system is closed and I can't capture a screenshot of the wide-angle camera easily. I wanted to separate this from the HA system to have stability - and I was right, from that point of view.

-

@therealdb said in Video Doorbells:

Overall, I like my setup, the only thing I regret is that this system is closed and I can't capture a screenshot of the wide-angle camera easily.

My NVR is so old that its SSL encryption is obsolete, so the email function no longer works. I capture images with Automate and use Automate to send an email with the screenshot to my device or FTP server. If your system has an app, it may be possible to capture the screen with Automate.