OpenLuup unavailable

-

That's great, thanks. Just to let you know that I am taking this very seriously and I now have a fairly continuous stream of UDP updates going from my VeraLite to three different openLuup systems (RPi, BeagleBone Black, Synology Docker) via the UDP -> MQTT bridge, with MQTT Explorer running on an iMac subscribed to one or other of those. From time to time the iMac sleeps, so the Explorer disconnects and then later reconnects. All openLuup instances are running the latest v21.3.19, and I am looking for large gaps in the logs (or, indeed, a system freeze).

So I am stress-testing this as far as I can without having your exact configuration.
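Looking for large gaps in a log can be automated. The following is only a rough sketch, assuming each log line starts with a `YYYY-MM-DD HH:MM:SS` timestamp; the function name `find_gaps`, the 120-second threshold and the sample lines are illustrative assumptions, not taken from openLuup itself.

```python
from datetime import datetime, timedelta


def find_gaps(lines, threshold=timedelta(seconds=120)):
    """Return (previous, current) timestamp pairs that are further apart than threshold."""
    gaps = []
    previous = None
    for line in lines:
        try:
            # Assumption: the first 19 characters are "YYYY-MM-DD HH:MM:SS".
            current = datetime.strptime(line[:19], "%Y-%m-%d %H:%M:%S")
        except ValueError:
            continue  # skip lines that do not begin with a timestamp
        if previous is not None and current - previous > threshold:
            gaps.append((previous, current))
        previous = current
    return gaps


# Hypothetical sample lines, for illustration only.
sample = [
    "2021-03-21 05:44:29.100   first entry",
    "2021-03-21 05:44:31.200   second entry",
    "continuation line without a timestamp",
    "2021-03-21 05:50:00.000   entry after a long pause",
]

for start, end in find_gaps(sample):
    print(f"gap of {end - start} between {start} and {end}")
```

To check a real log, the same function could be fed the lines of an open file instead of the sample list.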

-

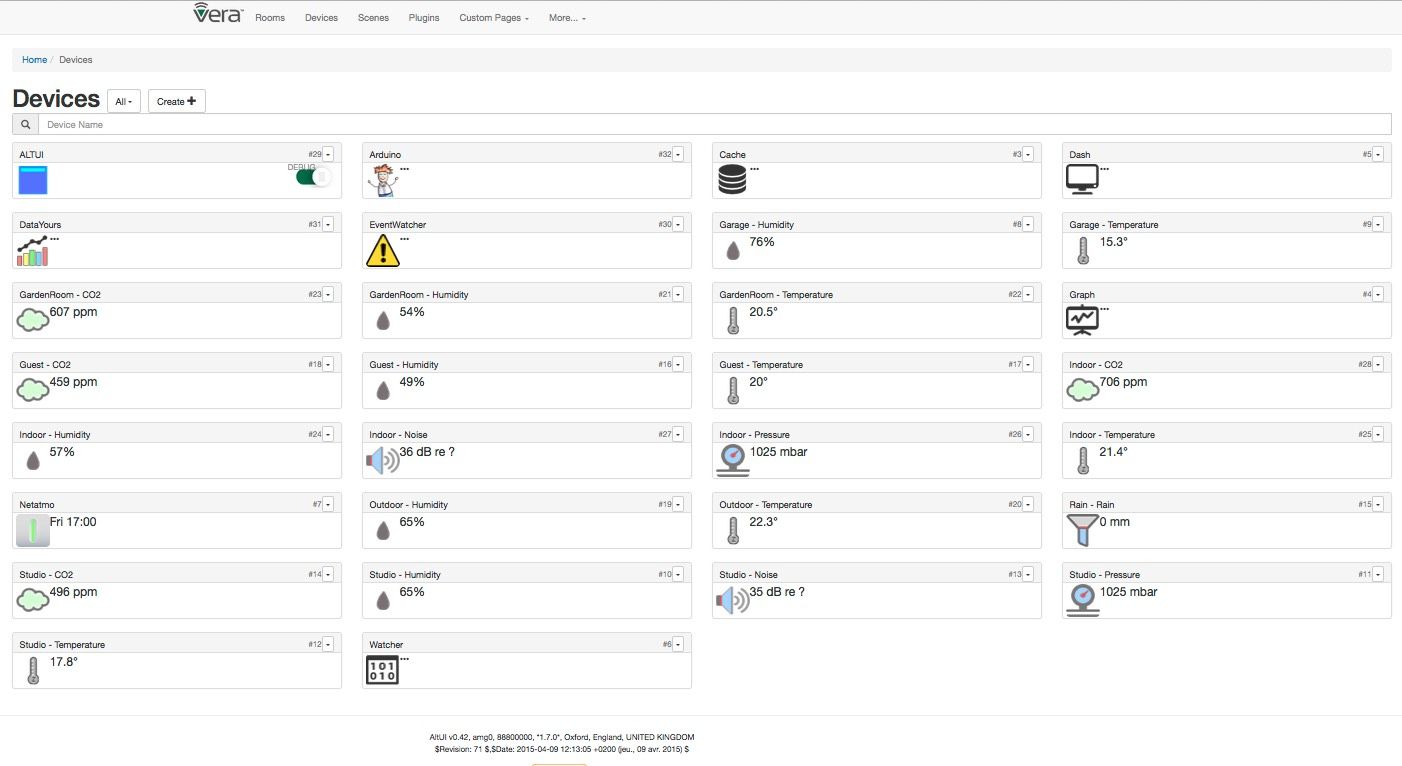

@akbooer I'm running on an old 2,27x4 GHz Fujitsu i3 Esprimo SFF PC with Ubuntu 18.04.5 and 4GB RAM and a 120 GB SSD.

My Z-way server is on a separate Raspberry Pi 3B+ with a Razberry board. Then one old UI5 Vera Lite and a UI7 Vera Plus, both almost completely offloaded, just a few virtual devices left on them.

This morning no crash. Not sure which of the changes I made that made the difference. Still good progress and something to continue working from.

This is what I did yesterday:

- Updated to OpenLuup 21.3.19 from 21.3.18
- Removed the line luup.attr_set ("openLuup.MQTT.PublishDeviceStatus", "0") from Lua Startup. I tested reloading without the line, and it seems it is no longer needed.
- Deactivated a few Sitesensors: the slow Shelly Uni, one fetching weather data and three more fetching some local data
- Deleted two devices from Netmon that do not exist anymore, i.e. never respond to ping
- Cut the power to my three test Mqtt devices, i.e. no active Mqtt devices reporting anything over Mqtt all night

-

Progress indeed. A slow build back should isolate the culprit.

For my part, I’ve spotted a difference in behaviour between my RPi and Docker instances when there’s a network outage, resulting in an error message "no route to host" on the RPi which blocks the system for longer than 2 minutes. Needs further investigation.
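As a general illustration of why an unreachable host can stall a program, and how a timeout bounds the wait, here is a minimal Python sketch. It is not openLuup's code (openLuup is written in Lua); the function name `can_connect` and the address used are assumptions for the example.

```python
import socket


def can_connect(host, port, timeout=5.0):
    """Try a TCP connection, giving up after `timeout` seconds instead of blocking."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # OSError covers timeouts, refused connections and "No route to host".
        return False


# Hypothetical broker address; 1883 is the conventional MQTT port.
print(can_connect("127.0.0.1", 1883, timeout=2.0))
```

Without an explicit timeout, a connection attempt falls back to the operating system's defaults, which may be far longer than an application can afford to wait.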

-

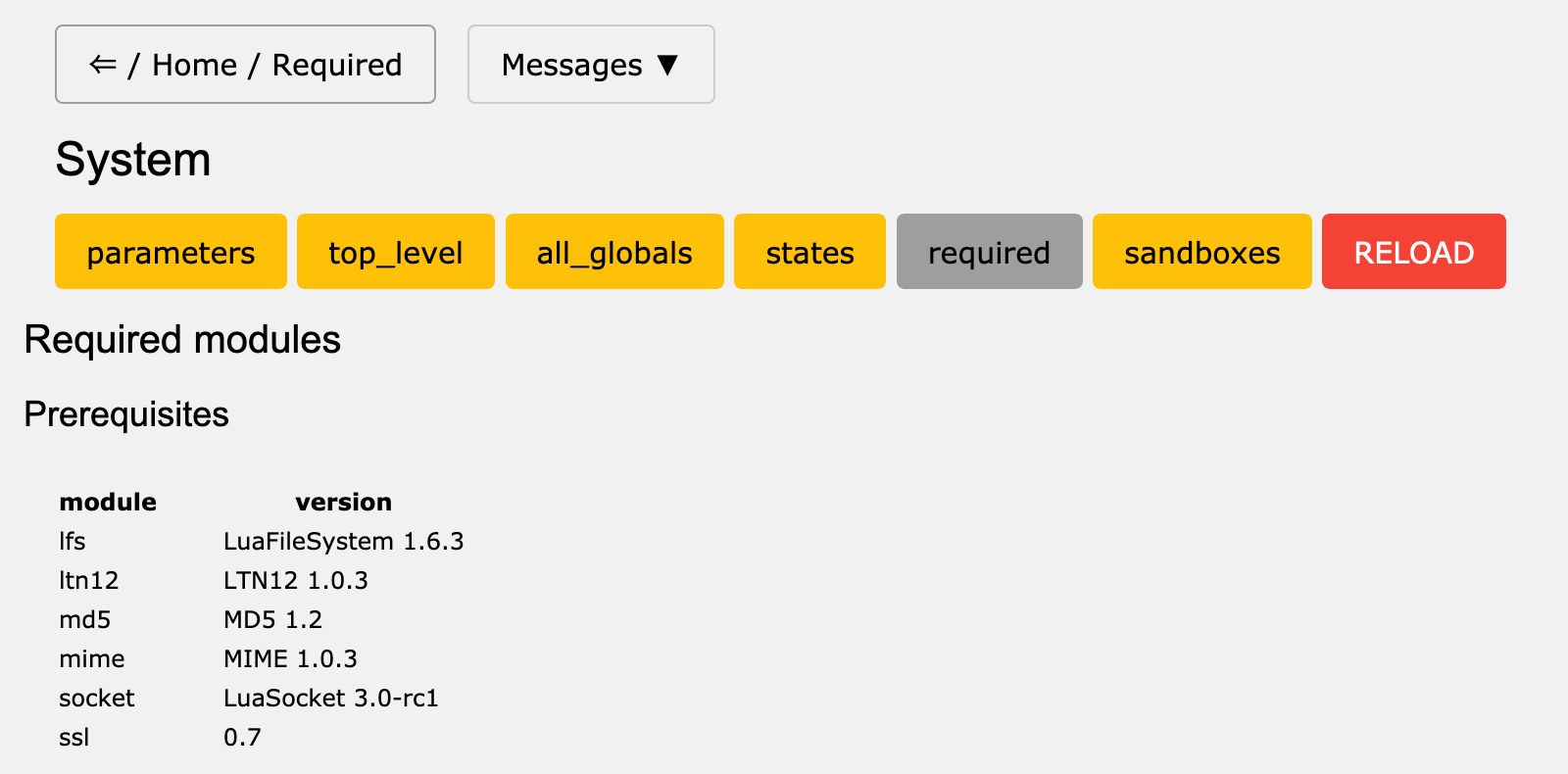

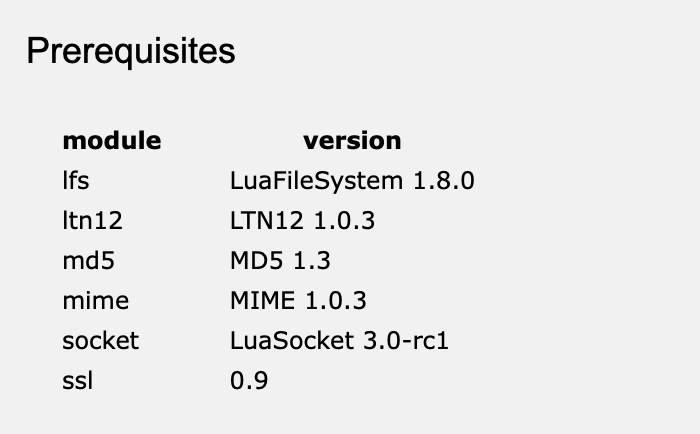

The latest development version v21.3.20 has a new console page openLuup > System > Required which shows key system modules, and also those required by each plugin.

I am seeing some small version differences between my systems, but I don't think this is the issue. I would, nevertheless, be interested in your version of this page:

Incidentally, this system (running in a Docker on my Synology NAS) ran overnight with over 100,000 MQTT messages sent to MQTT Explorer, totalling over 18 Mb of data without any issues at all.

-

This morning no crash either, progress again. This shows that the Mqtt functionality works overnight as such anyway.

What I changed yesterday:

- updated OpenLuup from 21.3.19 to 21.3.20
- powered up one of the test Mqtt devices

Next step is to start one of the other devices and see what happens.

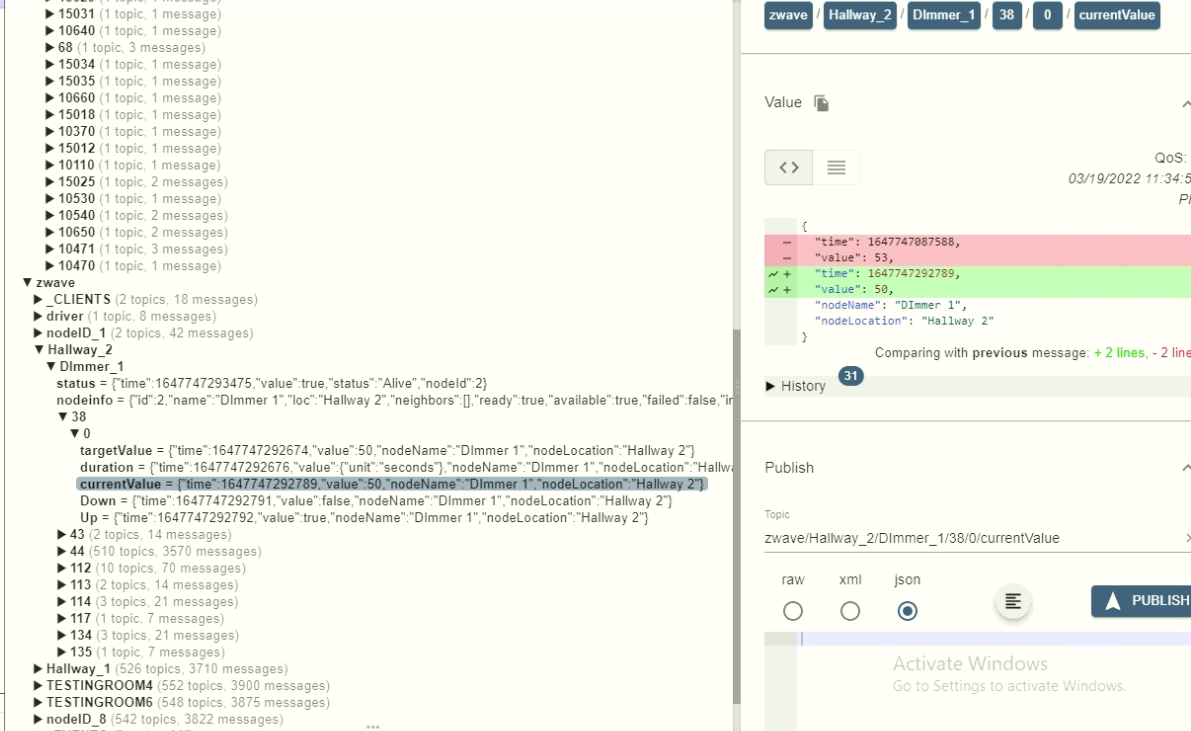

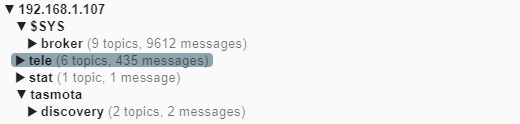

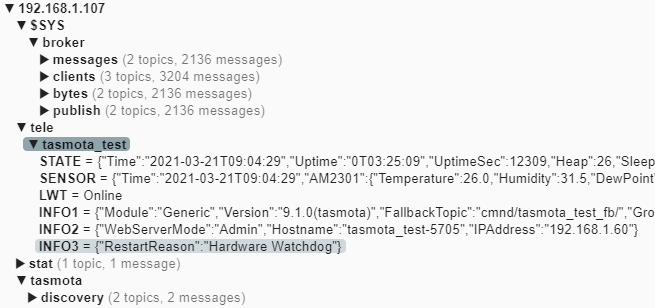

This is what MqttExplorer has logged since yesterday:

The two topics that the device sends out every 5 minutes:

05:44:29 MQT: tele/tasmota_test/STATE = {"Time":"2021-03-21T05:44:29","Uptime":"0T00:05:09","UptimeSec":309,"Heap":27,"SleepMode":"Dynamic","Sleep":50,"LoadAvg":19,"MqttCount":1,"Wifi":{"AP":1,"SSId":"MyNetwork","BSSId":"FC:EC:DA:D1:7A:64","Channel":11,"RSSI":100,"Signal":-45,"LinkCount":1,"Downtime":"0T00:00:03"}}

05:44:29 MQT: tele/tasmota_test/SENSOR = {"Time":"2021-03-21T05:44:29","AM2301":{"Temperature":26.0,"Humidity":30.9,"DewPoint":7.5},"TempUnit":"C"}
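For anyone who wants to pull individual readings out of console lines like these, a small sketch along the following lines might help. It assumes the `HH:MM:SS MQT: topic = {json}` layout shown in this post; the helper name `parse_tasmota_line` is made up for the example.

```python
import json


def parse_tasmota_line(line):
    """Split 'HH:MM:SS MQT: topic = {json}' into (time, topic, payload dict)."""
    head, _, body = line.partition(" = ")
    time_str, _, topic = head.partition(" MQT: ")
    return time_str, topic, json.loads(body)


# The SENSOR line quoted in the post.
line = (
    '05:44:29 MQT: tele/tasmota_test/SENSOR = '
    '{"Time":"2021-03-21T05:44:29","AM2301":{"Temperature":26.0,'
    '"Humidity":30.9,"DewPoint":7.5},"TempUnit":"C"}'
)

time_str, topic, payload = parse_tasmota_line(line)
reading = payload["AM2301"]
print(topic, reading["Temperature"], payload["TempUnit"], reading["Humidity"])
```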

-

Yet another morning without a crash, once again some progress.

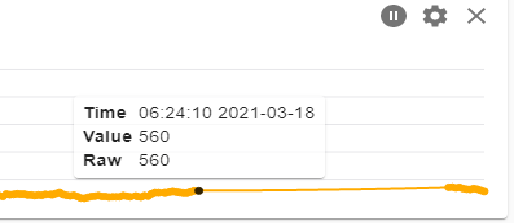

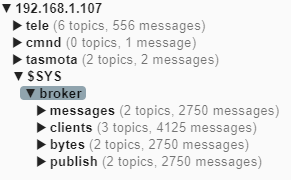

Not so much Mqtt traffic during the last 24 hours:

Just to test my other type of Mqtt test device I did the following yesterday:

- powered down the Mqtt test device I had up and running
- powered up the other type of Mqtt test device

The one I have had up and running publishes the following every 5 minutes:

08:11:27 MQT: tele/TasmotaCO2An/STATE = {"Time":"2021-03-22T08:11:27","Uptime":"0T22:55:12","UptimeSec":82512,"Heap":22,"SleepMode":"Dynamic","Sleep":50,"LoadAvg":19,"MqttCount":1,"Wifi":{"AP":1,"SSId":"BeachAC","BSSId":"FC:EC:DA:D1:7A:64","Channel":11,"RSSI":74,"Signal":-63,"LinkCount":1,"Downtime":"0T00:00:03"}}

08:11:27 MQT: tele/TasmotaCO2An/SENSOR = {"Time":"2021-03-22T08:11:27","BME280":{"Temperature":20.1,"Humidity":40.4,"DewPoint":6.2,"Pressure":1007.3},"MHZ19B":{"Model":"B","CarbonDioxide":767,"Temperature":43.0},"PressureUnit":"hPa","TempUnit":"C"}

Perhaps a bit overcautious, but I wanted to see that the second device works overnight, since this type was the one I added last time when the problems started.

Next step I think will be to run my three Mqtt sensors for some time.

-


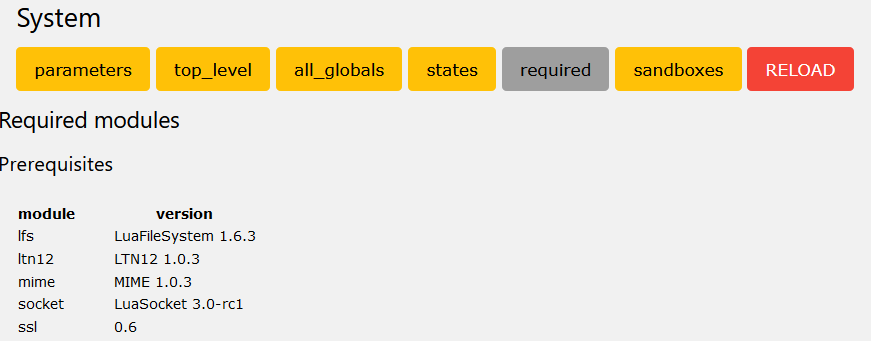

@akbooer I installed v21.3.20, this is what it shows:

Your data sounds promising.

I can add that despite the problems my system still feels really fast when it is up and running.

-

That’s looking good.

Not to change too many things at one time, but the latest v21.3.21 has even more safeguards against sockets being able to hang the system. As a bonus, the log pages show how many times the log contains lines with the word "error".
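For illustration only, counting log lines that contain the word "error" could look something like the sketch below. How openLuup actually performs its count is not stated here, so the case-insensitive whole-word match, the function name `count_error_lines` and the sample lines are all assumptions.

```python
import re

# Assumption: match "error" as a whole word, ignoring case.
ERROR_WORD = re.compile(r"\berror\b", re.IGNORECASE)


def count_error_lines(lines):
    """Count lines containing the word 'error'; each line counts at most once."""
    return sum(1 for line in lines if ERROR_WORD.search(line))


# Hypothetical log lines, for illustration only.
sample = [
    "socket error: no route to host",
    "status update received",
    "Error reading sensor, retrying after error",
]

print(count_error_lines(sample))
```

A count like this says nothing about severity, so a harmless message that merely mentions the word would still be included.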

-

Hi AK,

What is the errors count based on? I'm not seeing anything jump out in the log file.

Cheers, Rene

-

This error counting in the log is a great idea. It triggered my OCD tendencies and got me to track down a couple of typos along with loading the upnp proxy server... not as a plugin but as a systemd service to get rid of the sonos errors in the log.

Suggestion @akbooer: Don't isolate the lines showing errors. Instead it would be nice if they could be highlighted or colored on the log visualization page.
