mblindsey

@mblindsey

I'm looking at using Home Assistant for the first time, either on a Home Assistant Green (their own hardware) or on a cheap second-hand mini PC.

It sounds like Home Assistant OS is Linux-based and uses Docker for HA, etc.

Would I also be able to install things like MSR on their OS, on the same box?

Thanks.

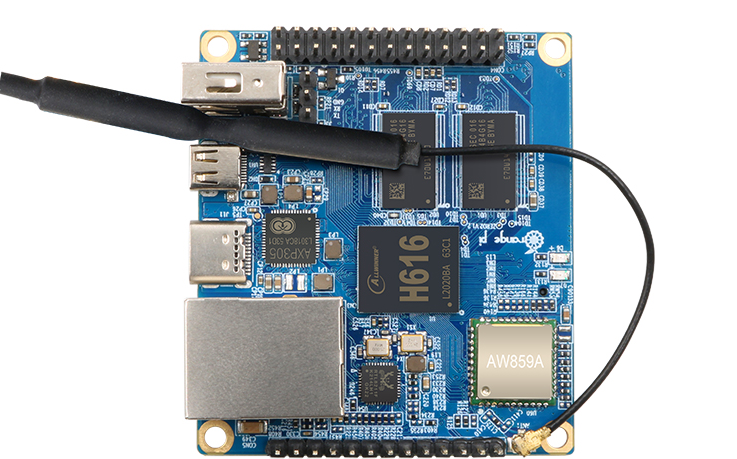

The last of four boards I'm trying in this batch is the Orange Pi 4 LTS. I purchased a 3GB RAM + 16GB eMMC model from Amazon for $83, making it the most costly of the four boards tried, but still well under my US$100 limit.

This board is powered by a Rockchip RK3399-T processor, ARM-compatible with dual Cortex-A72 cores and quad Cortex-A53 cores at 1.6GHz (1.8GHz for the 4GB model). Compared to the RPi 3B+ with four Cortex-A53 cores and the RPi 4B with four Cortex-A72 cores, this board is a hybrid that I would expect to land in the performance middle between the two RPi models. It's available in 3GB and 4GB DDR4 RAM configurations, with and without 16GB eMMC storage. It has a MicroSDHC slot, gigabit Ethernet, WiFi and BT, two USB 2.0 type A ports, one USB 3.0 type C port, a mini PCIe ribbon-cable connector (requires add-on board for standard connector), two each RPi-compatible camera and LCD ports, HDMI type A, and can be powered (5VDC/3A) via USB-C or DC type C (3.8mm OD/1.1mm ID) jack (center-positive), an odd and perhaps unwelcome departure from the more common type A (5.5mm/2.1mm). A serial port for console/debug can be connected by using a (not included) USB-TTL adapter (3.3V) via pin headers like the Orange Pi Zero 2. The included dual-band antenna connects via U.FL connector to the board, so it's easy to substitute another if you prefer. The manufacturer recommends use of a heat sink (which was included in the box). A metal cooling case is also offered by the manufacturer (a bundle with the metal case and a power supply is sold on Amazon for $90 as of this writing).

The Orange Pi 4 LTS is somewhat longer than the RPi 4B, and although the boards are the same width, the mounting hole placement is different both in length and (oddly) width. Between this and the differences in connector locations, neither board is a drop-in replacement for the other and their respective cases are not interchangeable. The 26-pin header is a subset of the RPi 4B's 40-pin header, so some HATs for the RPi may work (although the mounting hole differences will make securing them "interesting"), and some HATs will surely not.

Models with eMMC storage have an OS installed and boot immediately with SSH daemon running and ready for login. Mine was running Debian Bullseye, which would probably be fine for most users. It had clearly been on there a while, because it needed a lot of updates, but it's a current distro, so you're running out of the box with something that will last.

A different OS can be installed by downloading an image (once again I chose Ubuntu Jammy) and writing it to a MicroSD card, then booting the system from the SD card. You can either leave the system in that state (running the OS from the SD card), or copy the OS from the SD card to the eMMC. The latter is done by a script; documentation for the process is best described in the downloadable PDF User Manual. This took about 10 minutes and went smoothly, and I was able to boot the system without the SD card after the process completed.

I have lingering questions around the value of the eMMC storage. It's definitely faster than using MicroSD or USB-based storage (I got 311MB/s average on a 4GB write, compared to MicroSD performance around 15MB/s), but it would take a long-term test of this product to determine if the on-board eMMC option has the stamina to take the write counts typical of Linux systems, and if its wear-leveling and error correction are sufficient to assure a long, error-free life. Given the high premium apparently being paid for including eMMC on the board, it should be fast and durable, but only time and experience (perhaps painful) would tell the latter. A careful configuration with other Flash-friendly filesystems could be used to reduce wear, but this is an advanced configuration/cookbook topic and beyond the scope of this writing. This question is also not unique to eMMC — MicroSD cards are also known to fail with high write cycles, so the use of a "high endurance" product is recommended for any and all systems using MicroSD as primary storage. The board has Mini PCIe capability, and that may be a storage alternative, but read on...

Also bear in mind that the eMMC storage is fixed-size forever; it cannot be expanded, and 16GB can run out pretty quickly these days. Users of MicroSD cards for primary storage can upgrade to bigger cards, but when users of eMMC primary storage outgrow it, the only choice is to add a MicroSD card or other "external" storage to the system, move part of the filesystem to it, and then manage both storage devices and deal with the limitations and risks of both.

As I mentioned with the Orange Pi Zero 2, if you are going to use this board as a home automation controller/gateway or similar role, it should (IMO) have a battery-backed real time clock (RTC), and Orange Pi offers an add-on module that connects directly to the 26-pin header on the board. An available expansion board provides a standard Mini PCIe interface and SIM card slot (hmm...), but it connects to the main board via a short ribbon cable, and its mounting holes have no complement on the main board, so it seems like it would be a fragile dangly thing that's a nuisance to deal with.

I want to like this board more, and it's very capable, but I'm concerned about value. The limited options for eMMC (16GB or none), the question mark of the eMMC's longevity vs cost, the strange DC power connector choice, the lack of 40-pin GPIO on a full-size (plus) board, the inconsistent hole placement, and the fragile Mini PCIe arrangement are all "cons" that devalue this board in my view. The price point is clearly driven by the additional capabilities of the board (camera support, ports, six-core CPU, extra RAM, on-board eMMC storage), but unfortunately, a great many of these features may not be useful for home automation, and are therefore potentially a waste of money.

In terms of overall value, I still believe the Libre "Le Potato" is the better choice, with the Orange Pi Zero 2 a (very) close second, but I'll admit I'm focused on a particular application and your needs may be better suited to what this board offers than mine.

Passmark Results:

OrangePi 4 LTS

Cortex-A72 (aarch64)

6 cores @ 1200 MHz | 2.9 GiB RAM

Number of Processes: 6 | Test Iterations: 1 | Test Duration: Medium

--------------------------------------------------------------------------

CPU Mark: 583

Integer Math 12037 Million Operations/s

Floating Point Math 2542 Million Operations/s

Prime Numbers 4.5 Million Primes/s

Sorting 3141 Thousand Strings/s

Encryption 153 MB/s

Compression 4049 KB/s

CPU Single Threaded 154 Million Operations/s

Physics 80.5 Frames/s

Extended Instructions (NEON) 244 Million Matrices/s

Memory Mark: 498

Database Operations 551 Thousand Operations/s

Memory Read Cached 2524 MB/s

Memory Read Uncached 2602 MB/s

Memory Write 3182 MB/s

Available RAM 1947 Megabytes

Memory Latency 119 Nanoseconds

Memory Threaded 6243 MB/s

---------------

eMMC storage write 311MB/s average for 4GB; MicroSD (Samsung 32GB class 10) storage write 15MB/s.
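The write figures above are quoted without the method used to obtain them. For anyone wanting to take a roughly comparable measurement on their own board, here is a minimal Python sketch of a sequential write test. The function name, the chunk size, and the choice to count 1 MB as 10^6 bytes are all my assumptions, not the procedure used for the numbers in this post.

```python
import os
import time


def measure_write_mbps(path: str, total_bytes: int, chunk_bytes: int = 4 * 1024 * 1024) -> float:
    """Write total_bytes to path, flush to the device, and return MB/s (1 MB = 10**6 bytes)."""
    chunk = os.urandom(chunk_bytes)  # random data, so compression cannot flatter the result
    written = 0
    start = time.perf_counter()
    with open(path, "wb") as f:
        while written < total_bytes:
            n = min(chunk_bytes, total_bytes - written)
            f.write(chunk[:n])
            written += n
        f.flush()
        os.fsync(f.fileno())  # count the time needed to push cached data to storage
    elapsed = time.perf_counter() - start
    return written / elapsed / 1e6
```

Point `path` at a file on the storage device under test (eMMC or MicroSD), use a size well above the amount of RAM cache you expect to be in play (the post used 4GB), and delete the test file afterwards. Results will vary with filesystem, card, and whatever else the system is doing.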

With Raspberry Pi boards continuing to be relatively scarce, I've been trying a few alternatives to see what may be usable and good. I had previously written about the Jetson Nano 2GB, which is great, but a little pricey, so I'm trying to find sub-US$100 boards that will run Reactor.

I've got four that I'm trying now, but one in particular goes right to work in the most predictable way and seems worth a mention immediately: the Libre Computer Board AML-S905X-CC 2GB (known as "Le Potato").

The form factor is very similar to that of the Raspberry Pi 3 B+, and it has a comparable CPU (ARM Cortex-A53, quad 64-bit cores at 1.5+GHz, a slightly higher clock speed). It's US$35 on Amazon and LoverPi in the (recommended) 2GB configuration, and easy to get.

Startup is like RPi: download one of the available OS images (Ubuntu, Raspbian, Debian, ARMbian, etc.) from their site and write the image to a MicroSD card, insert into slot, power up, and off you go. I tried the Ubuntu 22.04 image first and it comes right up. No problem getting nodejs 18.12.1 installed and running (with Reactor).

No WiFi on board, but I don't see that as a minus for use as a controller/hub (which should be hard-wired, IMO). The 40-pin GPIO connector is compatible with typical RPi HATs (PoE, breakouts, etc.).

There is an available eMMC (solid state storage) module to use instead of MicroSD, which I would recommend for long-term use. It runs US$25 for 32GB (64GB and 128GB available). The module is scarcely larger than the chip it carries, and has the smallest board-to-board connector I've ever seen.

Next up: ESPRESSObin 2GB (spoiler: it's... technical...)

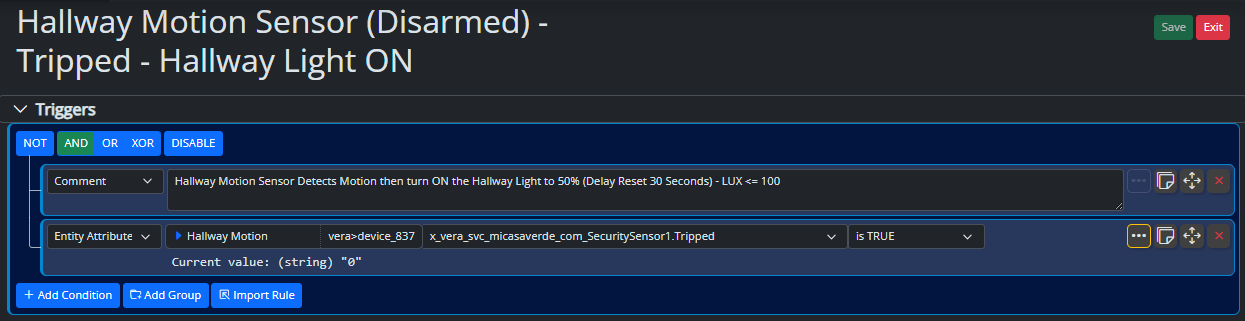

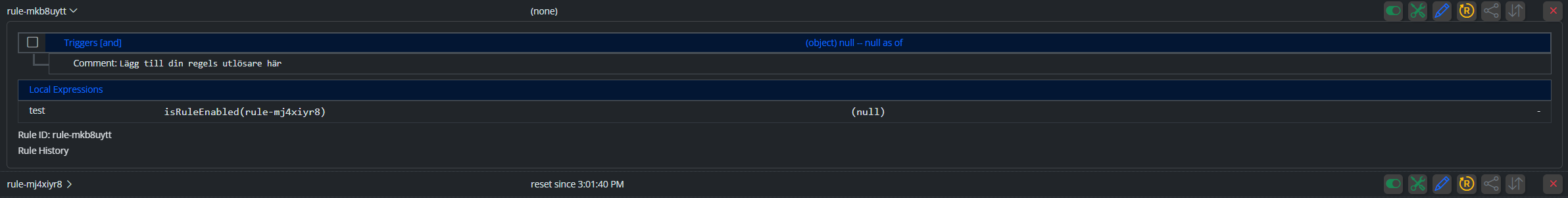

While messing around with some stuff, I worked out a way to self-trigger some tests that I wanted to run on the HA <> MSR integration.

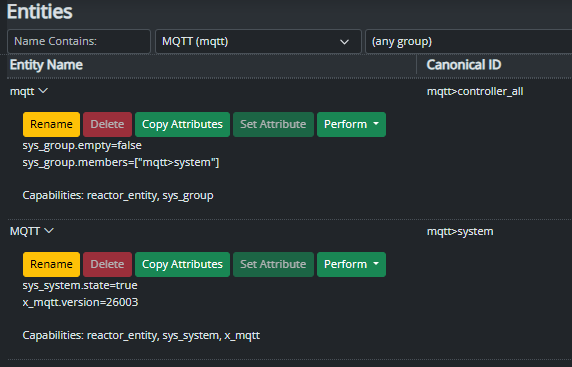

This got me wondering if there's an entity that changes state / is exposed when a configured controller goes offline? I can't see one but thought it might be hidden or something?

Cheers

C

Using build 25328 and having the following users.yaml configuration:

    users:
      # This section defines your valid users.
      admin: *******

    groups:
      # This section defines your user groups. Optionally, it defines application
      # and API access restrictions (ACLs) for the group. Users may belong to
      # more than one group. Again, no required or special groups here.
      admin_group:
        users:
          - admin
        applications: true # special form allows access to ALL applications
      guests:
        users: "*"
        applications:
          - dashboard

    api_acls:
      # This ACL allows users in the "admin" group to access the API
      - url: "/api"
        group: admin_group
        allow: true
        log: true
      # This ACL allows anyone/thing to access the /api/v1/alive API endpoint
      - url: "/api/v1/alive"
        allow: true

    session:
      timeout: 7200 # (seconds)
      rolling: true # activity extends timeout when true

    # If log_acls is true, the selected ACL for every API access is logged.
    log_acls: true

    # If debug_acls is true, even more information about ACL selection is logged.
    debug_acls: true

My goal is to allow anonymous users to access the dashboard, but MSR still asks for a password when I try to open it. Nothing in the logs relates to dashboard access. It's probably an error in my configuration, but I need help finding it. I tried putting url: "/dashboard" under api_acls, but that was a long shot and didn't work.

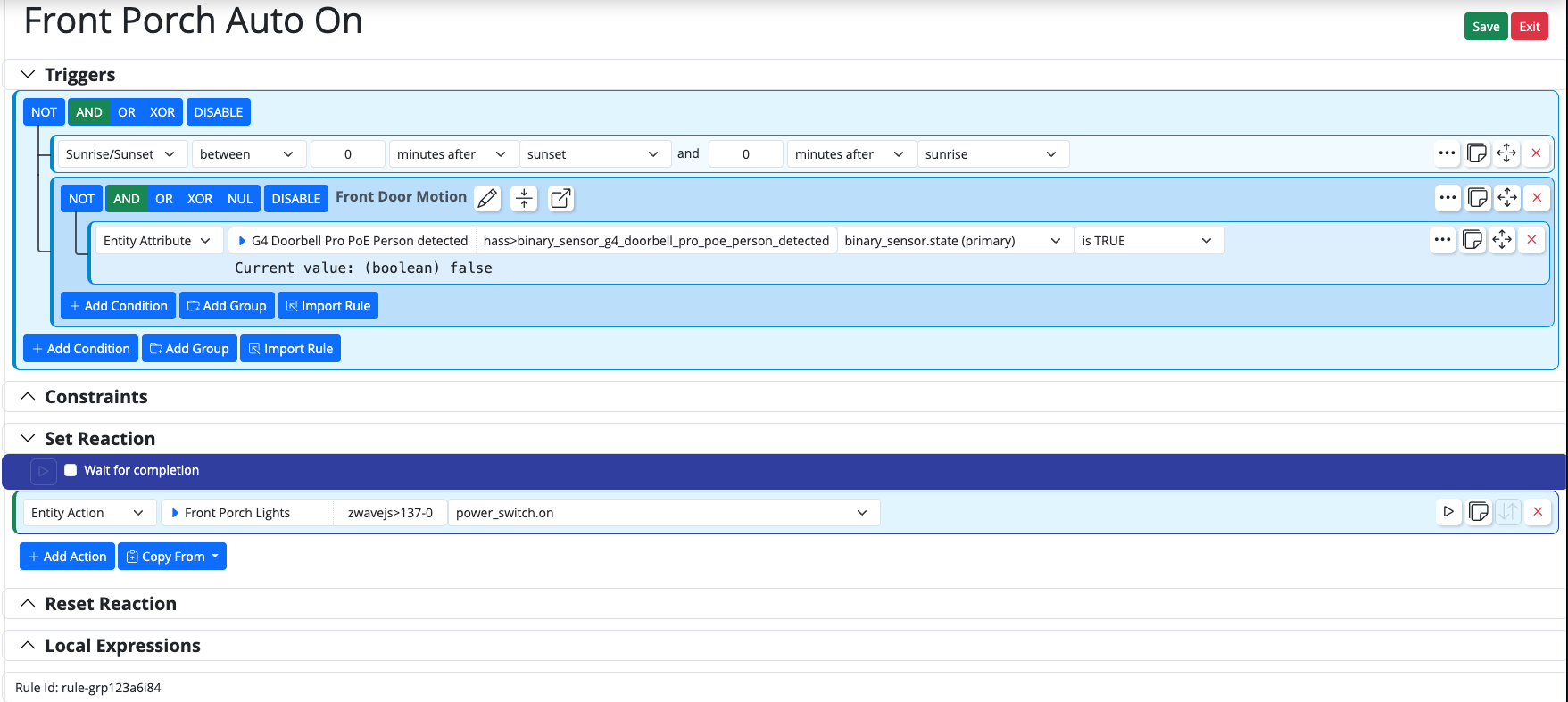

I use Virtual Entity Controller virtual switches which I turn on via webhooks from other applications. Once a switch triggers and turns on, I can then activate associated rules.

I would like each virtual switch to automatically turn off after a configurable time (e.g., 5 seconds, 10 seconds).

Is there a better way to achieve this auto-off behavior instead of creating a separate rule for each switch that uses the 'Condition must be sustained for' option to turn it off?

With a large number of these switches (and the associated turn-off rules), I'm checking to see if there is a simpler approach. If not, could this be a feature request to add an auto-off timer directly to the virtual switches?
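To make the requested behavior concrete, here is a minimal Python sketch of a switch that turns itself off a configurable time after it was last turned on. This is only an illustration of the idea: `AutoOffSwitch` and its methods are hypothetical names of mine and are not part of Reactor or VirtualEntityController.

```python
import threading


class AutoOffSwitch:
    """Hypothetical switch that reverts to off a fixed time after the last turn_on()."""

    def __init__(self, auto_off_seconds: float):
        self.auto_off_seconds = auto_off_seconds
        self.is_on = False
        self._generation = 0  # bumped on every state change to invalidate older timers
        self._lock = threading.Lock()

    def turn_on(self) -> None:
        with self._lock:
            self.is_on = True
            self._generation += 1
            timer = threading.Timer(
                self.auto_off_seconds, self._expire, args=(self._generation,)
            )
            timer.daemon = True  # do not keep the process alive just for a pending timer
            timer.start()

    def turn_off(self) -> None:
        with self._lock:
            self._generation += 1  # any pending timer becomes a no-op
            self.is_on = False

    def _expire(self, generation: int) -> None:
        with self._lock:
            # Only the timer started by the most recent turn_on() may switch off.
            if generation == self._generation:
                self.is_on = False
```

With this shape, a webhook that fires again before the timeout simply restarts the countdown, and the delay is a per-switch setting rather than a separate rule.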

Thanks

Reactor (Multi-hub) latest-26011-c621bbc7

VirtualEntityController v25356

Synology Docker

TL;DR: Format of data in storage directory will soon change. Make sure you are backing up the contents of that directory in its entirety, and you preserve your backups for an extended period, particularly the backup you take right before upgrading to the build containing this change (date of that is still to be determined, but soon). The old data format will remain readable (so you'll be able to read your pre-change backups) for the foreseeable future.
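For anyone who does not yet have a backup routine, here is a minimal Python sketch of a whole-directory backup that does not care about file suffixes. The function name, archive naming scheme, and any paths you pass in are assumptions of mine; adapt them to your own install.

```python
import tarfile
import time
from pathlib import Path


def backup_storage(storage_dir: Path, backup_dir: Path) -> Path:
    """Archive the entire storage directory, whatever files it contains."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    archive = backup_dir / f"reactor-storage-{stamp}.tar.gz"
    with tarfile.open(str(archive), "w:gz") as tar:
        # Adding the directory recurses into it, so no per-suffix file list is needed.
        tar.add(str(storage_dir), arcname=storage_dir.name)
    return archive
```

Calling `backup_storage(Path("/path/to/reactor/storage"), Path("/path/to/backups"))` (both paths are placeholders) writes a timestamped archive and returns its location. Because each run creates a new file, older backups are preserved until you prune them yourself.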

In support of a number of other changes in the works, I have found it necessary to change the storage format for Reactor objects in storage at the physical level.

Until now, plain, standard JSON has been used to store the data (everything under the storage directory). This has served well, but has a few limitations, including no real support for native JavaScript objects like Date, Map, Set, and others. It also is unable to store data that contains "loops" — objects that reference themselves in some way.
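The limitations are easy to see with any JSON library. Reactor runs on Node.js, so the Python sketch below is only an analogy, with a Python `datetime` and `set` standing in for JavaScript's Date and Set; the helper name `json_problem` is mine, and exact failure modes differ between languages.

```python
import json
from datetime import datetime, timezone


def json_problem(value):
    """Return None if value survives a JSON round trip unchanged, else the error name."""
    try:
        restored = json.loads(json.dumps(value))
    except (TypeError, ValueError) as exc:
        return type(exc).__name__
    return None if restored == value else "changed"


plain = {"name": "rule1", "enabled": True, "count": 3}
with_date = {"since": datetime(2024, 1, 1, tzinfo=timezone.utc)}
with_set = {"tags": {"a", "b"}}
loop = {"name": "self"}
loop["me"] = loop  # an object that references itself

for label, value in [("plain", plain), ("date", with_date), ("set", with_set), ("loop", loop)]:
    print(label, json_problem(value))
```

Only the plain dictionary round-trips cleanly. The date and the set are rejected as not serializable, and the self-referencing object is rejected as a circular reference.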

I'm not sure exactly when, but in the not-too-distant future I will publish a build using the new data format. It will automatically convert existing JSON data to the new format. For the moment, it will save data in both the new format and the old JSON format, preferring the former when loading data from storage. I have been running my own home with this new format for several months, and have no issues with data loss or corruption.

A few other things to know:

If you are not already backing up your storage directory, you should be. At a minimum, back this directory up every time you make big changes to your Rules, Reactions, etc.

Your existing JSON-format backups will continue to be readable for the long-term (years). The code that loads data from these files looks for the new file format first (which will have a .dval suffix), and if not found, will happily read (and convert) a same-basenamed .json file (i.e. it looks for ruleid.dval first, and if it doesn't find it, it tries to load ruleid.json). I'll publish detailed instructions for restoring from old backups when the build is posted (it's easy).

The new .dval files are not directly human-readable or editable as easily as the old .json files. A new utility will be provided in the tools directory to convert .dval data to .json format, which you can then read or edit if you find that necessary. However, that may not work for all future data, as my intent is to make more native JavaScript objects directly storable, and many of those objects cannot be stored in JSON.

You may need to modify your backup tools/scripts to pick up the new files: if you explicitly name .json files (rather than just specifying the entire storage directory) in your backup configuration, you will need to add .dval files to get a complete, accurate backup. I don't think this will be an issue for any of you; I imagine that you're all just backing up the entire contents of storage regardless of format/name. That is the safest (and IMO most correct) way to go; if that's not what you're doing, consider changing your approach.

The current code stores the data in both the .dval form and the .json form to hedge against any real-world problems I don't encounter in my own use. Some future build will drop this redundancy (i.e. save only to .dval form). However, the read code for the .json form will remain in any case.

This applies only to persistent storage that Reactor creates and controls under the storage tree. All other JSON data files (e.g. device data for Controllers) are unaffected by this change and will remain in that form. YAML files are also unaffected by this change.
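The load order described in the notes above (new-format file first, then the same-basenamed .json file) can be sketched in a few lines. This is a Python illustration of the lookup only, not Reactor's actual code, and `resolve_storage_file` is a hypothetical name.

```python
from pathlib import Path
from typing import Optional


def resolve_storage_file(storage_dir: Path, basename: str) -> Optional[Path]:
    """Return the file to load for an object: .dval if present, else .json, else None."""
    for suffix in (".dval", ".json"):
        candidate = storage_dir / (basename + suffix)
        if candidate.is_file():
            return candidate
    return None
```

Restoring an old backup that contains only `ruleid.json` therefore still resolves to a loadable file, while a directory holding both files resolves to `ruleid.dval`.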

This thread is open for any questions or concerns.
