InfluxFeed plugin & throttling export

-

(Using userauth-24120-7745fb8d build in Docker)

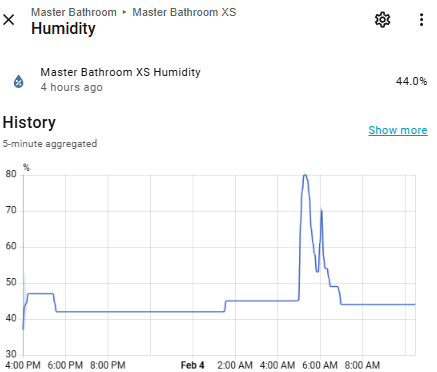

There's a filtering capability for entities in reactor.yaml, but I have a case where I don't want to filter an entity altogether, but would like to "throttle" it, as this sensor updates every 1-2 seconds (and therefore unnecessarily takes database space).

Sensor data comes through home assistant, and seems that there's no way to control update interval at that end.

So I'm asking if plugin configuration could support limiting/throttling updates for certain entities?

-

T tunnus referenced this topic on

T tunnus referenced this topic on

-

This is challenging, because generally in the connector to InfluxDB, we don't want to lose data. If, for example, we just drop any update that's within 60 seconds of a previous update, ignoring the complexity it adds to make that possible, I'm pretty sure the next request from someone (perhaps not you, but someone) will be "well hey, can't it just take the mean of the updates for that period" or "can't it just suppress writing changes that are less than a certain tolerance" or something like that, and now complexity is going non-linear. And on top of that, a lot of that work and computation is exactly what InfluxDB itself is built to do.

With regard to the first-level complexity of just quieting within an interval, there's currently no filtering of the data — what it gets is what goes out — so anything I add to computation there is a 100% increase in work that the plugin has to do.

I'd like to know a bit more about the device in question. You said the data is coming from Home Assistant, so the device has to be putting a big impact on it as well, because HA also maintains a database. What is the device, and why are the updates so frequent?

-

Data comes from a modbus device connected to home assistant, and on a modbus device you can prioritize certain sensors to be near real-time as opposed to non-prioritized sensors where update interval can be minutes. So there isn't too much granularity. Real-time is good, but there are these side effects for disk i/o and/or database burden.

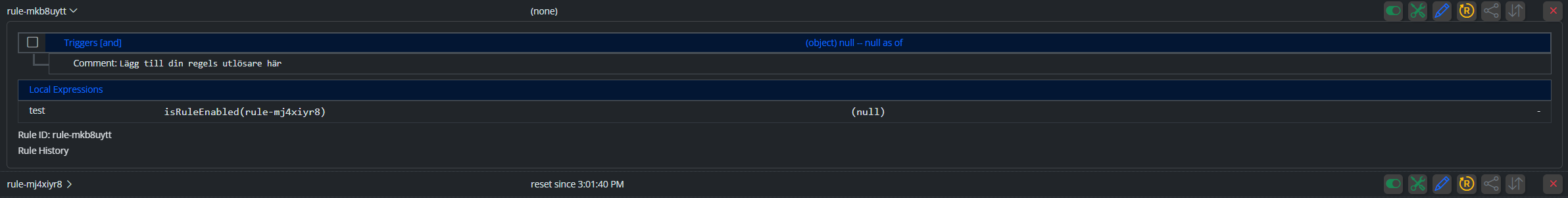

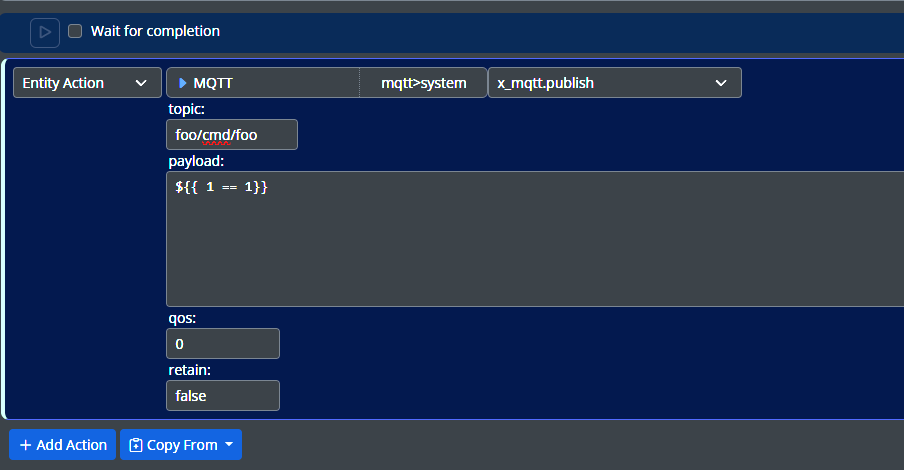

I partly solved this by creating global variables and then rounding them (previously values could fluctuate very rapidly, being something like 140.1, 140.2, 140.1 ...) to integers, then exporting those and filtering original values coming from hass.

-

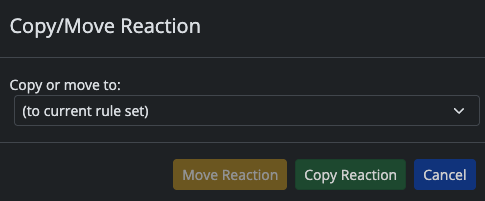

I'm prototyping something that may make a good general solution for this.

-

T toggledbits locked this topic on

T toggledbits locked this topic on