-

A number of folk are running openLuup on Docker...

...and I'm about to try the same.

There are a couple of epic threads in the old place:

https://community.getvera.com/t/openluup-on-synology-via-docker/190108

https://community.getvera.com/t/openluup-on-docker-hub/199649

Much of the heavy lifting appears to have been done by @vwout there (and I've asked if they'll join us here!). There's a great GitHub repository:

https://github.com/vwout/docker-openluup

Hoping to get a conversation going here (and a new special openLuup/Docker section) not least because I know I'm going to need some help!

-

This might be a good place to get an explanation of what exactly a "docker" is. Pros and cons?

From what I've understood, it is running an OS in a box inside another OS? One can probably google it; I haven't bothered, as I'm not planning to use one.

I am by no stretch of the imagination an expert in this area but have had some experience with them.

Here is my assessment on (Docker) containers.

It is an alternative to using virtual machines (VM).

Why would one need a virtual machine?

To isolate an additional operating system within the main “bare metal” operating system. For Linux applications this is particularly important because of dependencies: libraries get developed and updated very regularly and sometimes cause conflicts. One app may require an older version of a library than another, and if you run a lot of apps in the same OS, you can run into a conundrum. Both VMs and containers also offer a lot of benefit with backups and snapshots, as well as portability, compared with running the apps on the host OS.

The main benefit of containers vs. VMs is that you do not need to allocate resources to them (except for storage). When creating a VM, one needs to allocate CPU cores and DRAM, isolating them away from the main OS. With a container, one does not need to do this and can therefore run a lot of them on a limited machine, as resources are shared with the host OS and distributed on an as-needed basis. It does so very efficiently.

The downsides of containers are:

- They are not nearly as flexible and complete as a VM. For example, you can’t run an OS fundamentally different from the host's. It is really a Linux thing.

- What bothers me the most is the complexity of managing updates and upgrades. It is the dark flip side of its benefit: a container is a mini OS in isolation and is extremely limited. Unlike with a VM, which is a full-fledged OS, modifying files within the container and managing the growing number of containers is a headache.

My personal opinion is that it does nothing but move the dependency management problem up one level: from dependencies to containers. I have opted instead to run a couple of VMs where I run all my apps, dividing them according to resources and dependencies, rather than managing a dozen containers. When there is a library update, I go into my 2 VMs and run 2 updates, most of the time without rebooting them. With docker... I would have to kill each container, get into the container file system (which is not trivial), run the update, rebuild the container, and test them one at a time. It is 6-10X more work. Benefit: lower risk, low resource requirements. Inconvenience: a lot of time and complexity to manage, plus a multiplication of container files and storage space, since the environments are 99% redundant. In most cases it is much more complex than managing the dependencies themselves. Containers basically create a mini VM for each program you run in order to control its OS environment.

I use docker containers only when I have to, but in general avoid them like the plague. It is notable that virtual machine managers have also improved over time and now allow dynamic CPU and RAM allocation. It is not as good as the “non-allocation” of containers, but it is progress.

-

I'm sure VMs are more flexible, but I'm not interested in updating the system files, unless absolutely required. The only thing I need to be able to do is to update openLuup's configuration with devices, plugins, and openLuup updates. The vwout/docker-openluup container should make that easier, since the whole openLuup file system is mounted on an external volume (IIRC, this is what allows the context to be maintained across restarts). I'm a Lua kind of a guy, not a Linux one.

-

Not saying containers don’t have a use case. They do:

If for example, all you want to do is run openluup in its own isolated environment on your NAS, then it makes total sense to favor a container over a VM.

If however you have a lot more applications you want to run this way... it gradually becomes more and more absurd.

For example, I run z-way-server, habridge, Mosquitto, a HomeKit bridge and a slew of other applications. Do I want a container for each? (Most exist, by the way.) I am sure it would free up some CPU time and RAM if I did, but it would be a complete nightmare to maintain. This is an example of what Home-Assistant add-ons have become. It’s now completely absurd. The convenience and efficiency benefit goes away and it has actually become quite the opposite.

A couple of examples of the differences:

A lot of people are struggling to install z-way-server, as we have seen on this forum. The problems are always due to dependencies. The one most frequently observed is libcurl: z-way requires libcurl3, which no longer comes with newer Linux distros. The temptation is to build an environment and set up a container for it. Great! It is convenient; people just need to install the container and they are good to go. What I found out is that libcurl4 is actually fully backward compatible. Creating a symbolic link in the /usr/bin folder fixes the problem... On the face of it the container was a great solution, but over time, if you need to update the z-way version or run some security updates to the libraries in it... not so easy.

Another example: say you have openLuup and another program requiring lua5.3. You can set them up in separate containers and update one (program and environment) without affecting the other. Great benefit. You could also run both within the same VM, each calling its own interpreter. If you only have 2 or 3, containers are the way to go. If you have 5, 6, 10? It’s much easier to manage them in a single VM and learn to manage the dependencies.

-

I agree with @rafale77 here, and in that, I'm going to play "Devil's Advocate" a bit here and ask of you, @akbooer, if the openLuup filesystem is all on a separate volume, is the benefit to what remains worth building and maintaining a container distribution? Seems like the container wouldn't really contain much. Since I'm considering docker myself for Multi-system Reactor, reading all of this made me ask why. My answer (for MSR) is that it would ease installation for users of NAS systems and small micros that support docker containers, and this is probably a high-frequency user model. Reducing the administration aspect of getting the product installed and rolling is sufficient reason, and I would even say, the better reason. Configuration control isn't, in my view, much of an issue for openLuup, as its dependencies are relatively few. But as more people try to use the product coming from Vera, few are Linux administration experts, so getting the product up and running with as few clicks (or keystrokes) as possible is definitely a benefit. All I would ask is that it not be constrained in that way (if you want to install direct from GitHub to your VM, you should still be able to do so).

-

@toggledbits said in Moving to Docker:

if the openLuup filesystem is all on a separate volume, is the benefit to what remains worth building and maintaining a container distribution? Seems like the container wouldn't really contain much.

Well, that's exactly right. Just the Lua and necessary libraries, if my (limited) understanding is correct.

@rafale77 said in Moving to Docker:

if you need to update the z-way version or run some security updates to the libraries in it... not so easy.

I wouldn't dream of trying to do that. Whilst my openLuup system would have the ZWay plugin, it wouldn't contain Zwave.me software and it wouldn't be directly connected to the hardware, either.

@toggledbits said in Moving to Docker:

as more people try to use the product coming from Vera, few are Linux administration experts, so getting the product up and running with as few clicks (or keystrokes) as possible is definitely a benefit.

Exactly the point (and indeed I'm no Linux expert.) The container which @vwout has made appears to satisfy this condition.

@toggledbits said in Moving to Docker:

All I would ask is that it not be constrained in that way

To be clear, I'm not proposing to change anything in terms of openLuup. I simply want to decommission some old hardware and consolidate my IT architecture.

-

Hi all, @akbooer asked me to join this community - so far I have only been active on MCV. I am the author of the Docker image for openLuup that is referred to in this thread.

I quickly read the complete thread. Most of the comments contain valid statements.

Personally I would not avoid containers like the plague - in fact, you can't ;). Containers have existed for a long, long time (at least in the Linux kernel) and play an important role in the isolation of processes, networks and filesystems. Docker has made this system significantly easier to use and understand. By now Windows supports containers too, and (in the latest builds even without Hyper-V virtualization) can even run Linux containers. This begins to blur the most significant difference between VMs and containers, namely virtualization (for VMs) versus isolation (for containers). As opposed to VMs, containers are very lightweight (try creating a VM that runs openLuup in under 7MB :D). A container does not contain a kernel and does not have an operating system. It also cannot run on its own.

Maybe some of you have experienced 'DLL hell': the issue where installing one application breaks another, because one overwrites a shared library of the other. This is what you can prevent by packaging applications, and it is one of the reasons things like Snap, Flatpak and Docker emerged.
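
One way to see the "no kernel of its own" point in practice is to compare the kernel release reported by the host with the one reported inside a container. This is only a minimal sketch, assuming a Linux host with Docker installed and the public `alpine` image; the function name `compare_kernels` is made up for illustration.

```shell
# Print the kernel release seen by the host and by a throwaway Alpine container.
# On a Linux host both lines should show the same release, because the
# container shares the host kernel instead of booting its own.
compare_kernels() {
    echo "host:      $(uname -r)"
    echo "container: $(docker run --rm alpine uname -r)"
}
```

Calling `compare_kernels` prints the two values side by side. On Docker Desktop for Windows or macOS the container line would instead show the kernel of the helper Linux VM that Docker runs there.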

So as @toggledbits mentioned, a (well written) container indeed does not contain much. A docker container basically provides the 'batteries included' concept. This makes it very easy to get started with a new application like openLuup. In order to get the same experience with a VM, you'd need a pre-built image, or an automation system like Ansible, Puppet or Chef - tools that not every newbie is likely to master.

There is no general 'better' when it comes to VMs versus containers, and in many cases both are used, each for its own specific benefits, one of which is personal preference.

When you, like @akbooer, want to consolidate your setup on a not very powerful NAS, a container is the way to go.

-

Very pleased that you could join us...

...I hope you find this place more than just another sink of your valuable time! (Let's face it, there are probably enough forums around.)

A number here found their interests diverging from the discussions in the other place, as it abandons Vera and struggles, in much the same old way, with new hardware and firmware. We're not just about openLuup as a replacement for Vera – so much more, in fact, and there are advocates of a number of alternatives, and hybrid systems.

I, for one, will value any contribution you can make here, not least because I really want to get openLuup on Docker up and running.

I've loaded the Docker app, and your (alpine) image, for a start. Somewhat at a loss of what to do next, but I need to read the documentation thoroughly.

Anyway, welcome again.

-

Just excited to have a new valuable member and a new option to install openLuup so that more can use and contribute.

As long as it doesn't become the only way. I was just sharing my experience on containers. Indeed it has its use case, a great way to start. It's not for everyone and like anything, should not be abused...

-

I've been using vwout's Docker image for a while now. I've asked for several additions to it and vwout has been very helpful. I think a Docker container is ideal for me. Problem solving is easier, because the different applications are containerized. It's easy to start an additional dev/test environment, and it needs fewer resources than a complete VM. Updating is also easier, provided the image is actively maintained, of course.
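
For what it's worth, updating a container whose data lives in named volumes is usually a matter of replacing the container rather than patching files inside it. A rough sketch, assuming the container was started with the name `openluup` (a hypothetical name, as is the function name) and with the volume names and port from the `docker run` command quoted elsewhere in this thread:

```shell
# Replace a running openLuup container with one created from the latest image.
# Assumes the container is named "openluup" (hypothetical). The user data
# survives because it lives in the named volumes, not in the container itself.
update_openluup() {
    docker pull vwout/openluup:alpine &&
    docker stop openluup &&
    docker rm openluup &&
    docker run -d --name openluup \
        -v openluup-env:/etc/cmh-ludl/ \
        -v openluup-logs:/etc/cmh-ludl/logs/ \
        -v openluup-backups:/etc/cmh-ludl/backup/ \
        -p 3480:3480 \
        vwout/openluup:alpine
}
```

The `&&` chaining means the old container is only removed if the pull and the stop succeeded, so a failed download leaves the running system alone.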

But of course, everyone has to decide what works best for him/her. Dockers are here to stay, that's for sure. I think you're going to like it, @akbooer.

Thanks for the docker again, @vwout! Great support. I have had no problems with it.

-

Ooh, super – another Docker expert.

I'm really stuck. I think that there's a vital piece of documentation that I haven't found/read. Whether this is Synology, or Docker, or GitHub/vwout, I'm not sure...

- I have Docker installed in Synology

- I have the (alpine) version of openLuup installed and running (apparently)

- but I can't access it at all or work out where the openLuup files go.

I think this is something to do with the method of persisting data:

docker-compose.yml

and whilst I understand that there are (probably) some commands to be run:

docker run -d \
  -v openluup-env:/etc/cmh-ludl/ \
  -v openluup-logs:/etc/cmh-ludl/logs/ \
  -v openluup-backups:/etc/cmh-ludl/backup/ \
  -p 3480:3480 vwout/openluup:alpine

I don't know where that goes either.

So I feel stupid, and stuck. (Nice, in fact, to understand how others feel when approaching something new.)

Any pointers, anyone?

-

@akbooer

I'm far from an expert, but have some experience with it, yes. I haven't composed a container through yaml yet.

You first need to define/create the volumes; that's when you can state where the volumes should be "placed" and where the data resides. This can be a local directory or an NFS mount, for example. By default, it creates a dir in the docker dir.
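
The "state where the volume is placed" step can be sketched with the options of Docker's built-in `local` volume driver. Treat this as an illustration rather than the documented vwout/openluup procedure: the function names are invented here, and the directory, server address and export path are whatever applies on your own system.

```shell
# Create the "openluup-env" volume so that its data lives in a directory you choose.
# $1 = an existing host directory (for example a shared folder on the NAS).
create_env_volume_at() {
    docker volume create --driver local \
        --opt type=none --opt o=bind --opt device="$1" \
        openluup-env
}

# Same idea, but backed by an NFS export: $1 = server address, $2 = exported path.
create_env_volume_nfs() {
    docker volume create --driver local \
        --opt type=nfs --opt o="addr=$1,rw" --opt device=":$2" \
        openluup-env
}
```

For example, `create_env_volume_at /volume1/docker/openluup` would use a folder on the NAS (that path is only a guess at a typical Synology layout). Only one of the two functions should be used for a given volume name, since the name can exist only once.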

If you are not a Linux expert, like me (although I can manage), I would advise installing Portainer first. It gives you a nice GUI for managing your containers. Far easier than doing everything through the command line.

-

@akbooer said in Moving to Docker:

- but I can't access it at all or work out where the openLuup files go.

You are using the synology docker package to create and deploy your container, correct? At minimum, to get the container accessible, change the port settings to 3480(local):3480(container). Then you should be able to access it at http://YourSynologyIP:3480/
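
If you have shell access to the NAS, the port mapping can also be checked from the command line. A small sketch under a couple of assumptions: the function names are invented, the container name is whatever yours is called, and the `id=alive` request is assumed to be answered by openLuup in the same way as on a Vera.

```shell
# Show which host ports a container publishes ($1 = container name or ID).
show_ports() {
    docker port "$1"
}

# Ask openLuup whether it is answering on the published port.
# $1 = address of the NAS; the "id=alive" request is an assumption.
check_openluup() {
    curl -s "http://$1:3480/data_request?id=alive"
}
```

If `show_ports` prints nothing, no port has been published yet, which would explain why the web interface cannot be reached.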

-

@kfxo said in Moving to Docker:

You are using the synology docker package to create and deploy your container, correct?

Yes, that's correct for now. I need also to investigate Portainer, apparently.

@kfxo said in Moving to Docker:

change the port settings to 3480(local):3480(container).

OK, I was looking for where to do that, but I guess, I'll look harder. Thanks.

-

It's MAGIC !

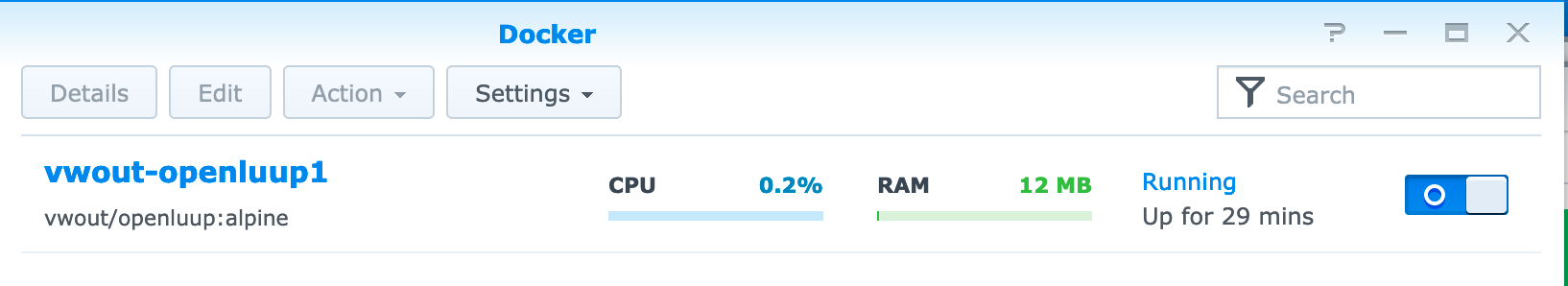

I particularly like the look of this...

This instills a sense of wonder in me... I mean, I know (almost) exactly how openLuup works, but to see it wrapped in a container running on a NAS, seems rather special. "Hats off" ("chapeau?") to those who have engineered this, not least @vwout.

One is never quite finished, however.

I understand that to make any changes last across container/NAS restarts, I need to make the volumes external to the container, as per the above yaml. So this is my next step.

Any further insights welcomed. Perhaps Portainer is that next step?

-

@akbooer said in Moving to Docker:

I understand that to make any changes last across container/NAS restarts, I need to make the volumes external to the container, as per the above yaml. So this is my next step.

Any further insights welcomed. Perhaps Portainer is that next step?

You cannot create named volumes through the Synology GUI. You will need to use Portainer, or you can ssh into your Synology and run the manual commands as outlined by vwout/openluup.

You can also do this manually. Start by creating docker volumes:

docker volume create openluup-env
docker volume create openluup-logs
docker volume create openluup-backups

Create an openLuup container, e.g. based on Alpine linux and mount the created (still empty) volumes:

docker run -d \
  -v openluup-env:/etc/cmh-ludl/ \
  -v openluup-logs:/etc/cmh-ludl/logs/ \
  -v openluup-backups:/etc/cmh-ludl/backup/ \
  -p 3480:3480 \
  vwout/openluup:alpine

https://github.com/vwout/docker-openluup
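
Once the named volumes exist, Docker can be asked where they actually live on the host, which is one way to find out "where the openLuup files go". A minimal sketch using `docker volume inspect`; the two function names are made up for the example.

```shell
# Print the host directory that backs a named volume ($1 = volume name).
volume_path() {
    docker volume inspect --format '{{ .Mountpoint }}' "$1"
}

# List the host paths of the three openLuup volumes created above.
openluup_volume_paths() {
    for v in openluup-env openluup-logs openluup-backups; do
        echo "$v -> $(volume_path "$v")"
    done
}
```

The paths printed by `openluup_volume_paths` are managed by Docker, so it is probably safest to treat them as read-only and make changes through openLuup itself or through a mounted share.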

I've been doing other projects the past few weeks, and have now started seeing sluggish behaviour in the system. I SSH'ed in and noticed that the hard drive was completely full!

I had some other issues as well that caused it to fill up, but I noticed that removing the openluup container freed up 1.5GB!

This is obviously not the persistent storage folder (cmh-ludl), so what else can do this?

On the base system, it showed up as a massive /overlay2 folder in the /var/lib/docker/..

Maybe @vwout knows some docker hints on this?
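
One possible explanation is the container's own writable layer: with the overlay2 storage driver, anything a container writes outside a mounted volume is stored under overlay2 and is deleted together with the container. The commands below are a sketch for narrowing that down; the wrapper function names are invented, the `docker` subcommands themselves are standard.

```shell
# Summarise what Docker uses disk space for (images, containers, volumes, cache).
docker_disk_summary() {
    docker system df
}

# List all containers together with the size of their writable layer.
container_sizes() {
    docker ps --all --size
}

# Show files added (A) or changed (C) inside a container since it was created.
# $1 = container name or ID.
container_changes() {
    docker diff "$1"
}
```

If `container_changes` shows a directory that keeps growing, mounting a volume at that path (or cleaning it up from inside openLuup) should stop the container layer from filling the disk.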

I've now gotten most of my applications/components into the docker system, only one left is influxdb.

I already have the database files on an external SSD, and the plan was to have a volume for InfluxDB (which holds the .conf file), and a bind mount to the database folder.

These are, however, not the only InfluxDB files that need to be persistent: when I start the container, influx has no information about the databases, even though I know the database folder is available.

Anyone here know where influxDB stores database setup info? influxdb.conf just enables stuff and sets folders, the info on existing databases and settings is stored elsewhere.
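
If this is an InfluxDB 1.x install (the influxdb.conf suggests so), the list of databases, users and retention policies is kept in a separate meta directory, set under `[meta]` in the config and by default `/var/lib/influxdb/meta`, next to the `data` and `wal` directories. A hedged sketch that persists the whole state directory instead of only the data folder; it assumes the official `influxdb:1.8` image with its default directory layout, and the function name and container name are illustrative.

```shell
# Start InfluxDB 1.x with its whole state directory kept on the host.
# $1 = host directory that contains (or will contain) data/, meta/ and wal/.
# Assumes the official influxdb:1.8 image and its default /var/lib/influxdb layout.
run_influxdb() {
    docker run -d --name influxdb \
        -p 8086:8086 \
        -v "$1":/var/lib/influxdb \
        influxdb:1.8
}
```

For this to pick up the existing databases, the old installation's `meta` folder would need to sit alongside `data` and `wal` in the directory passed as `$1`.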

(should the docker forum be under software, not openluup?)

As part of my hardware infrastructure revamp (a move away from 'hobby' platforms to something a bit more solid) I've just switched from running Grafana (a very old version) on a BeagleBone Black (similar to RPi) to my Synology NAS under docker.

The old system was on the same platform as my main openLuup instance, whereas with the new system the data has to be shipped across my LAN. Despite this (or, perhaps, because of it), it all seems to work much faster.

I had been running Grafana v3.1.1, because I couldn't upgrade on the old system, but I'm now at 7.3.7, which seems to be the latest Docker version. The UI is a bit different from the old one, and it needed next to no configuration, apart from importing the old dashboard settings.

One thing I'm missing is that the old system had a pull-down menu to switch between dashboards, but the new one doesn't seem to have that, since it switches to a whole new page with a list of dashboards. This doesn't work too well on an iPad, since it brings up the keyboard and obscures half the choices. Am I missing something obvious here?

Thanks for any suggestions.