@tamorgen said in Condition for trend:

> I guess the second condition resets the Latched condition, so both conditions aren't true at the same time?

Correct. Both conditions cannot be true at the same time.

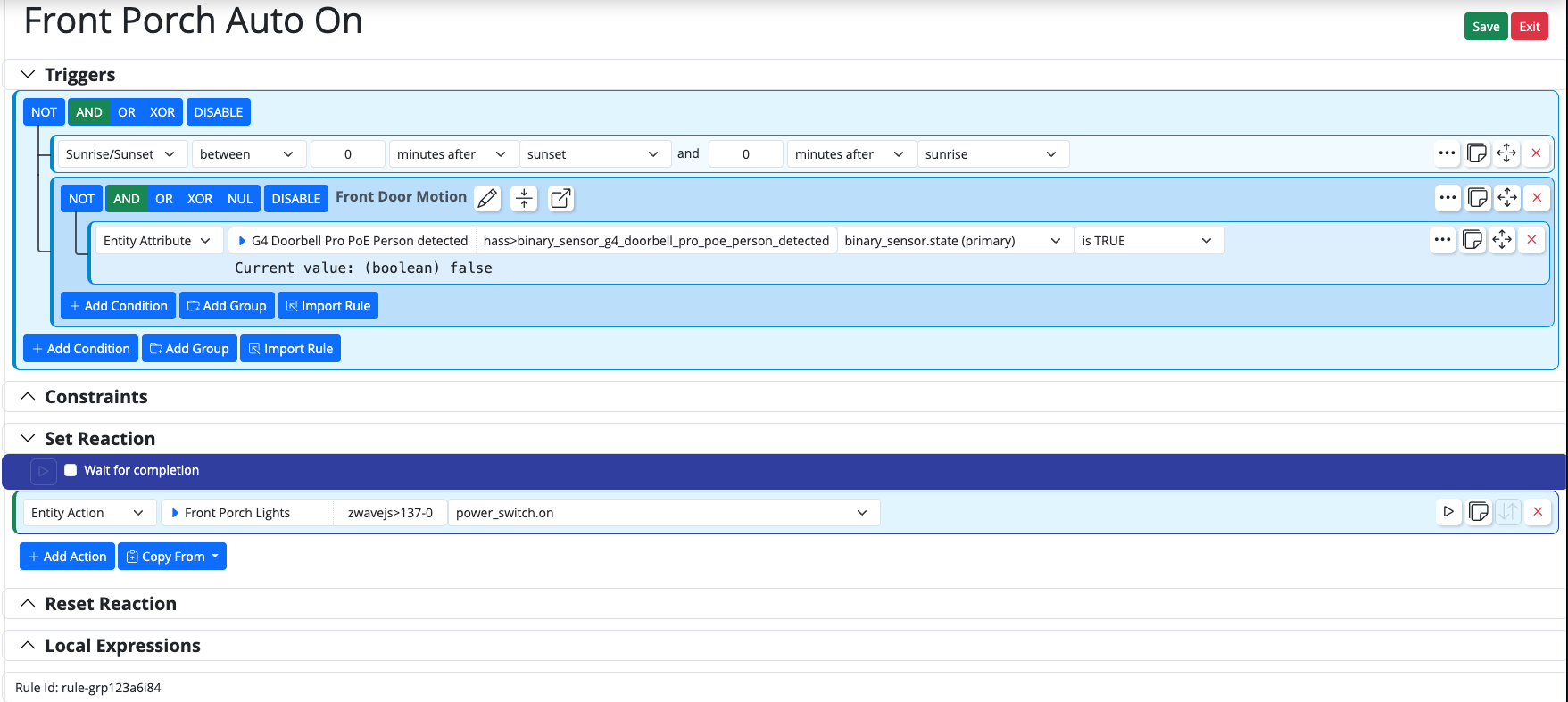

Your relatively simple logic here doesn't need a latch. Just use two rules, one to turn the fan on at humidity >= 70, and the other to turn it off when humidity <= 65.
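To make the two-rule approach concrete, here is a minimal Python sketch. It is not Reactor code, and the function names are hypothetical. It models the net fan state when one rule turns the fan on at >= 70 and another turns it off at <= 65. The gap between the two thresholds keeps the fan from cycling on and off around a single value.

```python
# Hypothetical model of two independent rules (not Reactor's engine).
# Thresholds from the post: fan on at >= 70 %RH, fan off at <= 65 %RH.
ON_THRESHOLD = 70.0
OFF_THRESHOLD = 65.0


def apply_rules(humidity: float, fan_on: bool) -> bool:
    """Return the fan state after evaluating both rules for one reading."""
    if humidity >= ON_THRESHOLD:   # Rule 1: turn the fan on
        return True
    if humidity <= OFF_THRESHOLD:  # Rule 2: turn the fan off
        return False
    return fan_on                  # Between 65 and 70 neither rule acts


def simulate(readings):
    """Feed a sequence of humidity readings and record the fan state after each."""
    fan_on = False
    states = []
    for rh in readings:
        fan_on = apply_rules(rh, fan_on)
        states.append(fan_on)
    return states


# Humidity rises past 70, then falls back through the band to 65.
print(simulate([60, 68, 71, 68, 66, 65, 68]))
```

The fan comes on at 71, stays on at 68 and 66 because neither rule fires inside the band, and goes off at 65.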

If you insist on a latch in one rule, then you need to pair the latching condition with a condition that stays true until it's time to reset it. Really, all you need to do is reverse the operator in your second condition: it should be humidity >= 65 (and this second condition should not be latched).

As the humidity rises, the second condition goes true before the first (latching) condition, but it won't Set the rule because both must be true. When the humidity reaches 70, the first condition goes true, so both are now true, and the Set Reaction runs to turn the fan on. As the humidity comes down below 70, the first condition becomes logically false but remains in the true state because it is latched, while the second condition also remains true (i.e. the humidity hasn't yet reached the low cutoff). When the humidity drops below 65, the second condition goes false, which resets the latch on the first condition. The Reset Reaction then runs and turns the fan off.
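The latched version can be modeled the same way. This is a rough sketch under my own reading of the behavior described above (a latched condition stays true until its AND group goes false); the class and method names are hypothetical and are not Reactor's API. `update()` returns `"set"` or `"reset"` on the readings where the Set or Reset Reaction would run.

```python
class LatchedFanRule:
    """Hypothetical model of one rule with two ANDed conditions:
    condition 1: humidity >= set_at      (latched)
    condition 2: humidity >= hold_above  (not latched)
    """

    def __init__(self, set_at: float = 70.0, hold_above: float = 65.0):
        self.set_at = set_at
        self.hold_above = hold_above
        self.latched = False     # latched state of condition 1
        self.rule_state = False  # whether the rule is currently set

    def update(self, humidity: float):
        """Evaluate one reading; return "set", "reset", or None."""
        # Condition 1 latches as soon as it is logically true.
        if humidity >= self.set_at:
            self.latched = True
        # Condition 2 simply follows the current reading.
        cond2 = humidity >= self.hold_above
        rule_true = self.latched and cond2
        # When the AND group goes false, the latch on condition 1 is released.
        if not rule_true:
            self.latched = False
        event = None
        if rule_true and not self.rule_state:
            event = "set"    # Set Reaction: turn the fan on
        elif not rule_true and self.rule_state:
            event = "reset"  # Reset Reaction: turn the fan off
        self.rule_state = rule_true
        return event


rule = LatchedFanRule()
events = [rule.update(rh) for rh in [60, 66, 71, 68, 66, 64, 68]]
print(events)
```

At 66 on the way up only condition 2 is true, so nothing happens; at 71 the rule sets; at 68 and 66 the latch holds it; at 64 condition 2 fails, the latch releases, and the rule resets.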

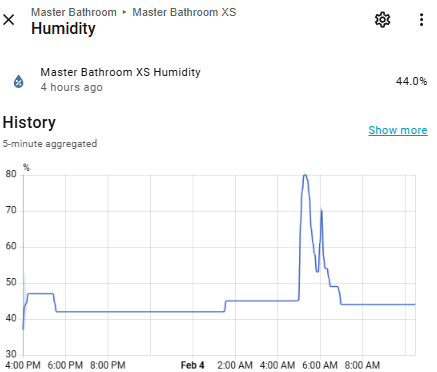

That said, your indoor humidity varies seasonally and with local changes in weather, so fixed thresholds like these may not work out very well over the course of a year. I've tried. That's why I added aggregates/trending to VirtualEntityController. You can have VEC create a rate aggregate over 5 minutes. You'd need to "tune" the rate value tested in the condition, but as a starting point, let's say that if the rate of change exceeds 2.0, your rule turns the fan on. The unit VEC reports for rate is % humidity per minute, so 2.0 equates to about a 10% (positive) change over the five-minute sampling period.

It may be a little trickier figuring out when to turn the fan off. As a practical matter, I've found that running the fan for a fixed 30 minutes once triggered is a simplifying assumption that works out perfectly in my bathroom, but you could also look at rate (e.g. a rate of change below 0.0167, about 1% per hour, sustained for 15 minutes). In any case, I would always add a time component to the run of exhaust fans, because these simple fans aren't built for long runtimes (bathroom exhaust fans have started fires from running too long).
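The rate trigger plus a timed run can also be sketched in Python. This is an illustration only, not how VEC computes its aggregate: I'm assuming the rate is simply the change between the oldest and newest sample in a 5-minute window, divided by the elapsed minutes. The constants mirror the starting values above, and the class name is hypothetical.

```python
from collections import deque

WINDOW_S = 300       # 5-minute window (matches retention: 300 in the config)
RATE_TRIGGER = 2.0   # %RH per minute; a starting point that needs tuning
RUN_S = 30 * 60      # fixed 30-minute run once triggered


class RateTriggeredFan:
    """Hypothetical rate-of-change trigger with a fixed maximum run time."""

    def __init__(self):
        self.samples = deque()  # (timestamp in seconds, humidity %RH)
        self.fan_off_at = None  # time the timed run ends, or None if fan is off

    def rate_per_minute(self) -> float:
        # Assumed rate: (newest - oldest) / elapsed minutes within the window.
        if len(self.samples) < 2:
            return 0.0
        (t0, h0), (t1, h1) = self.samples[0], self.samples[-1]
        if t1 == t0:
            return 0.0
        return (h1 - h0) / ((t1 - t0) / 60.0)

    def update(self, t: float, humidity: float) -> bool:
        """Add a reading taken at time t; return True while the fan should run."""
        self.samples.append((t, humidity))
        # Drop samples older than the window.
        while self.samples and t - self.samples[0][0] > WINDOW_S:
            self.samples.popleft()
        rate = self.rate_per_minute()
        if self.fan_off_at is None and rate > RATE_TRIGGER:
            self.fan_off_at = t + RUN_S  # start the timed run
        elif self.fan_off_at is not None and t >= self.fan_off_at:
            self.fan_off_at = None       # time is up: fan off regardless of humidity
        return self.fan_off_at is not None


fan = RateTriggeredFan()
# One reading per minute: steady at 50 %RH, then a shower starts.
for i, rh in enumerate([50, 50, 50, 53, 56, 59, 62]):
    print(i, rh, round(fan.rate_per_minute(), 2), fan.update(i * 60, rh))
```

With one-minute samples, the rate crosses 2.0 only once the window spans a sustained rise (12% over 5 minutes gives 2.4), and the fan then runs for 30 minutes no matter what the humidity does, which is the safety cap recommended above.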

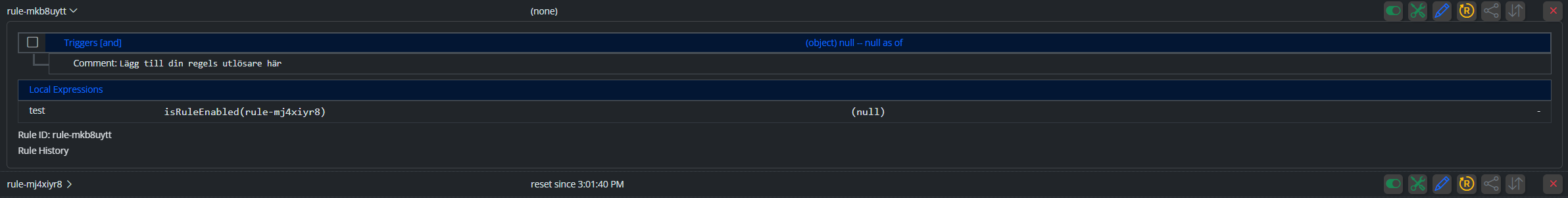

```yaml
controllers:
  - id: virtual
    enabled: true
    implementation: VirtualEntityController
    name: Virtual Entity Controller
    config:
      entities:
        - id: "master_bath_rh_rate"
          name: "Master Bath Humidity Change Rate"
          type: ValueSensor
          capabilities:
            value_sensor:
              attributes:
                value:
                  model: time series
                  aggregate: rate
                  entity: "mqtt>shelly_handt3"
                  attribute: "humidity_sensor.value"
                  interval: 150
                  retention: 300
                  precision: 3
          primary_attribute: "value_sensor.value"
```

Raspberry Pi 4 dual RAM variant introduced to mitigate RAM price increases and supply challenges - CNX Software