[Solved] latest-22328 restart fails

-

Solution: An update to openLuup's MQTT implementation, which changed how it handles acknowledgement packets for QoS > 0, solved this issue.

EDIT: This seems related to any restart, even without configuration changes. If I revert to 22310, I can restart Reactor from the UI and with systemd within seconds, but with 22328 it fails to restart, both from the UI and from systemd. I have to stop the service and then start it again.

I tested commenting out my http (not https) `baseurl` in the config on my bare-metal Ubuntu install of latest-22328 and triggered a restart from the UI, but Reactor would not start after that.

If I uncomment the key and restart the service, Reactor comes back to life.

Is my setup an exceptional circumstance, or is this only applicable on new installs?

-

It's working for me, and I've done some fresh installs of 22328 while testing alternatives to Raspberry Pi (a couple of promising boards so far). I hate to say it, but your post is right on the line of "I tried X and it didn't work for me," so without more detail, I can't really guide you.

-

@toggledbits said in latest-22328 and baseurl [EDIT]: restarts fail:

It's working for me

At first, this is all I wanted to know: whether anyone else was having the same issue or whether it's just my setup.

Sorry for the lack of details, I did not know what else to provide as the log is dead silent after shutting down...

I will debug further.

-

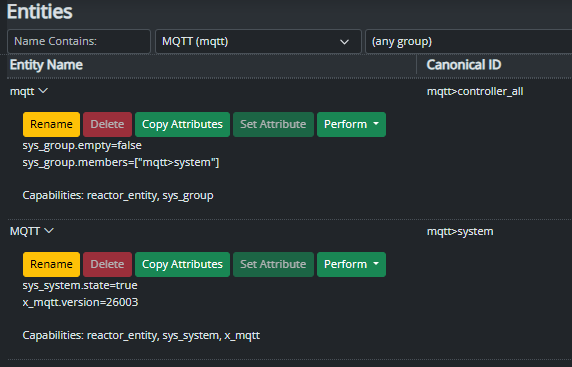

Looks like it's related to openLuup's MQTT server. The shutdown process hangs after sending LWT to openLuup (MQTTController#mqtt in log).

    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.775Z <app:NOTICE> Closing Structure...
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.776Z <Structure:INFO> Structure#1 Stopping controllers...
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.776Z <Controller:NOTICE> VeraController#vera stopping
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.778Z <Controller:ERR> Controller VeraController#vera is off-line!
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.799Z <EzloController:NOTICE> EzloController#ezlo stopping
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.800Z <wsapi:WARN> client close from unknown connection? "192.168.1.2#5"
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.800Z <wsapi:WARN> client close from unknown connection? "192.168.1.238#4"
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.800Z <wsapi:WARN> client close from unknown connection? "192.168.1.238#3"
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.800Z <wsapi:WARN> client close from unknown connection? "192.168.1.238#2"
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.800Z <wsapi:WARN> client close from unknown connection? "192.168.1.2#1"
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.802Z <EzloController:NOTICE> EzloController#ezlo connection closed: 1000 closing
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.804Z <Controller:ERR> Controller EzloController#ezlo is off-line!
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.804Z <Controller:NOTICE> EzloController#ezlo stopping
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.806Z <DynamicGroupController:null> DynamicGroupController#groups stopping
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.806Z <Controller:NOTICE> DynamicGroupController#groups stopping
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.808Z <Controller:ERR> Controller DynamicGroupController#groups is off-line!
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.809Z <HassController:NOTICE> HassController#hass stopping
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.810Z <HassController:NOTICE> HassController#hass websocket closing, 1000
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.810Z <Controller:NOTICE> HassController#hass stopping
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.811Z <Controller:ERR> Controller HassController#hass is off-line!
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.823Z <Controller:NOTICE> OWMWeatherController#weather stopping
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.824Z <Controller:ERR> Controller OWMWeatherController#weather is off-line!
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.825Z <Controller:NOTICE> SystemController#reactor_system stopping
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.826Z <Controller:ERR> Controller SystemController#reactor_system is off-line!
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.827Z <MQTTController:NOTICE> MQTTController#mosquitto-mqtt stopping, sending LWT
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.870Z <MQTTController:NOTICE> LWT sent; closing broker connection
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.870Z <Controller:NOTICE> MQTTController#mosquitto-mqtt stopping
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.871Z <Controller:ERR> Controller MQTTController#mosquitto-mqtt is off-line!
    Nov 26 10:45:58 homebridge node[686832]: [latest-22328]2022-11-26T09:45:58.874Z <MQTTController:NOTICE> MQTTController#mqtt stopping, sending LWT
    Nov 26 10:46:03 homebridge node[686832]: [latest-22328]2022-11-26T09:46:03.452Z <httpapi:INFO> HTTP server closed.

If I disable that controller, Reactor restarts fine as usual.

@toggledbits Is this the moment openLuup MQTT support ends in Reactor or can I change something to make it work again?

@akbooer I've updated openLuup from 22.9.3 to 22.11.22, but that did not resolve this issue.

The thing is, it runs great once it finally comes up again after the long wait of a forced restart with systemd, but it doesn't feel right to force-kill the process even though the shutdown process is almost finished.

-

@crille said in latest-22328 restart fails:

Looks like it's related to openLuups MQTT server. The shutdown process hangs after sending LWT to openLuup (MQTTController#mqtt in log).

I think we've seen an issue like that previously. I don't think I see that line in the log you posted?

There may be a problem with retained messages (i.e. LWT) and wildcard subscriptions in the openLuup server... I'd have to check.

-

I use a popular package to handle the MQTT broker connection, so I can't see the innards of communications and confirm, but from the rhythm of the log output, it appears that the broker is not sending an ACK to the publish of the LWT; the `publish()` call appears to be sitting there waiting for it.

-

22310 still required an ACK, in a sense. It didn't wait for it. But because the ACK never arrived, the task in the `mqtt` package also never cleared (they don't have a time-out mechanism), so it just stayed in the queue forever... as did every other topic sent with a non-zero QoS. This actually causes a memory leak that could lead to exhaustion and a crash: the ACK never comes, so the task is never removed from the queue, and those tasks remain and proliferate.

IMO, it's fine if @akbooer doesn't truly support QoS levels 1 and 2 in his MQTT implementation just for basic use, but not sending an ACK regardless isn't the right choice, in my view, and it's going to cause problems for a lot of clients that may not be immediately evident (like memory leaks/exhaustion).
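The leak described above comes from in-flight entries that nothing ever clears. As a minimal sketch of that idea (in Python for brevity; `PendingAcks` and its methods are hypothetical names, and this is not the `mqtt` package's code), an in-flight table with a deadline per entry might look like the following. The time-out is an addition for illustration, since the post says the package has none.

```python
import time


class PendingAcks:
    """Tracks QoS > 0 publishes that are still waiting for an ACK.

    Hypothetical helper for illustration only.
    """

    def __init__(self, timeout_s, clock=time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock
        self.pending = {}  # packet_id -> (topic, deadline)

    def sent(self, packet_id, topic):
        # Record a publish that expects a PUBACK/PUBREC.
        self.pending[packet_id] = (topic, self.clock() + self.timeout_s)

    def acked(self, packet_id):
        # Returns True if the ACK matched an outstanding publish.
        return self.pending.pop(packet_id, None) is not None

    def expire(self):
        # Drop entries whose deadline has passed and return their packet IDs.
        now = self.clock()
        expired = [pid for pid, (_, deadline) in self.pending.items()
                   if deadline <= now]
        for pid in expired:
            del self.pending[pid]
        return expired
```

Without the `expire()` step, every unacknowledged publish stays in `pending` indefinitely, which is the growth pattern the post attributes to a broker that never acknowledges.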

-

@toggledbits said in latest-22328 restart fails:

I think it's fine if @akbooer doesn't truly support QoS levels 1 and 2 in his MQTT implementation just for basic use

It only supports QoS 0.

Nevertheless, the protocol should be respected. AFAIK all PUBLISH requests receive an ACK unless the connection goes down in between times.

-

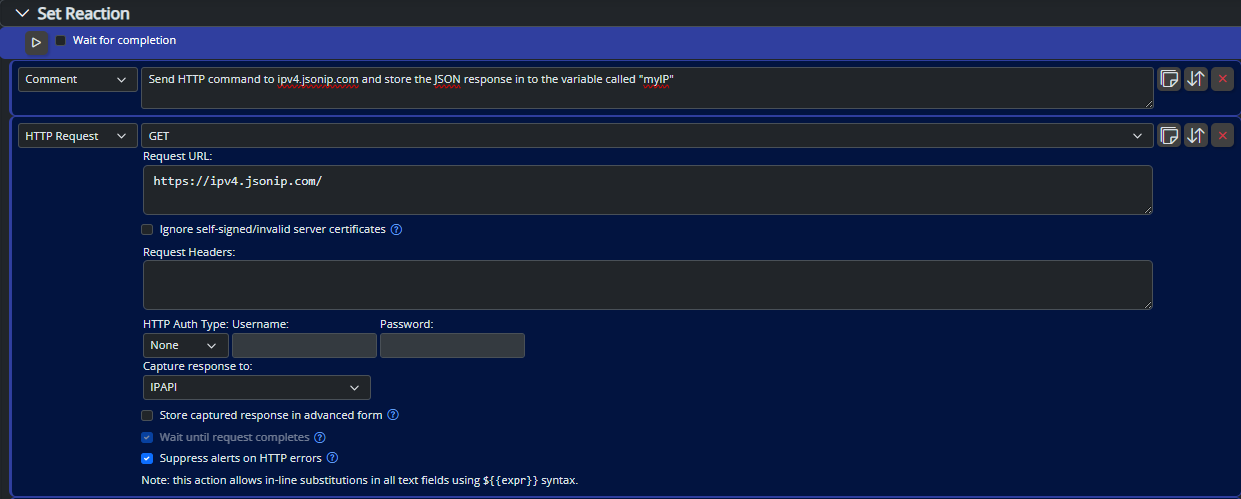

This is the end of `parse.PUBLISH()`:

      -- ACKNOWLEDGEMENT
      -- The receiver of a PUBLISH Packet MUST respond according to Table 3.4 - Expected Publish Packet
      -- response as determined by the QoS in the PUBLISH packet [MQTT-3.3.4-1]
      --[[
        Table 3.4 - Expected Publish Packet response
        QoS Level   Expected Response
        QoS 0       None
        QoS 1       PUBACK Packet
        QoS 2       PUBREC Packet
      --]]

      local ack   -- None, because we only handle QoS 0

      return ack, nil, TopicName, ApplicationMessage, RETAIN
    end

Comments to the contrary, it appears it returns `ack`, which is declared but `nil`... so... no ACK?

-

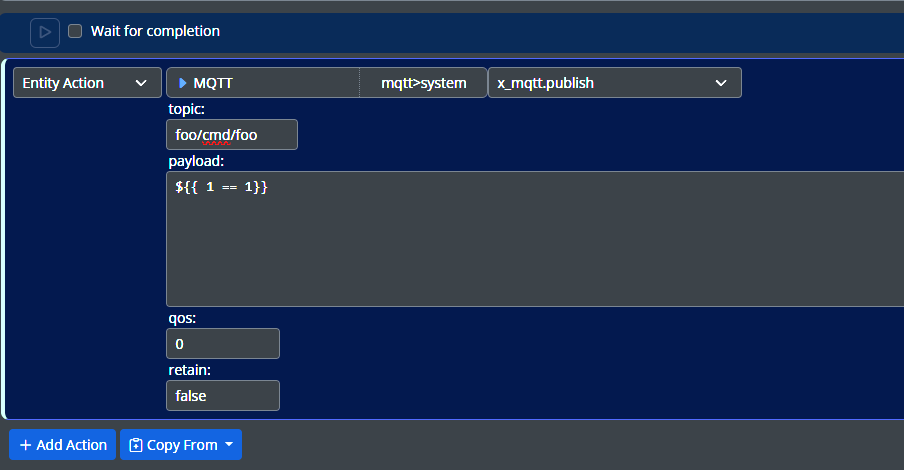

@toggledbits does Reactor publish its LWT message with QoS > 0 even though the MQTTController config is at `qos: 0`? Otherwise the expected response would be none.

-

Yes, it uses QoS 1 (and retain true) because it's a "vital" message. The `qos` you can set in config is for the echo/entity publish functionality; it does not affect other messages. Still, this only requires that the broker acknowledge its receipt (3.3.4), not any delivery, and does not even enforce that QoS on subscribers (3.8.3).

-

"The receiver of a PUBLISH Packet MUST respond according to Table 3.4 - Expected Publish Packet response as determined by the QoS in the PUBLISH Packet."

So even though the server only supports QoS 0 it's obligated to send a PUBACK on a QoS 1 level packet as described in table 3.4, correct?

-

Yes, PUBACK for QoS 1, and PUBREC for QoS 2. I don't think that would be a big problem for @akbooer when he gets the time, because all of the information contained in the response can be sourced from the request (i.e. topic, packet identifier, etc.). And otherwise the treatment of the PUBLISH packet can be the same (no further changes beyond sending those ACKs). Not actually having guaranteed delivery behind that is, in my view, an acceptable variance. On the sending side (repeat to subscribers), even though a subscriber may request QoS 1 or 2 for packets from the broker, it still must accept QoS 0 packets (because the requested QoS is a maximum, not an absolute), so everything outbound at QoS 0 isn't likely going to cause problems, especially in this world.

To summarize: if he just provides the PUBACK and PUBREC responses to fix that layer of the protocol, that's good enough. No further actions required above that layer. Not fully compliant (no guaranteed delivery), but at that point, few if any would ever notice.
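As a rough illustration of how little the broker needs to send back, here is a sketch in Python (not openLuup's Lua, and not its actual code) that builds the response bytes for an incoming PUBLISH. The function name `ack_for_publish` is hypothetical. In MQTT 3.1.1 both PUBACK and PUBREC are four bytes: a fixed-header byte, a remaining length of 2, and the 16-bit packet identifier copied from the PUBLISH.

```python
import struct

PUBACK = 0x40  # packet type 4 in the high nibble, flags 0
PUBREC = 0x50  # packet type 5 in the high nibble, flags 0


def ack_for_publish(qos, packet_id):
    """Return the bytes to send back for a PUBLISH, or None for QoS 0.

    Hypothetical helper; follows Table 3.4 of the MQTT 3.1.1 spec.
    """
    if qos == 0:
        return None  # QoS 0 publishes carry no packet identifier and get no reply
    if qos not in (1, 2):
        raise ValueError("QoS must be 0, 1 or 2")
    if not 1 <= packet_id <= 0xFFFF:
        raise ValueError("packet identifier must be a non-zero 16-bit value")
    packet_type = PUBACK if qos == 1 else PUBREC
    # Fixed header byte, remaining length (2), packet identifier big-endian.
    return struct.pack("!BBH", packet_type, 2, packet_id)
```

For example, `ack_for_publish(1, 10)` yields `b"\x40\x02\x00\x0a"`. Note that a QoS 2 sender follows the PUBREC with a PUBREL and expects a PUBCOMP in return, so a broker answering QoS 2 publishes would need to handle that step as well.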

The other question I don't have an answer for (or I've forgotten; and haven't dug through code to figure out)... @akbooer, does it support retain? If so, what's the storage mechanism, and is it persistent?

-

Well, I must be reading the MQTT 3.1.1 spec all wrong.

When I read:

"The SUBACK Packet sent by the Server to the Client MUST contain a return code for each Topic Filter/QoS pair. This return code MUST either show the maximum QoS that was granted for that Subscription or indicate that the subscription failed [MQTT-3.8.4-5]. The Server might grant a lower maximum QoS than the subscriber requested. The QoS of Payload Messages sent in response to a Subscription MUST be the minimum of the QoS of the originally published message and the maximum QoS granted by the Server. The server is permitted to send duplicate copies of a message to a subscriber in the case where the original message was published with QoS 1 and the maximum QoS granted was QoS 0 [MQTT-3.8.4-6]."

...I understood it to mean that, since I only ever grant QoS 0, then no message would require a PUBACK or a PUBREC.

I realise that this is my bad for writing my own MQTT broker, but it made sense in the context of openLuup, especially in support of Shelly devices which was the reason I did it in the first place. I apologise if this has led to some difficulties, and I'm starting to look at an MQTT validation suite to check out my implementation further. I also realize that Mosquitto is the de-facto standard, but it turns out that having an internal server confers some significant benefits in terms of the internal openLuup architecture.

However, if anyone can clarify the QoS response issue further, I'm very happy to comply. If a simple fix is to send PUBACK or PUBREC, then I'll do it, but I want to know the reason why. I do understand that the original CONNECT request contains a LWT QoS, per this paragraph:

3.1.2.6 Will QoS

"Position: bits 4 and 3 of the Connect Flags."

"These two bits specify the QoS level to be used when publishing the Will Message."

"If the Will Flag is set to 0, then the Will QoS MUST be set to 0 (0x00) [MQTT-3.1.2-13]."

"If the Will Flag is set to 1, the value of Will QoS can be 0 (0x00), 1 (0x01), or 2 (0x02). It MUST NOT be 3 (0x03) [MQTT-3.1.2-14]."

...but I had assumed that QoS to be overridden by the actual level established in SUBSCRIBE / SUBACK. However, now that I write that, it seems the LWT actually may have a separate life from standard messages?
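To make the quoted bit positions concrete, here is a small Python sketch that decodes the Will fields from the CONNECT flags byte and applies the two quoted rules. The function name `parse_will_flags` is hypothetical, and this is only an illustration, not openLuup's parser.

```python
def parse_will_flags(connect_flags):
    """Decode the Will-related bits of the MQTT 3.1.1 CONNECT flags byte.

    Hypothetical helper. Bit 2 is the Will Flag, bits 4 and 3 are the
    Will QoS, and bit 5 is Will Retain.
    """
    will_flag = bool(connect_flags & 0x04)
    will_qos = (connect_flags >> 3) & 0x03
    will_retain = bool(connect_flags & 0x20)

    if will_qos == 3:
        raise ValueError("Will QoS must not be 3 [MQTT-3.1.2-14]")
    if not will_flag and will_qos != 0:
        raise ValueError("Will QoS must be 0 when Will Flag is 0 [MQTT-3.1.2-13]")

    return {"will_flag": will_flag, "will_qos": will_qos, "will_retain": will_retain}
```

These bits govern the Will Message that the broker publishes on the client's behalf. In the log earlier in the thread, Reactor appears to send its LWT as an ordinary PUBLISH during shutdown, so for the ACK question it is the QoS in that PUBLISH packet's own header that matters.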

-

@toggledbits said in latest-22328 restart fails:

The other question I don't have an answer for (or I've forgotten; and haven't dug through code to figure out)... @akbooer, does it support retain? If so, what's the storage mechanism, and is it persistent?

Yes, it supports retained messages.

Is it persistent? Not across openLuup restarts.

The openLuup console page: openLuupIP:3480/console?page=mqtt shows all current subscriptions and also (at the bottom) retained messages.

-

@akbooer said in latest-22328 restart fails:

...I understood it to mean that, since I only ever grant QoS 0, then no message would require a PUBACK or a PUBREC.

This section is about your response to a SUBSCRIBE (the SUBACK) and what QoS you use to PUBLISH to subscribers. If you only grant QoS 0 on subscribe, then you will never expect a PUBACK or PUBREC, because you never publish anything to a subscriber at a QoS other than 0.

It in no way limits what a client may publish to the broker, which could include any QoS.
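The two directions can be kept apart with a short Python sketch. The function name `route_publish` and its return shape are hypothetical, chosen only to show that the ACK owed to the publisher depends on the incoming PUBLISH, while the outbound QoS depends on what was granted in the SUBACK.

```python
EXPECTED_RESPONSE = {0: None, 1: "PUBACK", 2: "PUBREC"}  # Table 3.4


def route_publish(publish_qos, granted_qos):
    """Return (ack_to_publisher, outbound_qos, ack_expected_from_subscriber).

    Hypothetical helper for illustration only.
    """
    # Inbound side: determined only by the QoS in the received PUBLISH.
    ack_to_publisher = EXPECTED_RESPONSE[publish_qos]
    # Outbound side: minimum of published QoS and the QoS granted in SUBACK.
    outbound_qos = min(publish_qos, granted_qos)
    ack_from_subscriber = EXPECTED_RESPONSE[outbound_qos]
    return ack_to_publisher, outbound_qos, ack_from_subscriber
```

With a broker that only ever grants QoS 0, `route_publish(1, 0)` gives `("PUBACK", 0, None)`: the broker still owes the publisher a PUBACK, but forwards at QoS 0 and expects nothing back from the subscriber.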