Help with Luup Reloads

-

Recently I have been getting constant reloads on my Vera Plus running the latest 7.32 beta build. It usually starts after a 3-4 day Luup uptime and the reloads start at around 5am local time and keep going every 30 mins until I physically restart my Vera Plus (unplugging and plugging it back in). Right before a reload I see on the status bar above the modes on the homepage "cannot write user data" then after a few minutes a reload occurs. Looking into the logs I have seen mention of

03 06/21/21 8:53:18.102 JobHandler_LuaUPnP::Reload: low memory Critical 1 m_bCriticalOnly 0 dirty data 1 running 1 <0x76aa7520>almost every single time a reload happens. I have attached logs from 2 instances this morning where a reload happened. I have noticed that the OpenSprinkler plugin has been very chatty even though I have set debugging to 0, is that what is filling up temp log system?01 06/21/21 8:45:58.123 IOPort::Connect connect -1 192.168.8.43:23 <0x6a148520> 02 06/21/21 8:45:59.031 UserData::CommitToDatabase data size 1244271 1244271 <0x7662b520> 01 06/21/21 8:45:59.333 UserData::WriteUserData saved--before move File Size: 234865 save size 234865 <0x7662b520> 02 06/21/21 8:45:59.333 UserData::TempLogFileSystemFailure start 0 <0x7662b520> 02 06/21/21 8:45:59.334 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x7662b520> 02 06/21/21 8:45:59.334 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x7662b520> 01 06/21/21 8:45:59.341 UserData::WriteUserData failed File Size: 234865 save size 234902 <0x7662b520> 02 06/21/21 8:45:59.342 UserData::TempLogFileSystemFailure start 1 <0x7662b520> 02 06/21/21 8:45:59.344 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x7662b520> 01 06/21/21 8:45:59.632 UserData::WriteUserData failed result:1 size2:234865 vs 234865 <0x7662b520> 02 06/21/21 8:45:59.632 UserData::TempLogFileSystemFailure start 1 <0x7662b520> 02 06/21/21 8:45:59.634 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x7662b520> 02 06/21/21 8:45:59.635 UserData::TempLogFileSystemFailure start 0 <0x7662b520> 02 06/21/21 8:45:59.635 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x7662b520> 02 06/21/21 8:45:59.636 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x7662b520> 06 06/21/21 8:45:59.636 Device_Variable::m_szValue_set device: 1 service: urn:micasaverde-com:serviceId:ZWaveNetwork1 variable: NetStatusID was: 1 now: 2 #hooks: 0 upnp: 0 skip: 0 v:0x1099d38/NONE duplicate:0 <0x7662b520> 06 06/21/21 8:45:59.637 Device_Variable::m_szValue_set device: 1 service: urn:micasaverde-com:serviceId:ZWaveNetwork1 variable: NetStatusText was: OK now: Resetting ZWave Network #hooks: 0 upnp: 0 skip: 0 v:0x1a02d48/NONE duplicate:0 <0x7662b520> 06 06/21/21 8:45:59.637 Device_Variable::m_szValue_set device: 2 service: urn:micasaverde-com:serviceId:ZigbeeNetwork1 variable: NetStatusID was: 0 now: 3 #hooks: 0 upnp: 0 skip: 0 v:(nil)/NONE duplicate:0 <0x7662b520> 06 06/21/21 8:45:59.638 Device_Variable::m_szValue_set device: 2 service: urn:micasaverde-com:serviceId:ZigbeeNetwork1 variable: NetStatusText was: OK now: GET_LANG(resetting_zigbee_network,Resetting Zigbee Network) #hooks: 0 upnp: 0 skip: 0 v:0x12b9e60/NONE duplicate:0 <0x7662b520> 01 06/21/21 8:45:59.648 RAServerSync::SendAlert notification lost quit 1 device 50002761 servers:vera-us-oem-event12.mios.com/vera-us-oem-event11.mios.com for time 23822220 type 7 code user_data <0x7642b520> 02 06/21/21 8:46:01.381 UserData::CommitToDatabase data size 1244349 1244349 <0x770dc320> 01 06/21/21 8:46:01.675 UserData::WriteUserData saved--before move File Size: 234875 save size 234875 <0x770dc320> 02 06/21/21 8:46:01.675 UserData::TempLogFileSystemFailure start 0 <0x770dc320> 02 06/21/21 8:46:01.676 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x770dc320> 02 06/21/21 8:46:01.676 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x770dc320> 01 06/21/21 8:46:01.680 UserData::WriteUserData failed File Size: 234875 save size 234865 <0x770dc320> 02 06/21/21 8:46:01.680 UserData::TempLogFileSystemFailure start 1 <0x770dc320> 02 06/21/21 8:46:01.682 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x770dc320> 01 06/21/21 8:46:01.940 UserData::WriteUserData failed result:1 size2:234875 vs 234875 <0x770dc320> 02 06/21/21 8:46:01.941 UserData::TempLogFileSystemFailure start 1 <0x770dc320> 02 06/21/21 8:46:01.943 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x770dc320> 02 06/21/21 8:46:01.944 UserData::TempLogFileSystemFailure start 0 <0x770dc320> 02 06/21/21 8:46:01.945 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x770dc320> 02 06/21/21 8:46:01.945 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x770dc320> 50 06/21/21 8:46:03.102 luup_log:1304: VeraOpenSprinkler[1.50]: [updateFromController] error: false - nil - nil <0x72581520> 01 06/21/21 8:46:05.210 Main WatchDogRoutine: blocked - terminating 1 <0x695de520> 02 06/21/21 8:46:05.211 Dumping 40 locks <0x695de520> 02 06/21/21 8:46:05.211 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.211 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.211 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.211 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.212 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.212 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.212 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.212 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.212 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.212 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.213 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.213 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.213 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.213 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.213 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.213 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.214 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.214 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.214 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.214 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.214 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.214 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.215 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.215 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.215 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.215 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.215 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.215 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.216 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.216 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.216 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.216 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.216 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.216 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.217 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.217 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.217 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.217 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.217 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.217 OL: (0xbbc560) (>100746) Variable JobHandler_LuaUPnP.cpp l:9817 time: 4:00:00p (1624290365 s) thread: 0x6f948520 Rel: Y Got: Y <0x695de520> 02 06/21/21 8:46:05.217 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.218 OL: (0xbbc560) (>100751) Variable JobHandler_LuaUPnP.cpp l:9817 time: 4:00:00p (1624290365 s) thread: 0x6d948520 Rel: Y Got: Y <0x695de520> 2021-06-21 08:46:05 - LuaUPnP Terminated with Exit Code: 137 03 06/21/21 8:53:18.102 JobHandler_LuaUPnP::Reload: low memory Critical 1 m_bCriticalOnly 0 dirty data 1 running 1 <0x76aa7520> 01 06/21/21 8:53:18.103 luup_log:901: Tesla Car_error: Monitor awake state, failed #400 HTTP/1.1 400 BAD REQUEST !! <0x72ffd520> 02 06/21/21 8:53:18.482 UserData::CommitToDatabase data size 1244185 1244185 <0x76aa7520> 01 06/21/21 8:53:18.781 UserData::WriteUserData saved--before move File Size: 234865 save size 234865 <0x76aa7520> 02 06/21/21 8:53:18.781 UserData::TempLogFileSystemFailure start 0 <0x76aa7520> 02 06/21/21 8:53:18.781 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x76aa7520> 02 06/21/21 8:53:18.782 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x76aa7520> 01 06/21/21 8:53:18.787 UserData::WriteUserData failed File Size: 234865 save size 234875 <0x76aa7520> 02 06/21/21 8:53:18.787 UserData::TempLogFileSystemFailure start 1 <0x76aa7520> 02 06/21/21 8:53:18.789 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x76aa7520> 01 06/21/21 8:53:18.813 IOPort::Connect connect -1 192.168.8.43:23 <0x6a7c4520> 01 06/21/21 8:53:19.020 UserData::WriteUserData failed result:1 size2:234865 vs 234865 <0x76aa7520> 02 06/21/21 8:53:19.020 UserData::TempLogFileSystemFailure start 1 <0x76aa7520> 02 06/21/21 8:53:19.023 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x76aa7520> 02 06/21/21 8:53:19.027 UserData::TempLogFileSystemFailure start 0 <0x76aa7520> 02 06/21/21 8:53:19.028 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x76aa7520> 02 06/21/21 8:53:19.028 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x76aa7520> 06 06/21/21 8:53:19.029 Device_Variable::m_szValue_set device: 1 service: urn:micasaverde-com:serviceId:ZWaveNetwork1 variable: NetStatusID was: 1 now: 2 #hooks: 0 upnp: 0 skip: 0 v:0x14bad80/NONE duplicate:0 <0x76aa7520> 06 06/21/21 8:53:19.029 Device_Variable::m_szValue_set device: 1 service: urn:micasaverde-com:serviceId:ZWaveNetwork1 variable: NetStatusText was: OK now: Resetting ZWave Network #hooks: 0 upnp: 0 skip: 0 v:0x1e23b10/NONE duplicate:0 <0x76aa7520> 06 06/21/21 8:53:19.033 Device_Variable::m_szValue_set device: 2 service: urn:micasaverde-com:serviceId:ZigbeeNetwork1 variable: NetStatusID was: 0 now: 3 #hooks: 0 upnp: 0 skip: 0 v:(nil)/NONE duplicate:0 <0x76aa7520> 01 06/21/21 8:53:19.040 RAServerSync::SendAlert notification lost quit 1 device 50002761 servers:vera-us-oem-event12.mios.com/vera-us-oem-event11.mios.com for time 20546340 type 7 code user_data <0x76ca7520> 06 06/21/21 8:53:19.044 Device_Variable::m_szValue_set device: 2 service: urn:micasaverde-com:serviceId:ZigbeeNetwork1 variable: NetStatusText was: OK now: GET_LANG(resetting_zigbee_network,Resetting Zigbee Network) #hooks: 0 upnp: 0 skip: 0 v:0x16dadc0/NONE duplicate:0 <0x76aa7520> 02 06/21/21 8:53:20.807 UserData::CommitToDatabase data size 1244263 1244263 <0x77759320> 01 06/21/21 8:53:21.081 UserData::WriteUserData saved--before move File Size: 234875 save size 234875 <0x77759320> 02 06/21/21 8:53:21.081 UserData::TempLogFileSystemFailure start 0 <0x77759320> 02 06/21/21 8:53:21.116 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x77759320> 02 06/21/21 8:53:21.117 UserData::TempLogFileSystemFailure 699 res:1 -rw-r--r-- 1 root root 504 Jun 26 2017 /etc/cmh/user_data.json.luup.lzo -rw-r--r-- 1 root root 234865 Jun 21 08:53 /etc/cmh/user_data.json.lzo -rw-r--r-- 1 root root 234996 Jun 21 08:11 /etc/cmh/user_data.json.lzo.1 -rw-r--r-- 1 root root 234930 Jun 21 08:02 /etc/cmh/user_data.json.lzo.2 -rw-r--r-- 1 root root 234968 Jun 21 07:47 /etc/cmh/user_data.json.lzo.3 -rw-r--r-- 1 root root 234658 Jun 21 07:11 /etc/cmh/user_data.json.lzo.4 -rw-r--r-- 1 root root 234909 Jun 21 06:50 /etc/cmh/user_data.json.lzo.5 -rw-r--r-- 1 root root 234875 Jun 21 08:53 /etc/cmh/user_data.json.lzo.new <0x77759320> 02 06/21/21 8:53:21.228 UserData::TempLogFileSystemFailure start 0 <0x77759320> 02 06/21/21 8:53:21.259 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x77759320> 02 06/21/21 8:53:21.259 UserData::TempLogFileSystemFailure 610 res:1 -rw-r--r-- 1 root root 504 Jun 26 2017 /etc/cmh/user_data.json.luup.lzo -rw-r--r-- 1 root root 234875 Jun 21 08:53 /etc/cmh/user_data.json.lzo -rw-r--r-- 1 root root 234865 Jun 21 08:53 /etc/cmh/user_data.json.lzo.1 -rw-r--r-- 1 root root 234996 Jun 21 08:11 /etc/cmh/user_data.json.lzo.2 -rw-r--r-- 1 root root 234930 Jun 21 08:02 /etc/cmh/user_data.json.lzo.3 -rw-r--r-- 1 root root 234968 Jun 21 07:47 /etc/cmh/user_data.json.lzo.4 -rw-r--r-- 1 root root 234658 Jun 21 07:11 /etc/cmh/user_data.json.lzo.5 <0x77759320> 06 06/21/21 8:53:21.465 Device_Variable::m_szValue_set device: 2 service: urn:micasaverde-com:serviceId:ZigbeeDevice1 variable: PendingRemoveDevices was: 0024460000144655-01d8,0024460000146120-a155,00244600001467ad-a538 now: 0024460000144655-01d8,0024460000146120-a155,00244600001467ad-a538 #hooks: 0 upnp: 0 skip: 0 v:(nil)/NONE duplicate:1 <0x77759320> 06 06/21/21 8:53:21.469 Device_Variable::m_szValue_set device: 2 service: urn:micasaverde-com:serviceId:ZigbeeDevice1 variable: UnknownDevices was: now: #hooks: 0 upnp: 0 skip: 0 v:(nil)/NONE duplicate:1 <0x77759320> 03 06/21/21 8:53:22.100 LuaUPNP: ending <0x77759320> 2021-06-21 08:53:22 - LuaUPnP Terminated with Exit Code: 0``` -

Recently I have been getting constant reloads on my Vera Plus running the latest 7.32 beta build. It usually starts after a 3-4 day Luup uptime and the reloads start at around 5am local time and keep going every 30 mins until I physically restart my Vera Plus (unplugging and plugging it back in). Right before a reload I see on the status bar above the modes on the homepage "cannot write user data" then after a few minutes a reload occurs. Looking into the logs I have seen mention of

03 06/21/21 8:53:18.102 JobHandler_LuaUPnP::Reload: low memory Critical 1 m_bCriticalOnly 0 dirty data 1 running 1 <0x76aa7520>almost every single time a reload happens. I have attached logs from 2 instances this morning where a reload happened. I have noticed that the OpenSprinkler plugin has been very chatty even though I have set debugging to 0, is that what is filling up temp log system?01 06/21/21 8:45:58.123 IOPort::Connect connect -1 192.168.8.43:23 <0x6a148520> 02 06/21/21 8:45:59.031 UserData::CommitToDatabase data size 1244271 1244271 <0x7662b520> 01 06/21/21 8:45:59.333 UserData::WriteUserData saved--before move File Size: 234865 save size 234865 <0x7662b520> 02 06/21/21 8:45:59.333 UserData::TempLogFileSystemFailure start 0 <0x7662b520> 02 06/21/21 8:45:59.334 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x7662b520> 02 06/21/21 8:45:59.334 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x7662b520> 01 06/21/21 8:45:59.341 UserData::WriteUserData failed File Size: 234865 save size 234902 <0x7662b520> 02 06/21/21 8:45:59.342 UserData::TempLogFileSystemFailure start 1 <0x7662b520> 02 06/21/21 8:45:59.344 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x7662b520> 01 06/21/21 8:45:59.632 UserData::WriteUserData failed result:1 size2:234865 vs 234865 <0x7662b520> 02 06/21/21 8:45:59.632 UserData::TempLogFileSystemFailure start 1 <0x7662b520> 02 06/21/21 8:45:59.634 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x7662b520> 02 06/21/21 8:45:59.635 UserData::TempLogFileSystemFailure start 0 <0x7662b520> 02 06/21/21 8:45:59.635 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x7662b520> 02 06/21/21 8:45:59.636 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x7662b520> 06 06/21/21 8:45:59.636 Device_Variable::m_szValue_set device: 1 service: urn:micasaverde-com:serviceId:ZWaveNetwork1 variable: NetStatusID was: 1 now: 2 #hooks: 0 upnp: 0 skip: 0 v:0x1099d38/NONE duplicate:0 <0x7662b520> 06 06/21/21 8:45:59.637 Device_Variable::m_szValue_set device: 1 service: urn:micasaverde-com:serviceId:ZWaveNetwork1 variable: NetStatusText was: OK now: Resetting ZWave Network #hooks: 0 upnp: 0 skip: 0 v:0x1a02d48/NONE duplicate:0 <0x7662b520> 06 06/21/21 8:45:59.637 Device_Variable::m_szValue_set device: 2 service: urn:micasaverde-com:serviceId:ZigbeeNetwork1 variable: NetStatusID was: 0 now: 3 #hooks: 0 upnp: 0 skip: 0 v:(nil)/NONE duplicate:0 <0x7662b520> 06 06/21/21 8:45:59.638 Device_Variable::m_szValue_set device: 2 service: urn:micasaverde-com:serviceId:ZigbeeNetwork1 variable: NetStatusText was: OK now: GET_LANG(resetting_zigbee_network,Resetting Zigbee Network) #hooks: 0 upnp: 0 skip: 0 v:0x12b9e60/NONE duplicate:0 <0x7662b520> 01 06/21/21 8:45:59.648 RAServerSync::SendAlert notification lost quit 1 device 50002761 servers:vera-us-oem-event12.mios.com/vera-us-oem-event11.mios.com for time 23822220 type 7 code user_data <0x7642b520> 02 06/21/21 8:46:01.381 UserData::CommitToDatabase data size 1244349 1244349 <0x770dc320> 01 06/21/21 8:46:01.675 UserData::WriteUserData saved--before move File Size: 234875 save size 234875 <0x770dc320> 02 06/21/21 8:46:01.675 UserData::TempLogFileSystemFailure start 0 <0x770dc320> 02 06/21/21 8:46:01.676 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x770dc320> 02 06/21/21 8:46:01.676 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x770dc320> 01 06/21/21 8:46:01.680 UserData::WriteUserData failed File Size: 234875 save size 234865 <0x770dc320> 02 06/21/21 8:46:01.680 UserData::TempLogFileSystemFailure start 1 <0x770dc320> 02 06/21/21 8:46:01.682 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x770dc320> 01 06/21/21 8:46:01.940 UserData::WriteUserData failed result:1 size2:234875 vs 234875 <0x770dc320> 02 06/21/21 8:46:01.941 UserData::TempLogFileSystemFailure start 1 <0x770dc320> 02 06/21/21 8:46:01.943 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x770dc320> 02 06/21/21 8:46:01.944 UserData::TempLogFileSystemFailure start 0 <0x770dc320> 02 06/21/21 8:46:01.945 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x770dc320> 02 06/21/21 8:46:01.945 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x770dc320> 50 06/21/21 8:46:03.102 luup_log:1304: VeraOpenSprinkler[1.50]: [updateFromController] error: false - nil - nil <0x72581520> 01 06/21/21 8:46:05.210 Main WatchDogRoutine: blocked - terminating 1 <0x695de520> 02 06/21/21 8:46:05.211 Dumping 40 locks <0x695de520> 02 06/21/21 8:46:05.211 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.211 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.211 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.211 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.212 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.212 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.212 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.212 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.212 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.212 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.213 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.213 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.213 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.213 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.213 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.213 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.214 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.214 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.214 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.214 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.214 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.214 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.215 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.215 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.215 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.215 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.215 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.215 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.216 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.216 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.216 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.216 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.216 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.216 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.217 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.217 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.217 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.217 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.217 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.217 OL: (0xbbc560) (>100746) Variable JobHandler_LuaUPnP.cpp l:9817 time: 4:00:00p (1624290365 s) thread: 0x6f948520 Rel: Y Got: Y <0x695de520> 02 06/21/21 8:46:05.217 finished check for exceptions <0x695de520> 02 06/21/21 8:46:05.218 OL: (0xbbc560) (>100751) Variable JobHandler_LuaUPnP.cpp l:9817 time: 4:00:00p (1624290365 s) thread: 0x6d948520 Rel: Y Got: Y <0x695de520> 2021-06-21 08:46:05 - LuaUPnP Terminated with Exit Code: 137 03 06/21/21 8:53:18.102 JobHandler_LuaUPnP::Reload: low memory Critical 1 m_bCriticalOnly 0 dirty data 1 running 1 <0x76aa7520> 01 06/21/21 8:53:18.103 luup_log:901: Tesla Car_error: Monitor awake state, failed #400 HTTP/1.1 400 BAD REQUEST !! <0x72ffd520> 02 06/21/21 8:53:18.482 UserData::CommitToDatabase data size 1244185 1244185 <0x76aa7520> 01 06/21/21 8:53:18.781 UserData::WriteUserData saved--before move File Size: 234865 save size 234865 <0x76aa7520> 02 06/21/21 8:53:18.781 UserData::TempLogFileSystemFailure start 0 <0x76aa7520> 02 06/21/21 8:53:18.781 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x76aa7520> 02 06/21/21 8:53:18.782 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x76aa7520> 01 06/21/21 8:53:18.787 UserData::WriteUserData failed File Size: 234865 save size 234875 <0x76aa7520> 02 06/21/21 8:53:18.787 UserData::TempLogFileSystemFailure start 1 <0x76aa7520> 02 06/21/21 8:53:18.789 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x76aa7520> 01 06/21/21 8:53:18.813 IOPort::Connect connect -1 192.168.8.43:23 <0x6a7c4520> 01 06/21/21 8:53:19.020 UserData::WriteUserData failed result:1 size2:234865 vs 234865 <0x76aa7520> 02 06/21/21 8:53:19.020 UserData::TempLogFileSystemFailure start 1 <0x76aa7520> 02 06/21/21 8:53:19.023 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x76aa7520> 02 06/21/21 8:53:19.027 UserData::TempLogFileSystemFailure start 0 <0x76aa7520> 02 06/21/21 8:53:19.028 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x76aa7520> 02 06/21/21 8:53:19.028 UserData::TempLogFileSystemFailure 0 res:0 (null) <0x76aa7520> 06 06/21/21 8:53:19.029 Device_Variable::m_szValue_set device: 1 service: urn:micasaverde-com:serviceId:ZWaveNetwork1 variable: NetStatusID was: 1 now: 2 #hooks: 0 upnp: 0 skip: 0 v:0x14bad80/NONE duplicate:0 <0x76aa7520> 06 06/21/21 8:53:19.029 Device_Variable::m_szValue_set device: 1 service: urn:micasaverde-com:serviceId:ZWaveNetwork1 variable: NetStatusText was: OK now: Resetting ZWave Network #hooks: 0 upnp: 0 skip: 0 v:0x1e23b10/NONE duplicate:0 <0x76aa7520> 06 06/21/21 8:53:19.033 Device_Variable::m_szValue_set device: 2 service: urn:micasaverde-com:serviceId:ZigbeeNetwork1 variable: NetStatusID was: 0 now: 3 #hooks: 0 upnp: 0 skip: 0 v:(nil)/NONE duplicate:0 <0x76aa7520> 01 06/21/21 8:53:19.040 RAServerSync::SendAlert notification lost quit 1 device 50002761 servers:vera-us-oem-event12.mios.com/vera-us-oem-event11.mios.com for time 20546340 type 7 code user_data <0x76ca7520> 06 06/21/21 8:53:19.044 Device_Variable::m_szValue_set device: 2 service: urn:micasaverde-com:serviceId:ZigbeeNetwork1 variable: NetStatusText was: OK now: GET_LANG(resetting_zigbee_network,Resetting Zigbee Network) #hooks: 0 upnp: 0 skip: 0 v:0x16dadc0/NONE duplicate:0 <0x76aa7520> 02 06/21/21 8:53:20.807 UserData::CommitToDatabase data size 1244263 1244263 <0x77759320> 01 06/21/21 8:53:21.081 UserData::WriteUserData saved--before move File Size: 234875 save size 234875 <0x77759320> 02 06/21/21 8:53:21.081 UserData::TempLogFileSystemFailure start 0 <0x77759320> 02 06/21/21 8:53:21.116 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x77759320> 02 06/21/21 8:53:21.117 UserData::TempLogFileSystemFailure 699 res:1 -rw-r--r-- 1 root root 504 Jun 26 2017 /etc/cmh/user_data.json.luup.lzo -rw-r--r-- 1 root root 234865 Jun 21 08:53 /etc/cmh/user_data.json.lzo -rw-r--r-- 1 root root 234996 Jun 21 08:11 /etc/cmh/user_data.json.lzo.1 -rw-r--r-- 1 root root 234930 Jun 21 08:02 /etc/cmh/user_data.json.lzo.2 -rw-r--r-- 1 root root 234968 Jun 21 07:47 /etc/cmh/user_data.json.lzo.3 -rw-r--r-- 1 root root 234658 Jun 21 07:11 /etc/cmh/user_data.json.lzo.4 -rw-r--r-- 1 root root 234909 Jun 21 06:50 /etc/cmh/user_data.json.lzo.5 -rw-r--r-- 1 root root 234875 Jun 21 08:53 /etc/cmh/user_data.json.lzo.new <0x77759320> 02 06/21/21 8:53:21.228 UserData::TempLogFileSystemFailure start 0 <0x77759320> 02 06/21/21 8:53:21.259 UserData::TempLogFileSystemFailure (not failure, only WriteUserData) 0 <0x77759320> 02 06/21/21 8:53:21.259 UserData::TempLogFileSystemFailure 610 res:1 -rw-r--r-- 1 root root 504 Jun 26 2017 /etc/cmh/user_data.json.luup.lzo -rw-r--r-- 1 root root 234875 Jun 21 08:53 /etc/cmh/user_data.json.lzo -rw-r--r-- 1 root root 234865 Jun 21 08:53 /etc/cmh/user_data.json.lzo.1 -rw-r--r-- 1 root root 234996 Jun 21 08:11 /etc/cmh/user_data.json.lzo.2 -rw-r--r-- 1 root root 234930 Jun 21 08:02 /etc/cmh/user_data.json.lzo.3 -rw-r--r-- 1 root root 234968 Jun 21 07:47 /etc/cmh/user_data.json.lzo.4 -rw-r--r-- 1 root root 234658 Jun 21 07:11 /etc/cmh/user_data.json.lzo.5 <0x77759320> 06 06/21/21 8:53:21.465 Device_Variable::m_szValue_set device: 2 service: urn:micasaverde-com:serviceId:ZigbeeDevice1 variable: PendingRemoveDevices was: 0024460000144655-01d8,0024460000146120-a155,00244600001467ad-a538 now: 0024460000144655-01d8,0024460000146120-a155,00244600001467ad-a538 #hooks: 0 upnp: 0 skip: 0 v:(nil)/NONE duplicate:1 <0x77759320> 06 06/21/21 8:53:21.469 Device_Variable::m_szValue_set device: 2 service: urn:micasaverde-com:serviceId:ZigbeeDevice1 variable: UnknownDevices was: now: #hooks: 0 upnp: 0 skip: 0 v:(nil)/NONE duplicate:1 <0x77759320> 03 06/21/21 8:53:22.100 LuaUPNP: ending <0x77759320> 2021-06-21 08:53:22 - LuaUPnP Terminated with Exit Code: 0``` -

@pabla I could definitely remove some logs and move them to debug only.

All that said, these crashes are normal and all related to memory leaks. I have similar ones and I’ve just set a memory watch to automatically reload it under these circumstances.

@therealdb Do you think using a USB for logs would stop the memory leaks? The reloads continually happen until I manually do something about it

-

@therealdb Do you think using a USB for logs would stop the memory leaks? The reloads continually happen until I manually do something about it

-

@pabla I’m not confident it will. I have my logs on a network share… just use sysmon plugin to watch for memory and when below 90.000 do a luup.reload via a scene.

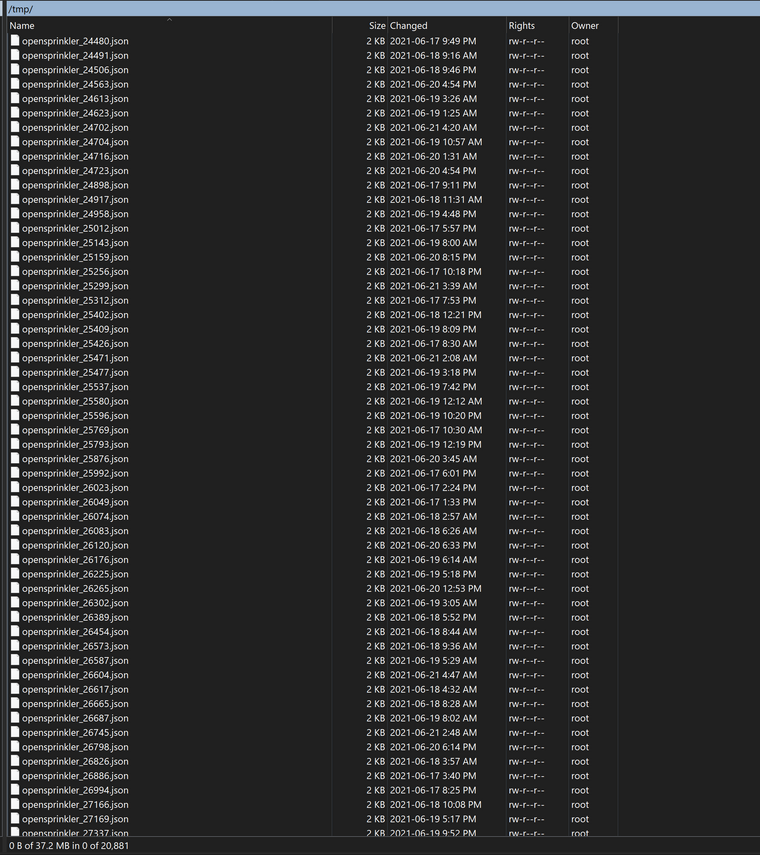

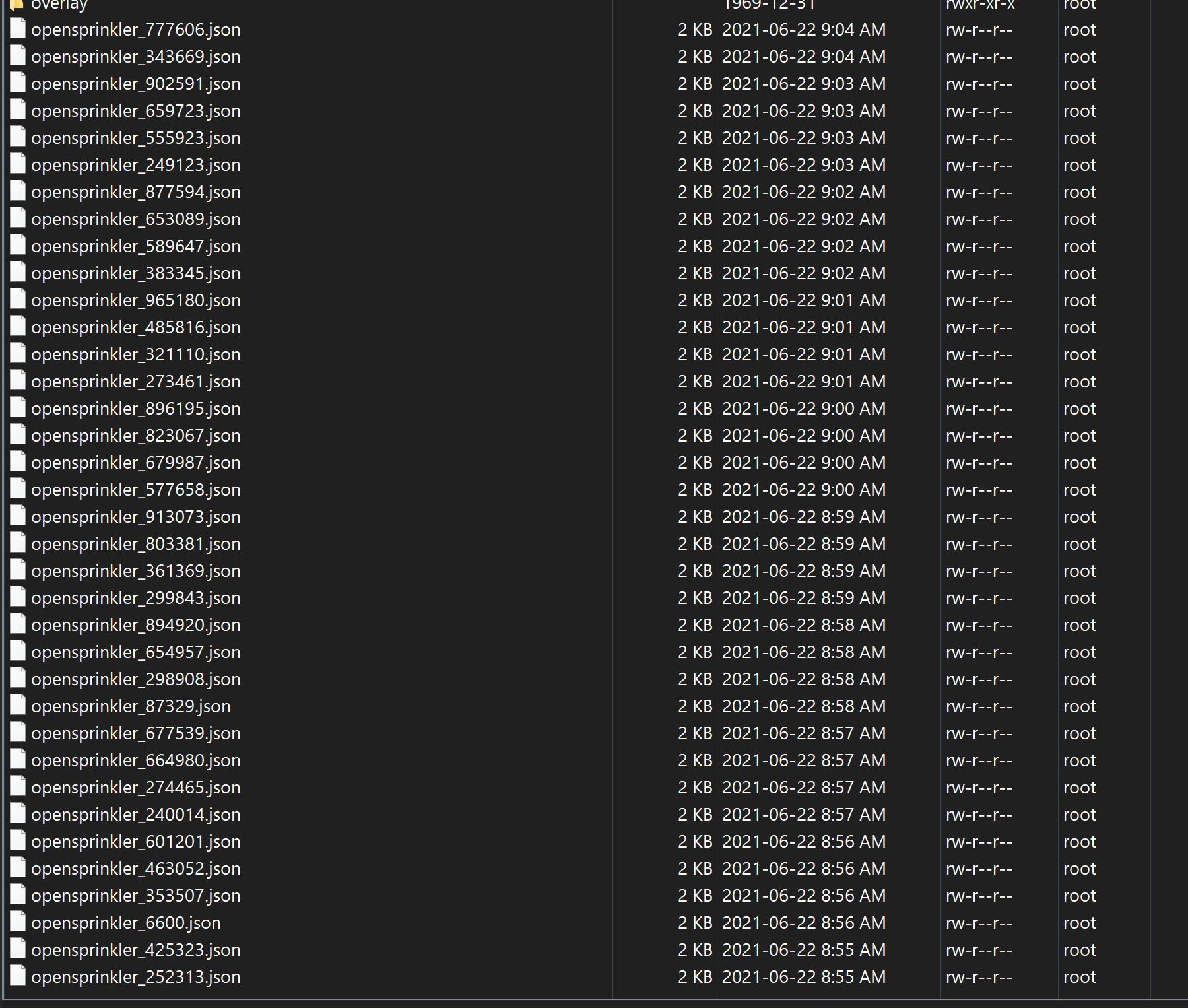

@therealdb I just SSH into my unit and checked the /tmp folder and I found this, is this normal? I have tons of these files which I have never seen before

Edit: I deleted all those files and more keep showing up by the minute lol! Something is definitely up

-

@therealdb I just SSH into my unit and checked the /tmp folder and I found this, is this normal? I have tons of these files which I have never seen before

Edit: I deleted all those files and more keep showing up by the minute lol! Something is definitely up

-

@therealdb I just SSH into my unit and checked the /tmp folder and I found this, is this normal? I have tons of these files which I have never seen before

Edit: I deleted all those files and more keep showing up by the minute lol! Something is definitely up

@pabla version 1.51 is on github, addressing the temp files problem specifically. It will delete file older than 3 minutes. I've it running in my own test system with no problems.

I still need to fix the duplication of programs in case of re-config, so just update the CurrentVersion variable on the master to 1.51 before updating the plug-in's files, so it will not configure it again.

A fix for "ChildrenInSameRoom" is on its way in the next release. Try it and lemme know.

-

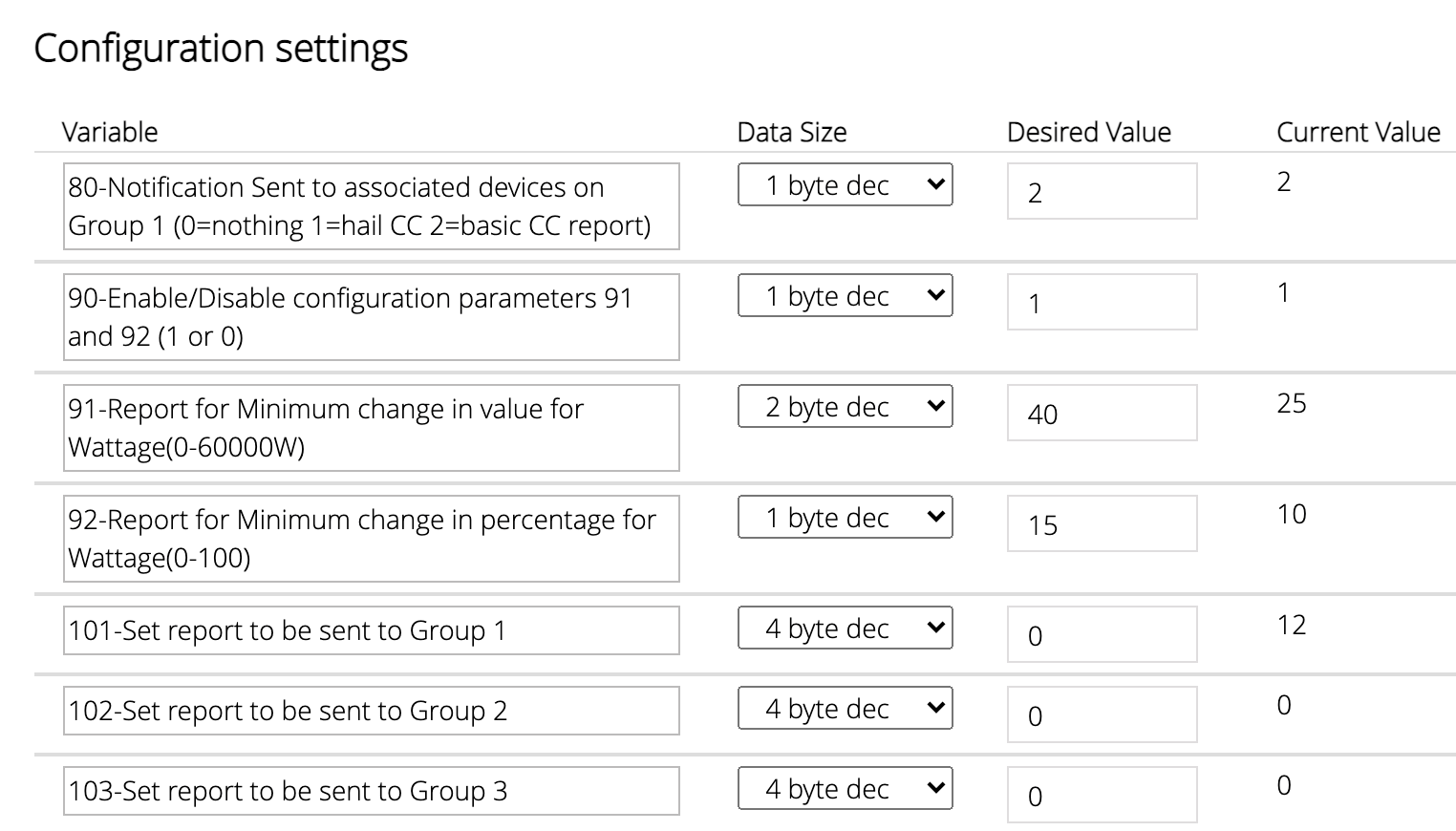

One thing to add here, a possibility: I notice that the plugin is using the 'ip' attribute to store the IP address of the controller. I have found that this causes a lot of instability. Luup has certain automatic things that it does in the background to try to open connections when the IP is stored on that attribute. It's all part of their built-in automatic I/O for serial and IP connections, which this plugin doesn't use. But Luup will try, try and retry forever to start and maintain a connection to that IP. I believe that this is at least one source of a memory leak -- I suspect their error handling on connection failure is not completely cleaning up after itself, so the system leaks memory, and/or possible even sockets. I discovered this some years ago and switched all of my plugins to just use state variables for the IP. My systems do not restart due to memory leaks, and memory usage is generally flat.

-

One thing to add here, a possibility: I notice that the plugin is using the 'ip' attribute to store the IP address of the controller. I have found that this causes a lot of instability. Luup has certain automatic things that it does in the background to try to open connections when the IP is stored on that attribute. It's all part of their built-in automatic I/O for serial and IP connections, which this plugin doesn't use. But Luup will try, try and retry forever to start and maintain a connection to that IP. I believe that this is at least one source of a memory leak -- I suspect their error handling on connection failure is not completely cleaning up after itself, so the system leaks memory, and/or possible even sockets. I discovered this some years ago and switched all of my plugins to just use state variables for the IP. My systems do not restart due to memory leaks, and memory usage is generally flat.

@toggledbits said in Help with Luup Reloads:

But Luup will try, try and retry forever to start and maintain a connection to that IP.

Yes, this is, indeed, an issue, although NOT one, I would say, for openLuup. It may well explain why Vera behaves (sort of) when you remove some plugins.

For some time, now, I had a Vera Edge running without any plugins or Z-wave devices, and, I have to say, it just runs and runs!

-

One thing to add here, a possibility: I notice that the plugin is using the 'ip' attribute to store the IP address of the controller. I have found that this causes a lot of instability. Luup has certain automatic things that it does in the background to try to open connections when the IP is stored on that attribute. It's all part of their built-in automatic I/O for serial and IP connections, which this plugin doesn't use. But Luup will try, try and retry forever to start and maintain a connection to that IP. I believe that this is at least one source of a memory leak -- I suspect their error handling on connection failure is not completely cleaning up after itself, so the system leaks memory, and/or possible even sockets. I discovered this some years ago and switched all of my plugins to just use state variables for the IP. My systems do not restart due to memory leaks, and memory usage is generally flat.

@toggledbits yep, I will change it in next build. I'm onto it as we speak. On my system I have few plug-ins, no one is using an IP and I'm still experiencing memory leaks. But I will probably just move everything to openLuup and use the Vera as a radio system.

-

@toggledbits said in Help with Luup Reloads:

But Luup will try, try and retry forever to start and maintain a connection to that IP.

Yes, this is, indeed, an issue, although NOT one, I would say, for openLuup. It may well explain why Vera behaves (sort of) when you remove some plugins.

For some time, now, I had a Vera Edge running without any plugins or Z-wave devices, and, I have to say, it just runs and runs!

@akbooer said in Help with Luup Reloads:

Yes, this is, indeed, an issue, although NOT one, I would say, for openLuup.

Sorry, did I miss that this was openLuup? Or are you just confirming that openLuup is better than real Luup (which everyone already knows)?

-

@toggledbits yep, I will change it in next build. I'm onto it as we speak. On my system I have few plug-ins, no one is using an IP and I'm still experiencing memory leaks. But I will probably just move everything to openLuup and use the Vera as a radio system.

@therealdb said in Help with Luup Reloads:

yep, I will change it in next build. I'm onto it as we speak.

One more thing... some versions of Luup have trouble returning the status from

os.execute(). They said they have fixed it in the newer beta builds (5363/4/5), but it's concerning that it could be broken at all (should be straightforward, not unstable), and Edward's response to me when queried was a bit uncertain. So I've started moving away from using the return value under Luup and started doing error detection other ways (e.g. does the file I expect now exist?). -

@akbooer said in Help with Luup Reloads:

Yes, this is, indeed, an issue, although NOT one, I would say, for openLuup.

Sorry, did I miss that this was openLuup? Or are you just confirming that openLuup is better than real Luup (which everyone already knows)?

@toggledbits said in Help with Luup Reloads:

Sorry, did I miss that this was openLuup?

Oooh, hope not... NO, just checked...

@pabla said in Help with Luup Reloads:

Recently I have been getting constant reloads on my Vera Plus

@toggledbits said in Help with Luup Reloads:

are you just confirming that openLuup is better than real Luup

Er, yes, just that, really. Let's say that this is one feature I chose not to emulate.

-

@pabla I found a small problem, and I'll fix it in a moment. Please wait for a new release.

@therealdb No rush, just reporting back

-

@therealdb No rush, just reporting back

@pabla 1.51-hotfix is on GitHub.

- programs duplication is now fixed, so a reconfiguration is OK

- IP is now a variable (and it's automatically migrated - don't worry)

- temp files are correctly deleted

- I've tracked the problem with infinite stations - and I'm working on a permanent fix. this seems to be related to reloads and I'll investigate this further in the next days.

Give it a try!

-

One thing to add here, a possibility: I notice that the plugin is using the 'ip' attribute to store the IP address of the controller. I have found that this causes a lot of instability. Luup has certain automatic things that it does in the background to try to open connections when the IP is stored on that attribute. It's all part of their built-in automatic I/O for serial and IP connections, which this plugin doesn't use. But Luup will try, try and retry forever to start and maintain a connection to that IP. I believe that this is at least one source of a memory leak -- I suspect their error handling on connection failure is not completely cleaning up after itself, so the system leaks memory, and/or possible even sockets. I discovered this some years ago and switched all of my plugins to just use state variables for the IP. My systems do not restart due to memory leaks, and memory usage is generally flat.

-

@pabla 1.51-hotfix is on GitHub.

- programs duplication is now fixed, so a reconfiguration is OK

- IP is now a variable (and it's automatically migrated - don't worry)

- temp files are correctly deleted

- I've tracked the problem with infinite stations - and I'm working on a permanent fix. this seems to be related to reloads and I'll investigate this further in the next days.

Give it a try!

@therealdb So I updated to the latest hot fix, and the files still aren't getting deleted. I have a fill from 11:06am local time and its 11:27am. Do they auto delete after a longer time?

-

@therealdb So I updated to the latest hot fix, and the files still aren't getting deleted. I have a fill from 11:06am local time and its 11:27am. Do they auto delete after a longer time?