Multi-System Reactor


I'm looking at using Home Assistant for the first time, either on their own hardware, a Home Assistant Green, or on a cheap second-hand mini PC.

It sounds like Home Assistant OS is Linux-based and uses Docker for HA etc.

Would I also be able to install things like MSR on their OS, on the same box?

Thanks.

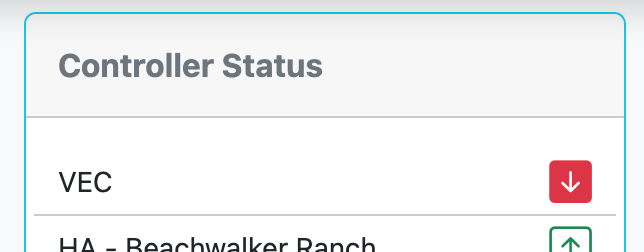

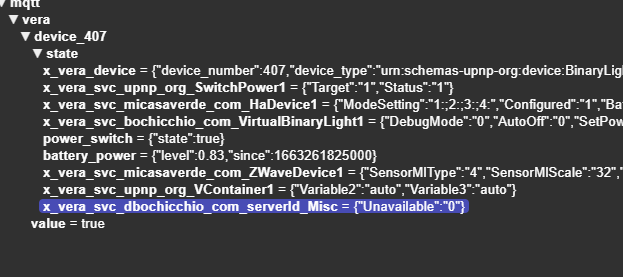

Having been messing around with some stuff, I worked out a way to self-trigger some tests that I wanted to do on the HA <> MSR integration.

This got me wondering whether there's an entity that changes state, or is exposed, when a configured controller goes offline. I can't see one, but thought it might be hidden or something?

Cheers

C

Using build 25328 and having the following users.yaml configuration:

    users:
      # This section defines your valid users.
      admin: *******

    groups:
      # This section defines your user groups. Optionally, it defines application
      # and API access restrictions (ACLs) for the group. Users may belong to
      # more than one group. Again, no required or special groups here.
      admin_group:
        users:
          - admin
        applications: true # special form allows access to ALL applications
      guests:
        users: "*"
        applications:
          - dashboard

    api_acls:
      # This ACL allows users in the "admin" group to access the API
      - url: "/api"
        group: admin_group
        allow: true
        log: true
      # This ACL allows anyone/thing to access the /api/v1/alive API endpoint
      - url: "/api/v1/alive"
        allow: true

    session:
      timeout: 7200 # (seconds)
      rolling: true # activity extends timeout when true

    # If log_acls is true, the selected ACL for every API access is logged.
    log_acls: true

    # If debug_acls is true, even more information about ACL selection is logged.
    debug_acls: true

My goal is to allow anonymous users to access the dashboard, but MSR still asks for a password when I try to open it. There is nothing in the logs related to dashboard access. It is probably an error in the configuration, but I need help finding it. I tried putting url: "/dashboard" under api_acls, but that was a long shot and didn't work.

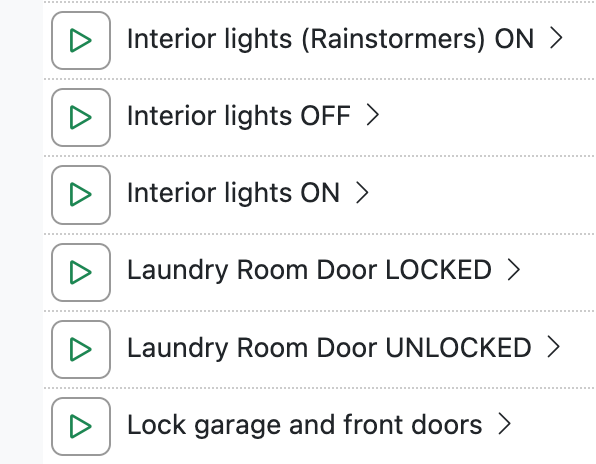

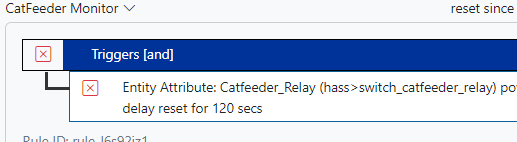

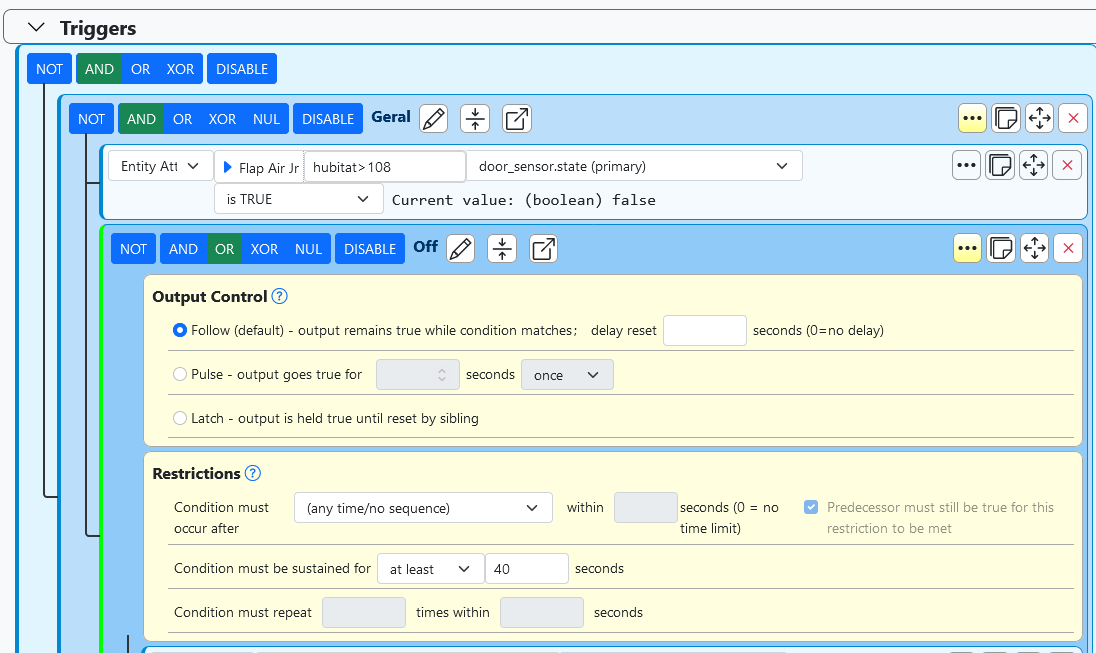

I use Virtual Entity Controller virtual switches which I turn on via webhooks from other applications. Once a switch triggers and turns on, I can then activate associated rules.

I would like each virtual switch to automatically turn off after a configurable time (e.g., 5 seconds, 10 seconds).

Is there a better way to achieve this auto-off behavior instead of creating a separate rule for each switch that uses the 'Condition must be sustained for' option to turn it off?

With a large number of these switches (and the associated turn-off rules), I'm checking to see if there is a simpler approach. If not, could this be a feature request to add an auto-off timer directly to the virtual switches?

Thanks

Reactor (Multi-hub) latest-26011-c621bbc7

VirtualEntityController v25356

Synology Docker

TL;DR: Format of data in storage directory will soon change. Make sure you are backing up the contents of that directory in its entirety, and you preserve your backups for an extended period, particularly the backup you take right before upgrading to the build containing this change (date of that is still to be determined, but soon). The old data format will remain readable (so you'll be able to read your pre-change backups) for the foreseeable future.

In support of a number of other changes in the works, I have found it necessary to change the storage format for Reactor objects in storage at the physical level.

Until now, plain, standard JSON has been used to store the data (everything under the storage directory). This has served well, but has a few limitations, including no real support for native JavaScript objects like Date, Map, Set, and others. It also is unable to store data that contains "loops" — objects that reference themselves in some way.
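To make the limitations above concrete, here is a minimal TypeScript sketch of what a plain JSON round trip does to these object types. It is only an illustration of standard `JSON.stringify`/`JSON.parse` behavior; the `roundTrip` helper and the sample object are made up for this example and are not part of Reactor.

```typescript
// Plain JSON round trip: serialize, then parse back.
// (Hypothetical helper for illustration only.)
function roundTrip(value: unknown): unknown {
  return JSON.parse(JSON.stringify(value));
}

const original = {
  when: new Date(0),
  lookup: new Map([["a", 1]]),
  tags: new Set(["x", "y"]),
};

const restored = roundTrip(original) as Record<string, unknown>;

// A Date comes back as an ISO string, not as a Date object.
const dateSurvives = restored.when instanceof Date;

// Map and Set serialize as empty objects, so their contents are lost.
const mapText = JSON.stringify(restored.lookup);
const setText = JSON.stringify(restored.tags);

// An object that references itself cannot be serialized at all.
const node: { name: string; self?: unknown } = { name: "loop" };
node.self = node;
let cycleError = "";
try {
  JSON.stringify(node);
} catch (err) {
  cycleError = err instanceof TypeError ? "TypeError" : "other";
}

console.log(dateSurvives, typeof restored.when, mapText, setText, cycleError);
```

The Date degrades to a string, the Map and Set come back as empty objects, and the self-referencing object throws instead of being stored.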

I'm not sure exactly when, but in the not-too-distant future I will publish a build using the new data format. It will automatically convert existing JSON data to the new format. For the moment, it will save data in both the new format and the old JSON format, preferring the former when loading data from storage. I have been running my own home with this new format for several months, and have no issues with data loss or corruption.

A few other things to know:

If you are not already backing up your storage directory, you should be. At a minimum, back this directory up every time you make big changes to your Rules, Reactions, etc.

Your existing JSON-format backups will continue to be readable for the long-term (years). The code that loads data from these files looks for the new file format first (which will have a .dval suffix), and if not found, will happily read (and convert) a same-basenamed .json file (i.e. it looks for ruleid.dval first, and if it doesn't find it, it tries to load ruleid.json). I'll publish detailed instructions for restoring from old backups when the build is posted (it's easy).
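The lookup order described above (new format first, then the same-basenamed JSON file) can be sketched as follows. This is an illustration under assumptions, not Reactor's actual loader: `pickStorageFile` and its `exists` callback are hypothetical names, and the file check is injected so the example runs without touching a real storage directory.

```typescript
// Hypothetical helper: choose which file to load for a stored object.
// `exists` is injected so the sketch does not depend on a real filesystem.
function pickStorageFile(
  basename: string,
  exists: (filename: string) => boolean
): string | null {
  // Preference order taken from the description: .dval first, then legacy .json.
  for (const suffix of [".dval", ".json"]) {
    const candidate = basename + suffix;
    if (exists(candidate)) {
      return candidate;
    }
  }
  return null; // neither form is present
}

// In-memory stand-ins for a pre-change backup and an upgraded storage directory.
const oldBackup = new Set(["ruleid.json"]);
const upgraded = new Set(["ruleid.json", "ruleid.dval"]);

const fromOldBackup = pickStorageFile("ruleid", (f) => oldBackup.has(f));
const fromUpgraded = pickStorageFile("ruleid", (f) => upgraded.has(f));
console.log(fromOldBackup, fromUpgraded);
```

With only `ruleid.json` present, the legacy file is chosen; once `ruleid.dval` exists alongside it, the new file takes precedence.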

The new .dval files are not directly human-readable or editable as easily as the old .json files. A new utility will be provided in the tools directory to convert .dval data to .json format, which you can then read or edit if you find that necessary. However, that may not work for all future data, as my intent is to make more native JavaScript objects directly storable, and many of those objects cannot be stored in JSON.

You may need to modify your backup tools/scripts to pick up the new files: if you explicitly name .json files (rather than just specifying the entire storage directory) in your backup configuration, you will need to add .dval files to get a complete, accurate backup. I don't think this will be an issue for any of you; I imagine that you're all just backing up the entire contents of storage regardless of format/name. That is the safest (and IMO most correct) way to go; if that's not what you're doing, consider changing your approach.

The current code stores the data in both the .dval form and the .json form to hedge against any real-world problems I don't encounter in my own use. Some future build will drop this redundancy (i.e. save only to .dval form). However, the read code for the .json form will remain in any case.

This applies only to persistent storage that Reactor creates and controls under the storage tree. All other JSON data files (e.g. device data for Controllers) are unaffected by this change and will remain in that form. YAML files are also unaffected by this change.

This thread is open for any questions or concerns.

Just another thought. Adding devices from my Home Assistant / Zigbee2MQTT integration. Works perfectly but they always add as their IEEE address. Some of these devices have up to 10 entities associated, and the moment they are renamed to something sensible, each of those entities 'ceases to exist' in MSR. I like things tidy, and deleting each defunct entity needs 3 clicks.

Any chance of a 'bulk delete' option?

No biggy, as I've pretty much finished my Z-wave migration and I don't expect to be adding more than 2 new Zigbee devices.

Cheers

C

Build 21228 has been released. Docker images available from DockerHub as usual, and bare-metal packages here.

Home Assistant up to version 2021.8.6 supported; the online version of the manual will now state the current supported versions;

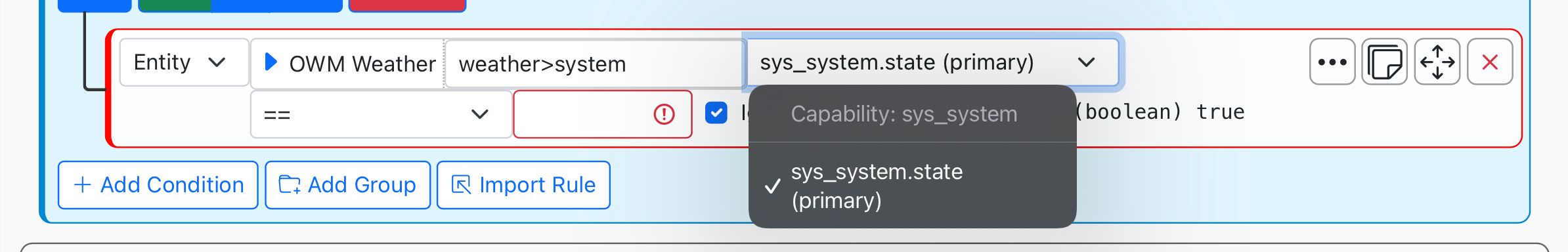

Fix an error in OWMWeatherController that could cause it to stop updating;

Unify the approach to entity filtering on all hub interface classes (controllers); this works for device entities only; it may be extended to other entities later;

Improve error detail in messages for EzloController during auth phase;

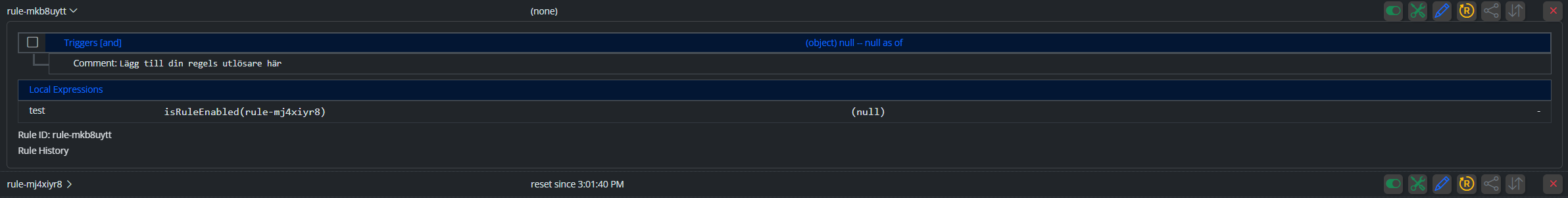

Add isRuleSet() and isRuleEnabled() functions to expressions extensions;

Implement set action for lock and passage capabilities (makes them more easily scriptable in some cases);

Fix a place in the UI where 24-hour time was not being displayed.

I have tried numerous ways to define a recurring annual period, for example from December 15 to January 15. No matter which method I try (after and before, between, after and/not after), Reactor reports "waiting for invalid date, invalid date". Some constructs also seem to cause Reactor to hang, time out and restart. For example, "before January 15" is evaluated as true, but reports "waiting for invalid date, invalid date". Does anyone have a tried and true method to define a recurring annual period? I think the "between" that I used successfully in the past may have broken with one of the updates.
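For reference, the logic of a period that wraps over the year end can be sketched in TypeScript. This is not how Reactor evaluates date conditions; `inAnnualWindow` is a hypothetical helper, and treating both end dates as inclusive is an assumption. It only shows why a December 15 to January 15 window needs an "or" rather than an "and".

```typescript
// Hypothetical helper, not Reactor's implementation.
// Months are 1-12. Each (month, day) pair is compared as one number,
// e.g. December 15 -> 1215, January 15 -> 115. Both ends are inclusive (assumption).
function inAnnualWindow(
  date: Date,
  startMonth: number,
  startDay: number,
  endMonth: number,
  endDay: number
): boolean {
  const key = (date.getMonth() + 1) * 100 + date.getDate();
  const start = startMonth * 100 + startDay;
  const end = endMonth * 100 + endDay;
  if (start <= end) {
    // Window lies within one calendar year, e.g. March 1 to April 30.
    return key >= start && key <= end;
  }
  // Window wraps over New Year, e.g. December 15 to January 15:
  // true on or after the start OR on or before the end.
  return key >= start || key <= end;
}

// December 20 and January 10 fall inside the window; June 1 does not.
console.log(inAnnualWindow(new Date(2024, 11, 20), 12, 15, 1, 15));
console.log(inAnnualWindow(new Date(2025, 0, 10), 12, 15, 1, 15));
console.log(inAnnualWindow(new Date(2025, 5, 1), 12, 15, 1, 15));
```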
