Home Assistant Voice

-

This morning I noticed an update was available for Home Assistant Voice (HAV). I installed it and it broke the HAV. I had to re-enable it, along with the Cloud service, to get it working again.

I got mine working by watching a lot of YouTube videos. I would start with the newest videos you can find; that is important. One thing I've noticed with Home Assistant in general is that updates come very often, so instructions you find today may be different after an update. I also read a lot on the Home Assistant forum, and the same goes there: read the latest posts first. What I did six months ago, if I could remember what I did, probably would not be the way to do it today. I keep planning to really learn as much as I can about Home Assistant, but life keeps getting in the way, so I continue to stumble my way through it.

Cheers! I shall try and make some time

(Is it just me that hates having to watch videos? Can't we just have instructions written down?)

C

You can find lots of written instructions in the Home Assistant forums. The problem I found was that when I went into the HA Settings, I could not find many of the items the instructions mentioned; they had already been changed by HA updates. That is why I suggested looking at the newest posts and videos. I found that the YouTube videos seem to have the latest tips and instructions. While I love all the frequent advancements and updates being made in HA, it can be rather difficult for someone new to HA to jump in and get everything working.

-

@CatmanV2 said in Home Assistant Voice:

> Cheers! I shall try and make some time
>
> (Is it just me that hates having to watch videos? Can't we just have instructions written down?)

Not just you! Such a lot of very poor narration, and videos are not easy to refer back to! A modern disease.

-

I got my HAV a couple of days ago. Out of the box, setup was actually pretty easy. I agree with others that watching videos isn't my favorite way to do that kind of thing, but there are written instructions behind the QR code they provide in the box.

It deemed my HA host system (NUC) insufficient to do speech-to-text/conversation locally, so I signed up for Home Assistant Cloud and set it up that way. I'll circle around to that again later and see if I can free myself from the cloud, but the small monthly or annual fee is within my sensibilities for ensuring high Wife Acceptability on functionality.

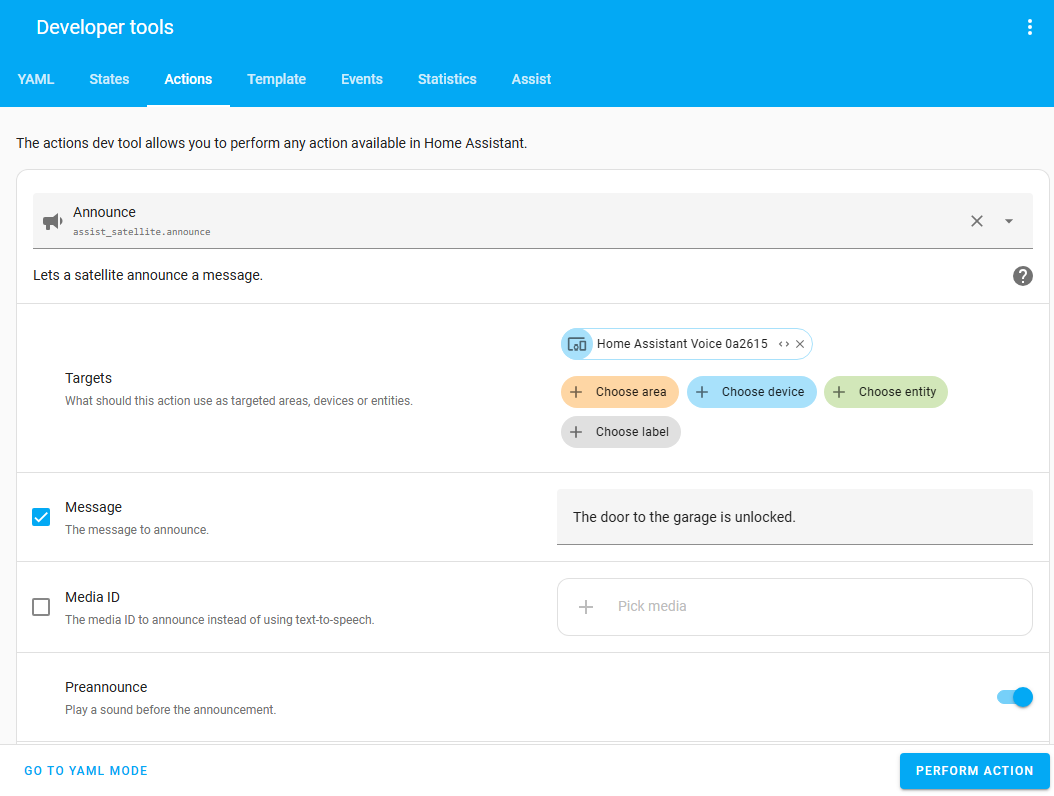

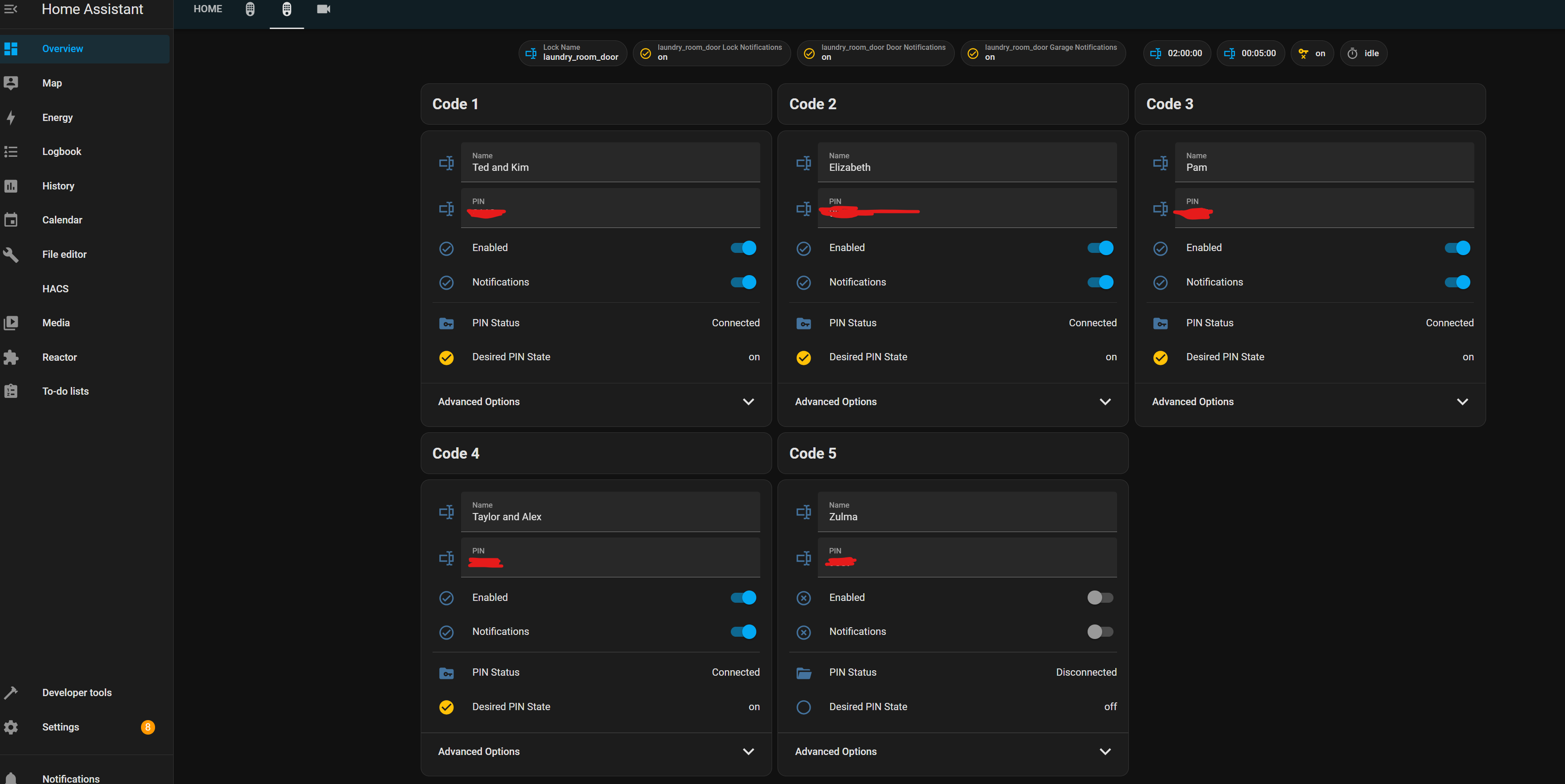

Turning lights on and off is easy. This is the most basic thing to get right, I guess, and it works great. With a few small tweaks to HassController (future build/release) I was able to get TTS output working very easily. Playing media is also pretty straightforward, and it's easy to control the brightness and color of the LED ring (maybe some future purpose for this will arise).
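For anyone who would rather script these than click through the UI, here is a minimal Python sketch of the two calls made against Home Assistant's REST API (`POST /api/services/<domain>/<service>` with a long-lived access token). It is not how HassController does it; it only shows the general shape. It assumes the `assist_satellite.announce` action for spoken output and that the LED ring is exposed as an ordinary `light` entity. The URL, token, and entity IDs are placeholders.

```python
# Minimal sketch: build Home Assistant REST service calls for a Voice satellite.
# Assumptions (placeholders, not from the post): HA_URL, TOKEN, and entity IDs.
import json
import urllib.request

HA_URL = "http://homeassistant.local:8123"  # hypothetical base URL
TOKEN = "YOUR_LONG_LIVED_ACCESS_TOKEN"      # placeholder token


def build_service_request(base_url, token, domain, service, data):
    """Build (but do not send) a POST to /api/services/<domain>/<service>."""
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/services/{domain}/{service}",
        data=json.dumps(data).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def announce(base_url, token, satellite, message):
    """Speak a message on the satellite via assist_satellite.announce."""
    return build_service_request(
        base_url, token, "assist_satellite", "announce",
        {"entity_id": satellite, "message": message},
    )


def set_led_ring(base_url, token, light, rgb, brightness_pct):
    """Color/dim the LED ring, assuming it is exposed as a light entity."""
    return build_service_request(
        base_url, token, "light", "turn_on",
        {"entity_id": light, "rgb_color": list(rgb), "brightness_pct": brightness_pct},
    )


if __name__ == "__main__":
    # Hypothetical entity ID; uncomment urlopen to actually send the call.
    req = announce(HA_URL, TOKEN, "assist_satellite.living_room_voice", "The laundry is done.")
    # urllib.request.urlopen(req, timeout=10)
    print(req.get_method(), req.full_url)
```

Building the request separately from sending it makes it easy to inspect the exact JSON before pointing it at a live system.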

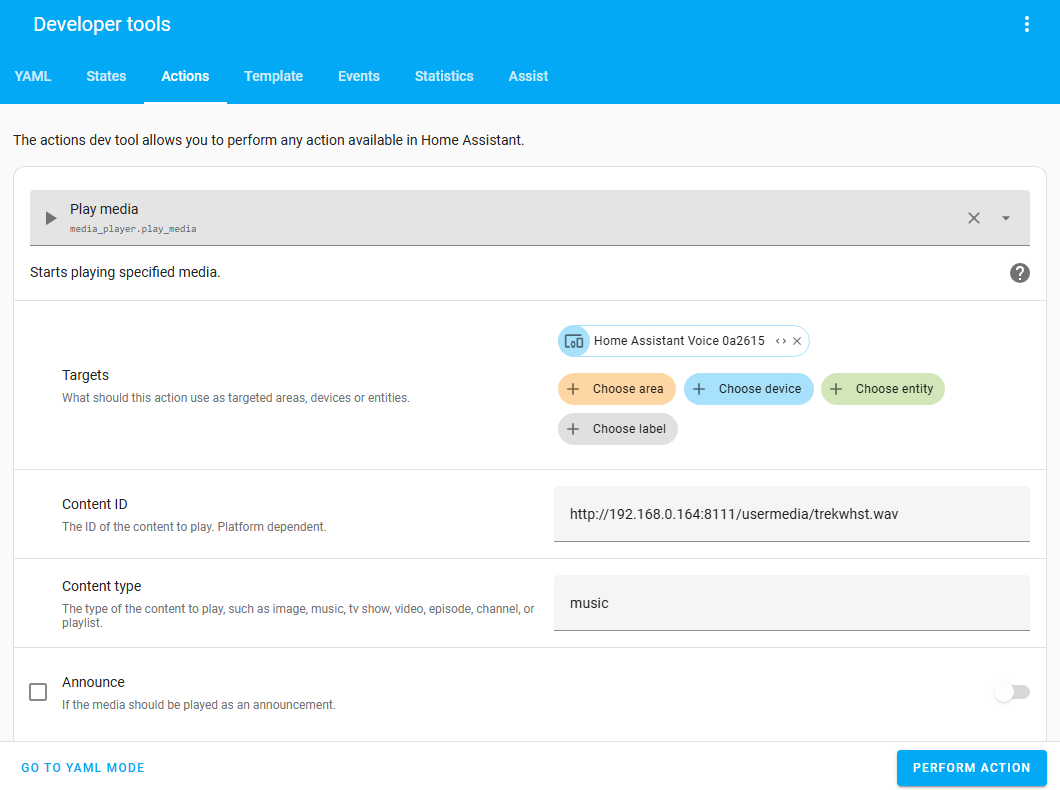

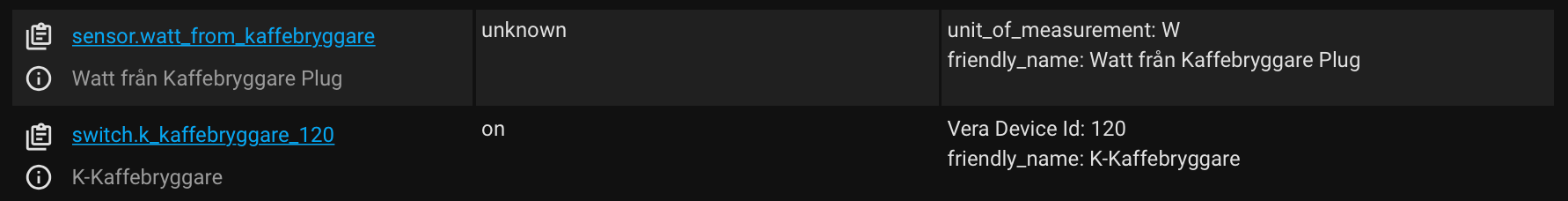

Most challenging so far was getting it to recognize a custom sentence. Actually, configuring the custom sentence was not really hard, but getting HA to do something other than speak "Intent does not exist" took some trial and error: a classic HA trial of getting the right configuration magic into the right places, restarting untold numbers of times, while combing through inconsistent examples in the pages of their online documentation. I finally got it, although I swear my last attempt was the same as a half-dozen prior attempts that didn't work. But I can finally say "Hey Jarvis, bark like a dog" and have it cause Reactor to reliably launch the play of an MP3 file on my living room Sonos SL1. Woof!
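One way to shorten that restart-and-retry loop might be to send the sentence to HA as text and look at what comes back, instead of speaking it to the device each time. The sketch below assumes HA's `POST /api/conversation/process` endpoint and a result shaped like `{"response": {"response_type": ..., "speech": {"plain": {"speech": ...}}}}`; check both against your HA version. The base URL and token are placeholders.

```python
# Minimal sketch: send a sentence to HA's conversation API as text and report
# what came back, to test a custom sentence without speaking to the device.
# Assumptions: POST /api/conversation/process; base URL and token are placeholders.
import json
import urllib.request


def build_conversation_request(base_url, token, text, language="en"):
    """Build (but do not send) a POST to /api/conversation/process."""
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/conversation/process",
        data=json.dumps({"text": text, "language": language}).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def summarize(result):
    """Return (response_type, spoken reply) from a conversation result dict."""
    response = result.get("response", {})
    speech = response.get("speech", {}).get("plain", {}).get("speech", "")
    return response.get("response_type", "unknown"), speech


def try_sentence(base_url, token, text):
    """Send one sentence and summarize HA's reply (needs a reachable HA)."""
    req = build_conversation_request(base_url, token, text)
    with urllib.request.urlopen(req, timeout=10) as resp:
        return summarize(json.load(resp))


if __name__ == "__main__":
    # Placeholder URL/token; only builds the request, nothing is sent here.
    req = build_conversation_request("http://homeassistant.local:8123", "TOKEN", "bark like a dog")
    print(req.get_method(), req.full_url)
```

An `error` response type, or a spoken reply like the one above, suggests the sentence was not matched or not handled, so you can tweak the config and call `try_sentence` again without walking over to the device.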

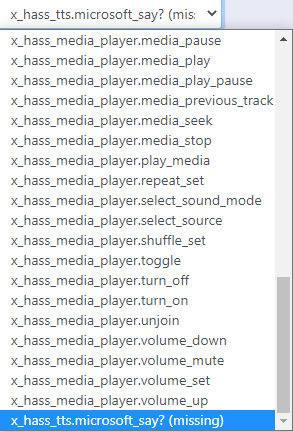

I was also able, from Reactor, to get it to speak a question, recognize a response from a list of expected responses, and store the response data in a Reactor variable. Seems useful, although I'm not yet sure how. That also took a little HassController modification (again, future release) to fully realize, and some tribal knowledge of how the HA API wants data structured for certain fields of that call. This isn't the kind of knowledge we normally encounter when calling HA services, so I'll write that up separately somewhere.
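The post doesn't show the call itself, so purely as an illustration, here is a Python sketch of what such a request body might look like. It assumes the `assist_satellite.ask_question` action found in recent HA releases, whose `answers` field is a list of objects, each with an `id` and a list of `sentences`, and that REST service calls accept a `return_response` query parameter so the matched answer comes back in the reply. None of this is confirmed to match what HassController sends; the entity ID, URL, and token are placeholders.

```python
# Minimal sketch: build an assist_satellite.ask_question call over HA's REST API.
# Assumptions: the `answers` list-of-objects shape, the `return_response` query
# parameter, and all entity IDs/URLs/tokens are illustrative placeholders.
import json
import urllib.request


def build_answers(choices):
    """Turn {"yes": ["yes", "sure"], ...} into [{"id": ..., "sentences": [...]}, ...]."""
    return [{"id": answer_id, "sentences": list(sentences)}
            for answer_id, sentences in choices.items()]


def build_ask_request(base_url, token, satellite, question, choices):
    """Build (but do not send) the ask_question service call."""
    body = {
        "entity_id": satellite,
        "question": question,
        "answers": build_answers(choices),
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/services/assist_satellite/ask_question?return_response",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_ask_request(
        "http://homeassistant.local:8123", "TOKEN",
        "assist_satellite.living_room_voice",        # hypothetical entity ID
        "Should I turn off the porch light?",
        {"yes": ["yes", "sure", "please do"], "no": ["no", "leave it on"]},
    )
    print(json.dumps(json.loads(req.data), indent=2))
```

The `id` is what you would store (for example in a Reactor variable), while `sentences` lists the phrasings that should map to it.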

These minor successes are actually very encouraging. I think this thing is going to create a lot of opportunities.

-

@toggledbits I'm watching all of this play out for now. "Leading edge vs bleeding edge" atm.

I'm very encouraged to see this doing as well in testing as it is. Anything that lets me move off of cloud-dependent, non-private Echo devices definitely has my attention.